Compare commits

109 Commits

0.39.14.1...sig-handle

| Author | SHA1 | Date |
|---|---|---|
| | 627fd368fb | |
| | f2f8469891 | |
| | 27117b324d | |
| | 3cc7b8e489 | |
| | 307ce59b38 | |
| | d1bd5b442b | |
| | a3f492bf17 | |
| | 58b0166330 | |
| | a1c3107cd6 | |
| | 8fef3ff4ab | |
| | baa25c9f9e | |
| | 488699b7d4 | |
| | cf3a1ee3e3 | |
| | daae43e9f9 | |
| | cdeedaa65c | |
| | 3c9d2ded38 | |
| | 9f4364a130 | |
| | 5bd9eaf99d | |
| | b1c51c0a65 | |
| | 232bd92389 | |
| | e6173357a9 | |
| | f2b8888aff | |
| | 9c46f175f9 | |
| | 1f27865fdf | |
| | faa42d75e0 | |
| | 3b6e6d85bb | |
| | 30d6a272ce | |
| | 291700554e | |
| | a82fad7059 | |
| | c2fe5ae0d1 | |
| | 5beefdb7cc | |
| | 872bbba71c | |
| | d578de1a35 | |
| | cdc104be10 | |
| | dd0eeca056 | |
| | a95468be08 | |
| | ace44d0e00 | |
| | ebb8b88621 | |
| | 12fc2200de | |
| | 52d3d375ba | |
| | 08117089e6 | |
| | 2ba3a6d53f | |
| | 2f636553a9 | |
| | 0bde48b282 | |
| | fae1164c0b | |
| | 169c293143 | |
| | 46cb5cff66 | |
| | 05584ea886 | |
| | 176a591357 | |
| | 15569f9592 | |
| | fd080e9e4b | |
| | 5f9e475fe0 | |
| | 34b8784f50 | |
| | 2b054ced8c | |
| | 6553980cd5 | |
| | 7c12c47204 | |
| | dbd9b470d7 | |
| | 83555a9991 | |
| | 5bfdb28bd2 | |
| | 31a6a6717b | |
| | 7da32f9ac3 | |
| | bb732d3d2e | |
| | 485e55f9ed | |
| | 601a20ea49 | |
| | 76996b9eb8 | |
| | fba2b1a39d | |
| | 4a91505af5 | |
| | 4841c79b4c | |
| | 2ba00d2e1d | |
| | 19c96f4bdd | |
| | 82b900fbf4 | |
| | 358a365303 | |
| | a07ca4b136 | |
| | ba8cf2c8cf | |
| | 3106b6688e | |
| | 2c83845dac | |
| | 111266d6fa | |
| | ead610151f | |
| | 7e1e763989 | |
| | 327cc4af34 | |
| | 6008ff516e | |
| | cdcf4b353f | |
| | 1ab70f8e86 | |
| | 8227c012a7 | |
| | c113d5fb24 | |
| | 8c8d4066d7 | |
| | 277dc9e1c1 | |
| | fc0fd1ce9d | |
| | bd6127728a | |
| | 4101ae00c6 | |
| | 62f14df3cb | |
| | 560d465c59 | |
| | 7929aeddfc | |
| | 8294519f43 | |
| | 8ba8a220b6 | |
| | aa3c8a9370 | |
| | dbb5468cdc | |
| | 329c7620fb | |
| | 1f974bfbb0 | |
| | 437c8525af | |
| | a2a1d5ae90 | |
| | 2566de2aae | |
| | dfec8dbb39 | |
| | 5cefb16e52 | |
| | 341ae24b73 | |
| | f47c2fb7f6 | |
| | 9d742446ab | |
| | e3e022b0f4 | |
| | 6de4027c27 | |

11

.github/ISSUE_TEMPLATE/bug_report.md

vendored

@@ -1,9 +1,9 @@

|

|||||||

---

|

---

|

||||||

name: Bug report

|

name: Bug report

|

||||||

about: Create a report to help us improve

|

about: Create a bug report, if you don't follow this template, your report will be DELETED

|

||||||

title: ''

|

title: ''

|

||||||

labels: ''

|

labels: 'triage'

|

||||||

assignees: ''

|

assignees: 'dgtlmoon'

|

||||||

|

|

||||||

---

|

---

|

||||||

|

|

||||||

@@ -11,15 +11,18 @@ assignees: ''

|

|||||||

A clear and concise description of what the bug is.

|

A clear and concise description of what the bug is.

|

||||||

|

|

||||||

**Version**

|

**Version**

|

||||||

In the top right area: 0....

|

*Exact version* in the top right area: 0....

|

||||||

|

|

||||||

**To Reproduce**

|

**To Reproduce**

|

||||||

|

|

||||||

Steps to reproduce the behavior:

|

Steps to reproduce the behavior:

|

||||||

1. Go to '...'

|

1. Go to '...'

|

||||||

2. Click on '....'

|

2. Click on '....'

|

||||||

3. Scroll down to '....'

|

3. Scroll down to '....'

|

||||||

4. See error

|

4. See error

|

||||||

|

|

||||||

|

! ALWAYS INCLUDE AN EXAMPLE URL WHERE IT IS POSSIBLE TO RE-CREATE THE ISSUE - USE THE 'SHARE WATCH' FEATURE AND PASTE IN THE SHARE-LINK!

|

||||||

|

|

||||||

**Expected behavior**

|

**Expected behavior**

|

||||||

A clear and concise description of what you expected to happen.

|

A clear and concise description of what you expected to happen.

|

||||||

|

|

||||||

|

|||||||

4

.github/ISSUE_TEMPLATE/feature_request.md

vendored

@@ -1,8 +1,8 @@

|

|||||||

---

|

---

|

||||||

name: Feature request

|

name: Feature request

|

||||||

about: Suggest an idea for this project

|

about: Suggest an idea for this project

|

||||||

title: ''

|

title: '[feature]'

|

||||||

labels: ''

|

labels: 'enhancement'

|

||||||

assignees: ''

|

assignees: ''

|

||||||

|

|

||||||

---

|

---

|

||||||

|

|||||||

15

.github/workflows/containers.yml

vendored

@@ -85,8 +85,8 @@ jobs:

|

|||||||

version: latest

|

version: latest

|

||||||

driver-opts: image=moby/buildkit:master

|

driver-opts: image=moby/buildkit:master

|

||||||

|

|

||||||

# master always builds :latest

|

# master branch -> :dev container tag

|

||||||

- name: Build and push :latest

|

- name: Build and push :dev

|

||||||

id: docker_build

|

id: docker_build

|

||||||

if: ${{ github.ref }} == "refs/heads/master"

|

if: ${{ github.ref }} == "refs/heads/master"

|

||||||

uses: docker/build-push-action@v2

|

uses: docker/build-push-action@v2

|

||||||

@@ -95,12 +95,12 @@ jobs:

|

|||||||

file: ./Dockerfile

|

file: ./Dockerfile

|

||||||

push: true

|

push: true

|

||||||

tags: |

|

tags: |

|

||||||

${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:latest,ghcr.io/${{ github.repository }}:latest

|

${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:dev,ghcr.io/${{ github.repository }}:dev

|

||||||

platforms: linux/amd64,linux/arm64,linux/arm/v6,linux/arm/v7

|

platforms: linux/amd64,linux/arm64,linux/arm/v6,linux/arm/v7

|

||||||

cache-from: type=local,src=/tmp/.buildx-cache

|

cache-from: type=local,src=/tmp/.buildx-cache

|

||||||

cache-to: type=local,dest=/tmp/.buildx-cache

|

cache-to: type=local,dest=/tmp/.buildx-cache

|

||||||

|

|

||||||

# A new tagged release is required, which builds :tag

|

# A new tagged release is required, which builds :tag and :latest

|

||||||

- name: Build and push :tag

|

- name: Build and push :tag

|

||||||

id: docker_build_tag_release

|

id: docker_build_tag_release

|

||||||

if: github.event_name == 'release' && startsWith(github.event.release.tag_name, '0.')

|

if: github.event_name == 'release' && startsWith(github.event.release.tag_name, '0.')

|

||||||

@@ -110,7 +110,10 @@ jobs:

|

|||||||

file: ./Dockerfile

|

file: ./Dockerfile

|

||||||

push: true

|

push: true

|

||||||

tags: |

|

tags: |

|

||||||

${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:${{ github.event.release.tag_name }},ghcr.io/dgtlmoon/changedetection.io:${{ github.event.release.tag_name }}

|

${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:${{ github.event.release.tag_name }}

|

||||||

|

ghcr.io/dgtlmoon/changedetection.io:${{ github.event.release.tag_name }}

|

||||||

|

${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:latest

|

||||||

|

ghcr.io/dgtlmoon/changedetection.io:latest

|

||||||

platforms: linux/amd64,linux/arm64,linux/arm/v6,linux/arm/v7

|

platforms: linux/amd64,linux/arm64,linux/arm/v6,linux/arm/v7

|

||||||

cache-from: type=local,src=/tmp/.buildx-cache

|

cache-from: type=local,src=/tmp/.buildx-cache

|

||||||

cache-to: type=local,dest=/tmp/.buildx-cache

|

cache-to: type=local,dest=/tmp/.buildx-cache

|

||||||

@@ -125,5 +128,3 @@ jobs:

|

|||||||

key: ${{ runner.os }}-buildx-${{ github.sha }}

|

key: ${{ runner.os }}-buildx-${{ github.sha }}

|

||||||

restore-keys: |

|

restore-keys: |

|

||||||

${{ runner.os }}-buildx-

|

${{ runner.os }}-buildx-

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

1

.gitignore

vendored

@@ -8,5 +8,6 @@ __pycache__

|

|||||||

build

|

build

|

||||||

dist

|

dist

|

||||||

venv

|

venv

|

||||||

|

test-datastore

|

||||||

*.egg-info*

|

*.egg-info*

|

||||||

.vscode/settings.json

|

.vscode/settings.json

|

||||||

|

|||||||

34

README.md

@@ -3,16 +3,18 @@

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Self-Hosted, Open Source, Change Monitoring of Web Pages

|

## Web Site Change Detection, Monitoring and Notification - Self-Hosted or SaaS.

|

||||||

|

|

||||||

_Know when web pages change! Stay ontop of new information!_

|

_Know when web pages change! Stay ontop of new information! get notifications when important website content changes_

|

||||||

|

|

||||||

Live your data-life *pro-actively* instead of *re-actively*.

|

Live your data-life *pro-actively* instead of *re-actively*.

|

||||||

|

|

||||||

Free, Open-source web page monitoring, notification and change detection. Don't have time? [**Try our $6.99/month subscription - unlimited checks and watches!**](https://lemonade.changedetection.io/start)

|

Free, Open-source web page monitoring, notification and change detection. Don't have time? [**Try our $6.99/month subscription - unlimited checks and watches!**](https://lemonade.changedetection.io/start)

|

||||||

|

|

||||||

|

[](https://discord.gg/XJZy7QK3ja) [ ](https://www.youtube.com/channel/UCbS09q1TRf0o4N2t-WA3emQ) [](https://www.linkedin.com/company/changedetection-io/)

|

||||||

|

|

||||||

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start)

|

|

||||||

|

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start)

|

||||||

|

|

||||||

|

|

||||||

**Get your own private instance now! Let us host it for you!**

|

**Get your own private instance now! Let us host it for you!**

|

||||||

@@ -33,6 +35,7 @@ Free, Open-source web page monitoring, notification and change detection. Don't

|

|||||||

- New software releases, security advisories when you're not on their mailing list.

|

- New software releases, security advisories when you're not on their mailing list.

|

||||||

- Festivals with changes

|

- Festivals with changes

|

||||||

- Realestate listing changes

|

- Realestate listing changes

|

||||||

|

- Know when your favourite whiskey is on sale, or other special deals are announced before anyone else

|

||||||

- COVID related news from government websites

|

- COVID related news from government websites

|

||||||

- University/organisation news from their website

|

- University/organisation news from their website

|

||||||

- Detect and monitor changes in JSON API responses

|

- Detect and monitor changes in JSON API responses

|

||||||

@@ -48,26 +51,37 @@ _Need an actual Chrome runner with Javascript support? We support fetching via W

|

|||||||

|

|

||||||

## Screenshots

|

## Screenshots

|

||||||

|

|

||||||

Examining differences in content.

|

### Examine differences in content.

|

||||||

|

|

||||||

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot-diff.png" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />

|

Easily see what changed, examine by word, line, or individual character.

|

||||||

|

|

||||||

|

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot-diff.png" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />

|

||||||

|

|

||||||

Please :star: star :star: this project and help it grow! https://github.com/dgtlmoon/changedetection.io/

|

Please :star: star :star: this project and help it grow! https://github.com/dgtlmoon/changedetection.io/

|

||||||

|

|

||||||

|

### Filter by elements using the Visual Selector tool.

|

||||||

|

|

||||||

|

Available when connected to a <a href="https://github.com/dgtlmoon/changedetection.io/wiki/Playwright-content-fetcher">playwright content fetcher</a> (included as part of our subscription service)

|

||||||

|

|

||||||

|

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/visualselector-anim.gif" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />

|

||||||

|

|

||||||

## Installation

|

## Installation

|

||||||

|

|

||||||

### Docker

|

### Docker

|

||||||

|

|

||||||

With Docker composer, just clone this repository and..

|

With Docker composer, just clone this repository and..

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

$ docker-compose up -d

|

$ docker-compose up -d

|

||||||

```

|

```

|

||||||

|

|

||||||

Docker standalone

|

Docker standalone

|

||||||

```bash

|

```bash

|

||||||

$ docker run -d --restart always -p "127.0.0.1:5000:5000" -v datastore-volume:/datastore --name changedetection.io dgtlmoon/changedetection.io

|

$ docker run -d --restart always -p "127.0.0.1:5000:5000" -v datastore-volume:/datastore --name changedetection.io dgtlmoon/changedetection.io

|

||||||

```

|

```

|

||||||

|

|

||||||

|

`:latest` tag is our latest stable release, `:dev` tag is our bleeding edge `master` branch.

|

||||||

|

|

||||||

### Windows

|

### Windows

|

||||||

|

|

||||||

See the install instructions at the wiki https://github.com/dgtlmoon/changedetection.io/wiki/Microsoft-Windows

|

See the install instructions at the wiki https://github.com/dgtlmoon/changedetection.io/wiki/Microsoft-Windows

|

||||||

@@ -107,7 +121,7 @@ See the wiki for more information https://github.com/dgtlmoon/changedetection.io

|

|||||||

## Filters

|

## Filters

|

||||||

XPath, JSONPath and CSS support comes baked in! You can be as specific as you need, use XPath exported from various XPath element query creation tools.

|

XPath, JSONPath and CSS support comes baked in! You can be as specific as you need, use XPath exported from various XPath element query creation tools.

|

||||||

|

|

||||||

(We support LXML re:test, re:math and re:replace.)

|

(We support LXML `re:test`, `re:math` and `re:replace`.)

|

||||||

|

|

||||||

## Notifications

|

## Notifications

|

||||||

|

|

||||||

@@ -129,7 +143,7 @@ Just some examples

|

|||||||

|

|

||||||

<a href="https://github.com/caronc/apprise#popular-notification-services">And everything else in this list!</a>

|

<a href="https://github.com/caronc/apprise#popular-notification-services">And everything else in this list!</a>

|

||||||

|

|

||||||

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot-notifications.png" style="max-width:100%;" alt="Self-hosted web page change monitoring notifications" title="Self-hosted web page change monitoring notifications" />

|

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot-notifications.png" style="max-width:100%;" alt="Self-hosted web page change monitoring notifications" title="Self-hosted web page change monitoring notifications" />

|

||||||

|

|

||||||

Now you can also customise your notification content!

|

Now you can also customise your notification content!

|

||||||

|

|

||||||

@@ -137,11 +151,11 @@ Now you can also customise your notification content!

|

|||||||

|

|

||||||

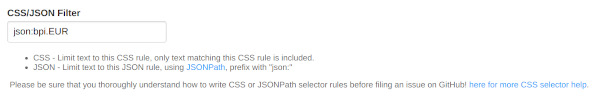

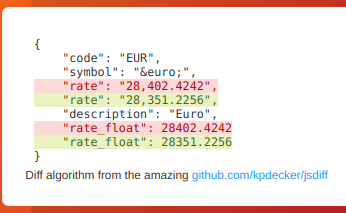

Detect changes and monitor data in JSON API's by using the built-in JSONPath selectors as a filter / selector.

|

Detect changes and monitor data in JSON API's by using the built-in JSONPath selectors as a filter / selector.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

This will re-parse the JSON and apply formatting to the text, making it super easy to monitor and detect changes in JSON API results

|

This will re-parse the JSON and apply formatting to the text, making it super easy to monitor and detect changes in JSON API results

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### Parse JSON embedded in HTML!

|

### Parse JSON embedded in HTML!

|

||||||

|

|

||||||

@@ -177,7 +191,7 @@ Or directly donate an amount PayPal [:

|

||||||

|

import sys

|

||||||

|

print('Shutdown: Got SIGCHLD')

|

||||||

|

# https://stackoverflow.com/questions/40453496/python-multiprocessing-capturing-signals-to-restart-child-processes-or-shut-do

|

||||||

|

pid, status = os.waitpid(-1, os.WNOHANG | os.WUNTRACED | os.WCONTINUED)

|

||||||

|

|

||||||

|

print('Sub-process: pid %d status %d' % (pid, status))

|

||||||

|

if status != 0:

|

||||||

|

sys.exit(1)

|

||||||

|

|

||||||

|

raise SystemExit

|

||||||

|

|

||||||

if __name__ == '__main__':

|

if __name__ == '__main__':

|

||||||

changedetection.main()

|

signal.signal(signal.SIGCHLD, sigterm_handler)

|

||||||

|

# The only way I could find to get Flask to shutdown, is to wrap it and then rely on the subsystem issuing SIGTERM/SIGKILL

|

||||||

|

parse_process = multiprocessing.Process(target=changedetection.main)

|

||||||

|

parse_process.daemon = True

|

||||||

|

parse_process.start()

|

||||||

|

import time

|

||||||

|

|

||||||

|

try:

|

||||||

|

while True:

|

||||||

|

time.sleep(1)

|

||||||

|

|

||||||

|

except KeyboardInterrupt:

|

||||||

|

#parse_process.terminate() not needed, because this process will issue it to the sub-process anyway

|

||||||

|

print ("Exited - CTRL+C")

|

||||||

|

|||||||
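Review note on the signal handler in the hunk above: it compares the raw `status` returned by `os.waitpid()` against 0. On POSIX that value is an encoded wait status rather than the child's exit code, and with `os.WNOHANG` the call can also return `(0, 0)` when no child has changed state. A minimal sketch of decoding the status with the standard `os.WIF*` helpers follows; `describe_wait_status` is a hypothetical helper name and is not part of this change set.

```python
import os


def describe_wait_status(status: int) -> str:
    """Turn a raw POSIX wait status (as returned by os.waitpid) into text.

    Hypothetical helper for illustration only; not part of the diff.
    """
    if os.WIFEXITED(status):
        # Normal exit: the exit code lives in the high byte of the status.
        return "exited with code %d" % os.WEXITSTATUS(status)
    if os.WIFSIGNALED(status):
        # Child was killed by a signal such as SIGTERM or SIGKILL.
        return "terminated by signal %d" % os.WTERMSIG(status)
    if os.WIFSTOPPED(status):
        # Only reported when waitpid was called with os.WUNTRACED.
        return "stopped by signal %d" % os.WSTOPSIG(status)
    return "continued or unknown state"
```

A handler could call this on the `status` it receives before deciding whether to exit. On Python 3.9 and later, `os.waitstatus_to_exitcode()` offers a similar conversion for exited or signalled children.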

1

changedetectionio/.gitignore

vendored

@@ -1 +1,2 @@

|

|||||||

test-datastore

|

test-datastore

|

||||||

|

package-lock.json

|

||||||

|

|||||||

@@ -20,6 +20,7 @@ from copy import deepcopy

|

|||||||

from threading import Event

|

from threading import Event

|

||||||

|

|

||||||

import flask_login

|

import flask_login

|

||||||

|

import logging

|

||||||

import pytz

|

import pytz

|

||||||

import timeago

|

import timeago

|

||||||

from feedgen.feed import FeedGenerator

|

from feedgen.feed import FeedGenerator

|

||||||

@@ -43,7 +44,7 @@ from flask_wtf import CSRFProtect

|

|||||||

from changedetectionio import html_tools

|

from changedetectionio import html_tools

|

||||||

from changedetectionio.api import api_v1

|

from changedetectionio.api import api_v1

|

||||||

|

|

||||||

__version__ = '0.39.14'

|

__version__ = '0.39.17.1'

|

||||||

|

|

||||||

datastore = None

|

datastore = None

|

||||||

|

|

||||||

@@ -53,7 +54,7 @@ ticker_thread = None

|

|||||||

|

|

||||||

extra_stylesheets = []

|

extra_stylesheets = []

|

||||||

|

|

||||||

update_q = queue.Queue()

|

update_q = queue.PriorityQueue()

|

||||||

|

|

||||||

notification_q = queue.Queue()

|

notification_q = queue.Queue()

|

||||||

|

|

||||||

@@ -107,7 +108,7 @@ def _jinja2_filter_datetime(watch_obj, format="%Y-%m-%d %H:%M:%S"):

|

|||||||

# Worker thread tells us which UUID it is currently processing.

|

# Worker thread tells us which UUID it is currently processing.

|

||||||

for t in running_update_threads:

|

for t in running_update_threads:

|

||||||

if t.current_uuid == watch_obj['uuid']:

|

if t.current_uuid == watch_obj['uuid']:

|

||||||

return "Checking now.."

|

return '<span class="loader"></span><span> Checking now</span>'

|

||||||

|

|

||||||

if watch_obj['last_checked'] == 0:

|

if watch_obj['last_checked'] == 0:

|

||||||

return 'Not yet'

|

return 'Not yet'

|

||||||

@@ -178,6 +179,10 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

global datastore

|

global datastore

|

||||||

datastore = datastore_o

|

datastore = datastore_o

|

||||||

|

|

||||||

|

# so far just for read-only via tests, but this will be moved eventually to be the main source

|

||||||

|

# (instead of the global var)

|

||||||

|

app.config['DATASTORE']=datastore_o

|

||||||

|

|

||||||

#app.config.update(config or {})

|

#app.config.update(config or {})

|

||||||

|

|

||||||

login_manager = flask_login.LoginManager(app)

|

login_manager = flask_login.LoginManager(app)

|

||||||

@@ -293,7 +298,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

# Sort by last_changed and add the uuid which is usually the key..

|

# Sort by last_changed and add the uuid which is usually the key..

|

||||||

sorted_watches = []

|

sorted_watches = []

|

||||||

|

|

||||||

# @todo needs a .itemsWithTag() or something

|

# @todo needs a .itemsWithTag() or something - then we can use that in Jinaj2 and throw this away

|

||||||

for uuid, watch in datastore.data['watching'].items():

|

for uuid, watch in datastore.data['watching'].items():

|

||||||

|

|

||||||

if limit_tag != None:

|

if limit_tag != None:

|

||||||

@@ -317,25 +322,19 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

for watch in sorted_watches:

|

for watch in sorted_watches:

|

||||||

|

|

||||||

dates = list(watch['history'].keys())

|

dates = list(watch.history.keys())

|

||||||

# Re #521 - Don't bother processing this one if theres less than 2 snapshots, means we never had a change detected.

|

# Re #521 - Don't bother processing this one if theres less than 2 snapshots, means we never had a change detected.

|

||||||

if len(dates) < 2:

|

if len(dates) < 2:

|

||||||

continue

|

continue

|

||||||

|

|

||||||

# Convert to int, sort and back to str again

|

prev_fname = watch.history[dates[-2]]

|

||||||

# @todo replace datastore getter that does this automatically

|

|

||||||

dates = [int(i) for i in dates]

|

|

||||||

dates.sort(reverse=True)

|

|

||||||

dates = [str(i) for i in dates]

|

|

||||||

prev_fname = watch['history'][dates[1]]

|

|

||||||

|

|

||||||

if not watch['viewed']:

|

if not watch.viewed:

|

||||||

# Re #239 - GUID needs to be individual for each event

|

# Re #239 - GUID needs to be individual for each event

|

||||||

# @todo In the future make this a configurable link back (see work on BASE_URL https://github.com/dgtlmoon/changedetection.io/pull/228)

|

# @todo In the future make this a configurable link back (see work on BASE_URL https://github.com/dgtlmoon/changedetection.io/pull/228)

|

||||||

guid = "{}/{}".format(watch['uuid'], watch['last_changed'])

|

guid = "{}/{}".format(watch['uuid'], watch['last_changed'])

|

||||||

fe = fg.add_entry()

|

fe = fg.add_entry()

|

||||||

|

|

||||||

|

|

||||||

# Include a link to the diff page, they will have to login here to see if password protection is enabled.

|

# Include a link to the diff page, they will have to login here to see if password protection is enabled.

|

||||||

# Description is the page you watch, link takes you to the diff JS UI page

|

# Description is the page you watch, link takes you to the diff JS UI page

|

||||||

base_url = datastore.data['settings']['application']['base_url']

|

base_url = datastore.data['settings']['application']['base_url']

|

||||||

@@ -350,18 +349,19 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

watch_title = watch.get('title') if watch.get('title') else watch.get('url')

|

watch_title = watch.get('title') if watch.get('title') else watch.get('url')

|

||||||

fe.title(title=watch_title)

|

fe.title(title=watch_title)

|

||||||

latest_fname = watch['history'][dates[0]]

|

latest_fname = watch.history[dates[-1]]

|

||||||

|

|

||||||

html_diff = diff.render_diff(prev_fname, latest_fname, include_equal=False, line_feed_sep="</br>")

|

html_diff = diff.render_diff(prev_fname, latest_fname, include_equal=False, line_feed_sep="</br>")

|

||||||

fe.description(description="<![CDATA[<html><body><h4>{}</h4>{}</body></html>".format(watch_title, html_diff))

|

fe.content(content="<html><body><h4>{}</h4>{}</body></html>".format(watch_title, html_diff),

|

||||||

|

type='CDATA')

|

||||||

|

|

||||||

fe.guid(guid, permalink=False)

|

fe.guid(guid, permalink=False)

|

||||||

dt = datetime.datetime.fromtimestamp(int(watch['newest_history_key']))

|

dt = datetime.datetime.fromtimestamp(int(watch.newest_history_key))

|

||||||

dt = dt.replace(tzinfo=pytz.UTC)

|

dt = dt.replace(tzinfo=pytz.UTC)

|

||||||

fe.pubDate(dt)

|

fe.pubDate(dt)

|

||||||

|

|

||||||

response = make_response(fg.rss_str())

|

response = make_response(fg.rss_str())

|

||||||

response.headers.set('Content-Type', 'application/rss+xml')

|

response.headers.set('Content-Type', 'application/rss+xml;charset=utf-8')

|

||||||

return response

|

return response

|

||||||

|

|

||||||

@app.route("/", methods=['GET'])

|

@app.route("/", methods=['GET'])

|

||||||
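Review note on the RSS hunks above: they drop the int-convert-and-reverse-sort step and index `dates[-2]` and `dates[-1]` instead. That yields the previous and latest snapshots only if `watch.history` already returns its keys in ascending timestamp order, which is not visible in this diff. The sketch below sorts explicitly to make the assumption concrete; `previous_and_latest` and the sample paths in the usage are hypothetical.

```python
def previous_and_latest(history):
    """Return (previous_path, latest_path) from a {timestamp_str: path} mapping.

    Returns None when fewer than two snapshots exist, mirroring the
    `len(dates) < 2` guard in the hunk above.
    """
    # Keys are timestamp strings; sort numerically, because plain string
    # sorting would place "999" after "1000".
    keys = sorted(history.keys(), key=int)
    if len(keys) < 2:
        return None
    return history[keys[-2]], history[keys[-1]]


if __name__ == "__main__":
    sample = {"1000": "b.txt", "999": "a.txt", "1001": "c.txt"}
    print(previous_and_latest(sample))
```

If the history property already guarantees ascending keys, the explicit sort is redundant but harmless.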

@@ -370,20 +370,20 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

from changedetectionio import forms

|

from changedetectionio import forms

|

||||||

|

|

||||||

limit_tag = request.args.get('tag')

|

limit_tag = request.args.get('tag')

|

||||||

pause_uuid = request.args.get('pause')

|

|

||||||

|

|

||||||

# Redirect for the old rss path which used the /?rss=true

|

# Redirect for the old rss path which used the /?rss=true

|

||||||

if request.args.get('rss'):

|

if request.args.get('rss'):

|

||||||

return redirect(url_for('rss', tag=limit_tag))

|

return redirect(url_for('rss', tag=limit_tag))

|

||||||

|

|

||||||

if pause_uuid:

|

op = request.args.get('op')

|

||||||

try:

|

if op:

|

||||||

datastore.data['watching'][pause_uuid]['paused'] ^= True

|

uuid = request.args.get('uuid')

|

||||||

datastore.needs_write = True

|

if op == 'pause':

|

||||||

|

datastore.data['watching'][uuid]['paused'] ^= True

|

||||||

|

elif op == 'mute':

|

||||||

|

datastore.data['watching'][uuid]['notification_muted'] ^= True

|

||||||

|

|

||||||

|

datastore.needs_write = True

|

||||||

return redirect(url_for('index', tag = limit_tag))

|

return redirect(url_for('index', tag = limit_tag))

|

||||||

except KeyError:

|

|

||||||

pass

|

|

||||||

|

|

||||||

# Sort by last_changed and add the uuid which is usually the key..

|

# Sort by last_changed and add the uuid which is usually the key..

|

||||||

sorted_watches = []

|

sorted_watches = []

|

||||||
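Review note on the `op` handling above: the new code toggles a boolean field with `^= True`. As far as this hunk shows, the old `try`/`except KeyError` guard is gone, so an unknown `uuid`, or a request without a `uuid` argument, would raise. A guarded variant might look like the sketch below; `OP_FIELDS` and `apply_op` are hypothetical names, and a plain dict stands in for `datastore.data['watching']`.

```python
# Map each supported op to the boolean watch field it toggles.
# The field names are taken from the hunk above; the mapping is illustrative.
OP_FIELDS = {"pause": "paused", "mute": "notification_muted"}


def apply_op(watching, op, uuid):
    """Toggle the field for `op` on watch `uuid`.

    Returns True if something was toggled, False for an unknown op or uuid.
    """
    field = OP_FIELDS.get(op)
    watch = watching.get(uuid)
    if field is None or watch is None:
        return False
    watch[field] ^= True  # bool XOR True flips the value
    return True
```

The caller would set `datastore.needs_write = True` only when `apply_op` returns True.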

@@ -403,23 +403,22 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

watch['uuid'] = uuid

|

watch['uuid'] = uuid

|

||||||

sorted_watches.append(watch)

|

sorted_watches.append(watch)

|

||||||

|

|

||||||

sorted_watches.sort(key=lambda x: x['last_changed'], reverse=True)

|

|

||||||

|

|

||||||

existing_tags = datastore.get_all_tags()

|

existing_tags = datastore.get_all_tags()

|

||||||

|

|

||||||

form = forms.quickWatchForm(request.form)

|

form = forms.quickWatchForm(request.form)

|

||||||

|

|

||||||

output = render_template("watch-overview.html",

|

output = render_template("watch-overview.html",

|

||||||

form=form,

|

form=form,

|

||||||

watches=sorted_watches,

|

watches=sorted_watches,

|

||||||

tags=existing_tags,

|

tags=existing_tags,

|

||||||

active_tag=limit_tag,

|

active_tag=limit_tag,

|

||||||

app_rss_token=datastore.data['settings']['application']['rss_access_token'],

|

app_rss_token=datastore.data['settings']['application']['rss_access_token'],

|

||||||

has_unviewed=datastore.data['has_unviewed'],

|

has_unviewed=datastore.has_unviewed,

|

||||||

# Don't link to hosting when we're on the hosting environment

|

# Don't link to hosting when we're on the hosting environment

|

||||||

hosted_sticky=os.getenv("SALTED_PASS", False) == False,

|

hosted_sticky=os.getenv("SALTED_PASS", False) == False,

|

||||||

guid=datastore.data['app_guid'],

|

guid=datastore.data['app_guid'],

|

||||||

queued_uuids=update_q.queue)

|

queued_uuids=[uuid for p,uuid in update_q.queue])

|

||||||

|

|

||||||

|

|

||||||

if session.get('share-link'):

|

if session.get('share-link'):

|

||||||

del(session['share-link'])

|

del(session['share-link'])

|

||||||

return output

|

return output

|

||||||
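Review note on the queue change: with `update_q` now a `queue.PriorityQueue()`, each queued item appears to be a tuple, which is why the template argument unpacks `(p, uuid)` pairs. A small sketch of that shape follows, assuming lower numbers are served first (standard `PriorityQueue` behaviour). The priorities and UUID strings are made up for illustration; the values the project actually enqueues are not shown in this hunk.

```python
import queue


def build_update_queue():
    """Build a queue of (priority, uuid) tuples; values are illustrative only."""
    q = queue.PriorityQueue()
    q.put((5, "uuid-scheduled"))
    q.put((1, "uuid-recheck-now"))
    q.put((5, "uuid-another"))
    return q


def queued_uuids(q):
    # q.queue is the underlying heap list, so this is heap order rather than
    # strict priority order. That is sufficient for membership checks.
    return [uuid for _priority, uuid in q.queue]


if __name__ == "__main__":
    update_q = build_update_queue()
    print(queued_uuids(update_q))
    print(update_q.get())  # the item with the lowest priority number
```

Because tuples compare element by element, two entries with the same priority fall back to comparing the UUID strings, so the second element must stay comparable.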

@@ -431,7 +430,9 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

def ajax_callback_send_notification_test():

|

def ajax_callback_send_notification_test():

|

||||||

|

|

||||||

import apprise

|

import apprise

|

||||||

apobj = apprise.Apprise()

|

from .apprise_asset import asset

|

||||||

|

apobj = apprise.Apprise(asset=asset)

|

||||||

|

|

||||||

|

|

||||||

# validate URLS

|

# validate URLS

|

||||||

if not len(request.form['notification_urls'].strip()):

|

if not len(request.form['notification_urls'].strip()):

|

||||||

@@ -456,25 +457,39 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

return 'OK'

|

return 'OK'

|

||||||

|

|

||||||

@app.route("/scrub", methods=['GET', 'POST'])

|

|

||||||

|

@app.route("/clear_history/<string:uuid>", methods=['GET'])

|

||||||

@login_required

|

@login_required

|

||||||

def scrub_page():

|

def clear_watch_history(uuid):

|

||||||

|

try:

|

||||||

|

datastore.clear_watch_history(uuid)

|

||||||

|

except KeyError:

|

||||||

|

flash('Watch not found', 'error')

|

||||||

|

else:

|

||||||

|

flash("Cleared snapshot history for watch {}".format(uuid))

|

||||||

|

|

||||||

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

|

@app.route("/clear_history", methods=['GET', 'POST'])

|

||||||

|

@login_required

|

||||||

|

def clear_all_history():

|

||||||

|

|

||||||

if request.method == 'POST':

|

if request.method == 'POST':

|

||||||

confirmtext = request.form.get('confirmtext')

|

confirmtext = request.form.get('confirmtext')

|

||||||

|

|

||||||

if confirmtext == 'scrub':

|

if confirmtext == 'clear':

|

||||||

changes_removed = 0

|

changes_removed = 0

|

||||||

for uuid in datastore.data['watching'].keys():

|

for uuid in datastore.data['watching'].keys():

|

||||||

datastore.scrub_watch(uuid)

|

datastore.clear_watch_history(uuid)

|

||||||

|

#TODO: KeyError not checked, as it is above

|

||||||

|

|

||||||

flash("Cleared all snapshot history")

|

flash("Cleared snapshot history for all watches")

|

||||||

else:

|

else:

|

||||||

flash('Incorrect confirmation text.', 'error')

|

flash('Incorrect confirmation text.', 'error')

|

||||||

|

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

output = render_template("scrub.html")

|

output = render_template("clear_all_history.html")

|

||||||

return output

|

return output

|

||||||

|

|

||||||

|

|

||||||
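Review note on the new `clear_watch_history` route: it uses `try`/`except KeyError`/`else`, so the success message is only flashed when no exception was raised. The sketch below shows the same control flow without Flask. `clear_watch_history` here is a hypothetical stand-in for the datastore method, and the returned tuples imitate the `(message, category)` arguments passed to `flash()`.

```python
def clear_watch_history(watching, uuid):
    """Hypothetical stand-in for datastore.clear_watch_history()."""
    # Raises KeyError when the uuid is unknown.
    watching[uuid]["history"] = {}


def clear_one(watching, uuid):
    """Return the (message, category) pair the route would flash."""
    try:
        clear_watch_history(watching, uuid)
    except KeyError:
        return ("Watch not found", "error")
    else:
        # Runs only when the try block completed without raising.
        return ("Cleared snapshot history for watch {}".format(uuid), "message")
```

The bulk route in the same hunk carries a TODO noting that `KeyError` is not checked there; a helper shaped like `clear_one` could cover both paths.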

@@ -491,10 +506,10 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

# 0 means that theres only one, so that there should be no 'unviewed' history available

|

# 0 means that theres only one, so that there should be no 'unviewed' history available

|

||||||

if newest_history_key == 0:

|

if newest_history_key == 0:

|

||||||

newest_history_key = list(datastore.data['watching'][uuid]['history'].keys())[0]

|

newest_history_key = list(datastore.data['watching'][uuid].history.keys())[0]

|

||||||

|

|

||||||

if newest_history_key:

|

if newest_history_key:

|

||||||

with open(datastore.data['watching'][uuid]['history'][newest_history_key],

|

with open(datastore.data['watching'][uuid].history[newest_history_key],

|

||||||

encoding='utf-8') as file:

|

encoding='utf-8') as file:

|

||||||

raw_content = file.read()

|

raw_content = file.read()

|

||||||

|

|

||||||

@@ -564,6 +579,9 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

if request.method == 'POST' and form.validate():

|

if request.method == 'POST' and form.validate():

|

||||||

extra_update_obj = {}

|

extra_update_obj = {}

|

||||||

|

|

||||||

|

if request.args.get('unpause_on_save'):

|

||||||

|

extra_update_obj['paused'] = False

|

||||||

|

|

||||||

# Re #110, if they submit the same as the default value, set it to None, so we continue to follow the default

|

# Re #110, if they submit the same as the default value, set it to None, so we continue to follow the default

|

||||||

# Assume we use the default value, unless something relevant is different, then use the form value

|

# Assume we use the default value, unless something relevant is different, then use the form value

|

||||||

# values could be None, 0 etc.

|

# values could be None, 0 etc.

|

||||||

@@ -588,12 +606,12 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

# Reset the previous_md5 so we process a new snapshot including stripping ignore text.

|

# Reset the previous_md5 so we process a new snapshot including stripping ignore text.

|

||||||

if form_ignore_text:

|

if form_ignore_text:

|

||||||

if len(datastore.data['watching'][uuid]['history']):

|

if len(datastore.data['watching'][uuid].history):

|

||||||

extra_update_obj['previous_md5'] = get_current_checksum_include_ignore_text(uuid=uuid)

|

extra_update_obj['previous_md5'] = get_current_checksum_include_ignore_text(uuid=uuid)

|

||||||

|

|

||||||

# Reset the previous_md5 so we process a new snapshot including stripping ignore text.

|

# Reset the previous_md5 so we process a new snapshot including stripping ignore text.

|

||||||

if form.css_filter.data.strip() != datastore.data['watching'][uuid]['css_filter']:

|

if form.css_filter.data.strip() != datastore.data['watching'][uuid]['css_filter']:

|

||||||

if len(datastore.data['watching'][uuid]['history']):

|

if len(datastore.data['watching'][uuid].history):

|

||||||

extra_update_obj['previous_md5'] = get_current_checksum_include_ignore_text(uuid=uuid)

|

extra_update_obj['previous_md5'] = get_current_checksum_include_ignore_text(uuid=uuid)

|

||||||

|

|

||||||

# Be sure proxy value is None

|

# Be sure proxy value is None

|

||||||

@@ -603,14 +621,17 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

datastore.data['watching'][uuid].update(form.data)

|

datastore.data['watching'][uuid].update(form.data)

|

||||||

datastore.data['watching'][uuid].update(extra_update_obj)

|

datastore.data['watching'][uuid].update(extra_update_obj)

|

||||||

|

|

||||||

|

if request.args.get('unpause_on_save'):

|

||||||

|

flash("Updated watch - unpaused!.")

|

||||||

|

else:

|

||||||

flash("Updated watch.")

|

flash("Updated watch.")

|

||||||

|

|

||||||

# Re #286 - We wait for syncing new data to disk in another thread every 60 seconds

|

# Re #286 - We wait for syncing new data to disk in another thread every 60 seconds

|

||||||

# But in the case something is added we should save straight away

|

# But in the case something is added we should save straight away

|

||||||

datastore.needs_write_urgent = True

|

datastore.needs_write_urgent = True

|

||||||

|

|

||||||

# Queue the watch for immediate recheck

|

# Queue the watch for immediate recheck, with a higher priority

|

||||||

update_q.put(uuid)

|

update_q.put((1, uuid))

|

||||||

|

|

||||||

# Diff page [edit] link should go back to diff page

|

# Diff page [edit] link should go back to diff page

|

||||||

if request.args.get("next") and request.args.get("next") == 'diff' and not form.save_and_preview_button.data:

|

if request.args.get("next") and request.args.get("next") == 'diff' and not form.save_and_preview_button.data:

|

||||||

@@ -641,7 +662,8 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

current_base_url=datastore.data['settings']['application']['base_url'],

|

current_base_url=datastore.data['settings']['application']['base_url'],

|

||||||

emailprefix=os.getenv('NOTIFICATION_MAIL_BUTTON_PREFIX', False),

|

emailprefix=os.getenv('NOTIFICATION_MAIL_BUTTON_PREFIX', False),

|

||||||

visualselector_data_is_ready=visualselector_data_is_ready,

|

visualselector_data_is_ready=visualselector_data_is_ready,

|

||||||

visualselector_enabled=visualselector_enabled

|

visualselector_enabled=visualselector_enabled,

|

||||||

|

playwright_enabled=os.getenv('PLAYWRIGHT_DRIVER_URL', False)

|

||||||

)

|

)

|

||||||

|

|

||||||

return output

|

return output

|

||||||

@@ -723,7 +745,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

importer = import_url_list()

|

importer = import_url_list()

|

||||||

importer.run(data=request.values.get('urls'), flash=flash, datastore=datastore)

|

importer.run(data=request.values.get('urls'), flash=flash, datastore=datastore)

|

||||||

for uuid in importer.new_uuids:

|

for uuid in importer.new_uuids:

|

||||||

update_q.put(uuid)

|

update_q.put((1, uuid))

|

||||||

|

|

||||||

if len(importer.remaining_data) == 0:

|

if len(importer.remaining_data) == 0:

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||

@@ -736,7 +758,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

d_importer = import_distill_io_json()

|

d_importer = import_distill_io_json()

|

||||||

d_importer.run(data=request.values.get('distill-io'), flash=flash, datastore=datastore)

|

d_importer.run(data=request.values.get('distill-io'), flash=flash, datastore=datastore)

|

||||||

for uuid in d_importer.new_uuids:

|

for uuid in d_importer.new_uuids:

|

||||||

update_q.put(uuid)

|

update_q.put((1, uuid))

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

@@ -748,15 +770,14 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

return output

|

return output

|

||||||

|

|

||||||

# Clear all statuses, so we do not see the 'unviewed' class

|

# Clear all statuses, so we do not see the 'unviewed' class

|

||||||

@app.route("/api/mark-all-viewed", methods=['GET'])

|

@app.route("/form/mark-all-viewed", methods=['GET'])

|

||||||

@login_required

|

@login_required

|

||||||

def mark_all_viewed():

|

def mark_all_viewed():

|

||||||

|

|

||||||

# Save the current newest history as the most recently viewed

|

# Save the current newest history as the most recently viewed

|

||||||

for watch_uuid, watch in datastore.data['watching'].items():

|

for watch_uuid, watch in datastore.data['watching'].items():

|

||||||

datastore.set_last_viewed(watch_uuid, watch['newest_history_key'])

|

datastore.set_last_viewed(watch_uuid, int(time.time()))

|

||||||

|

|

||||||

flash("Cleared all statuses.")

|

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

@app.route("/diff/<string:uuid>", methods=['GET'])

|

@app.route("/diff/<string:uuid>", methods=['GET'])

|

||||||

@@ -774,20 +795,17 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

flash("No history found for the specified link, bad link?", "error")

|

flash("No history found for the specified link, bad link?", "error")

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

dates = list(watch['history'].keys())

|

history = watch.history

|

||||||

# Convert to int, sort and back to str again

|

dates = list(history.keys())

|

||||||

# @todo replace datastore getter that does this automatically

|

|

||||||

dates = [int(i) for i in dates]

|

|

||||||

dates.sort(reverse=True)

|

|

||||||

dates = [str(i) for i in dates]

|

|

||||||

|

|

||||||

if len(dates) < 2:

|

if len(dates) < 2:

|

||||||

flash("Not enough saved change detection snapshots to produce a report.", "error")

|

flash("Not enough saved change detection snapshots to produce a report.", "error")

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

# Save the current newest history as the most recently viewed

|

# Save the current newest history as the most recently viewed

|

||||||

datastore.set_last_viewed(uuid, dates[0])

|

datastore.set_last_viewed(uuid, time.time())

|

||||||

newest_file = watch['history'][dates[0]]

|

|

||||||

|

newest_file = history[dates[-1]]

|

||||||

|

|

||||||

try:

|

try:

|

||||||

with open(newest_file, 'r') as f:

|

with open(newest_file, 'r') as f:

|

||||||

@@ -797,10 +815,10 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

previous_version = request.args.get('previous_version')

|

previous_version = request.args.get('previous_version')

|

||||||

try:

|

try:

|

||||||

previous_file = watch['history'][previous_version]

|

previous_file = history[previous_version]

|

||||||

except KeyError:

|

except KeyError:

|

||||||

# Not present, use a default value, the second one in the sorted list.

|

# Not present, use a default value, the second one in the sorted list.

|

||||||

previous_file = watch['history'][dates[1]]

|

previous_file = history[dates[-2]]

|

||||||

|

|

||||||

try:

|

try:

|

||||||

with open(previous_file, 'r') as f:

|

with open(previous_file, 'r') as f:

|

||||||

@@ -811,18 +829,25 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

screenshot_url = datastore.get_screenshot(uuid)

|

screenshot_url = datastore.get_screenshot(uuid)

|

||||||

|

|

||||||

output = render_template("diff.html", watch_a=watch,

|

system_uses_webdriver = datastore.data['settings']['application']['fetch_backend'] == 'html_webdriver'

|

||||||

|

|

||||||

|

is_html_webdriver = True if watch.get('fetch_backend') == 'html_webdriver' or (

|

||||||

|

watch.get('fetch_backend', None) is None and system_uses_webdriver) else False

|

||||||

|

|

||||||

|

output = render_template("diff.html",

|

||||||

|

watch_a=watch,

|

||||||

newest=newest_version_file_contents,

|

newest=newest_version_file_contents,

|

||||||

previous=previous_version_file_contents,

|

previous=previous_version_file_contents,

|

||||||

extra_stylesheets=extra_stylesheets,

|

extra_stylesheets=extra_stylesheets,

|

||||||

versions=dates[1:],

|

versions=dates[:-1], # All except current/last

|

||||||

uuid=uuid,

|

uuid=uuid,

|

||||||

newest_version_timestamp=dates[0],

|

newest_version_timestamp=dates[-1],

|

||||||

current_previous_version=str(previous_version),

|

current_previous_version=str(previous_version),

|

||||||

current_diff_url=watch['url'],

|

current_diff_url=watch['url'],

|

||||||

extra_title=" - Diff - {}".format(watch['title'] if watch['title'] else watch['url']),

|

extra_title=" - Diff - {}".format(watch['title'] if watch['title'] else watch['url']),

|

||||||

left_sticky=True,

|

left_sticky=True,

|

||||||

screenshot=screenshot_url)

|

screenshot=screenshot_url,

|

||||||

|

is_html_webdriver=is_html_webdriver)

|

||||||

|

|

||||||

return output

|

return output

|

||||||

|

|

||||||
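Review note: the new side of this hunk indexes from the end of `dates` (`dates[-1]` for the newest snapshot, `dates[-2]` for the default previous one, `dates[:-1]` for the version picker). That only holds if `watch.history` yields its keys oldest first, which this diff does not show. A minimal sketch of that assumption, using a plain dict with made-up timestamps and paths in place of the real `watch.history` property, and a hypothetical helper `pick_versions`:

```python
# Hypothetical stand-in for watch.history: timestamp string -> snapshot path,
# assumed to be ordered oldest first (the real property is not shown in this diff).
history = {
    "1650000000": "/tmp/a.txt",
    "1650003600": "/tmp/b.txt",
    "1650007200": "/tmp/c.txt",
}


def pick_versions(history, previous_version=None):
    """Return (newest_file, previous_file, selectable_versions)."""
    dates = list(history.keys())
    if len(dates) < 2:
        raise ValueError("need at least two snapshots to diff")
    newest_file = history[dates[-1]]
    # Fall back to the second-newest snapshot when the requested key is absent.
    previous_file = history.get(previous_version, history[dates[-2]])
    return newest_file, previous_file, dates[:-1]


newest, previous, versions = pick_versions(history)
```

If the keys could ever arrive unsorted, sorting them numerically before indexing (as the removed lines did) would be the safer choice.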

@@ -837,6 +862,12 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

if uuid == 'first':

|

if uuid == 'first':

|

||||||

uuid = list(datastore.data['watching'].keys()).pop()

|

uuid = list(datastore.data['watching'].keys()).pop()

|

||||||

|

|

||||||

|

# Normally you would never reach this, because the 'preview' button is not available when there's no history

|

||||||

|

# However they may try to clear snapshots and reload the page

|

||||||

|

if datastore.data['watching'][uuid].history_n == 0:

|

||||||

|

flash("Preview unavailable - No fetch/check completed or triggers not reached", "error")

|

||||||

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

extra_stylesheets = [url_for('static_content', group='styles', filename='diff.css')]

|

extra_stylesheets = [url_for('static_content', group='styles', filename='diff.css')]

|

||||||

|

|

||||||

try:

|

try:

|

||||||

@@ -845,9 +876,9 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

flash("No history found for the specified link, bad link?", "error")

|

flash("No history found for the specified link, bad link?", "error")

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

if len(watch['history']):

|

|

||||||

timestamps = sorted(watch['history'].keys(), key=lambda x: int(x))

|

timestamp = list(watch.history.keys())[-1]

|

||||||

filename = watch['history'][timestamps[-1]]

|

filename = watch.history[timestamp]

|

||||||

try:

|

try:

|

||||||

with open(filename, 'r') as f:

|

with open(filename, 'r') as f:

|

||||||

tmp = f.readlines()

|

tmp = f.readlines()

|

||||||

@@ -876,13 +907,16 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

classes.append('triggered')

|

classes.append('triggered')

|

||||||

content.append({'line': l, 'classes': ' '.join(classes)})

|

content.append({'line': l, 'classes': ' '.join(classes)})

|

||||||

|

|

||||||

|

|

||||||

except Exception as e:

|

except Exception as e:

|

||||||

content.append({'line': "File doesnt exist or unable to read file {}".format(filename), 'classes': ''})

|

content.append({'line': "File doesnt exist or unable to read file {}".format(filename), 'classes': ''})

|

||||||

else:

|

|

||||||

content.append({'line': "No history found", 'classes': ''})

|

|

||||||

|

|

||||||

screenshot_url = datastore.get_screenshot(uuid)

|

screenshot_url = datastore.get_screenshot(uuid)

|

||||||

|

system_uses_webdriver = datastore.data['settings']['application']['fetch_backend'] == 'html_webdriver'

|

||||||

|

|

||||||

|

is_html_webdriver = True if watch.get('fetch_backend') == 'html_webdriver' or (

|

||||||

|

watch.get('fetch_backend', None) is None and system_uses_webdriver) else False

|

||||||

|

|

||||||

output = render_template("preview.html",

|

output = render_template("preview.html",

|

||||||

content=content,

|

content=content,

|

||||||

extra_stylesheets=extra_stylesheets,

|

extra_stylesheets=extra_stylesheets,

|

||||||

@@ -891,7 +925,8 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

current_diff_url=watch['url'],

|

current_diff_url=watch['url'],

|

||||||

screenshot=screenshot_url,

|

screenshot=screenshot_url,

|

||||||

watch=watch,

|

watch=watch,

|

||||||

uuid=uuid)

|

uuid=uuid,

|

||||||

|

is_html_webdriver=is_html_webdriver)

|

||||||

|

|

||||||

return output

|

return output

|

||||||

|

|

||||||
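Review note: the `is_html_webdriver` expression is added twice in this compare (once for the diff view, once for the preview view), and the `True if ... else False` wrapper is redundant because the condition is already a boolean. One way to express the same rule once is a small helper; `uses_webdriver` is a hypothetical name, not something in the codebase, and a plain dict stands in for the watch object:

```python
def uses_webdriver(watch, system_fetch_backend):
    """True when the watch, or the system default it falls back to, uses html_webdriver."""
    backend = watch.get('fetch_backend')
    if backend is None:
        # No per-watch setting: follow the application-wide fetch backend.
        backend = system_fetch_backend
    return backend == 'html_webdriver'


# Plain dicts standing in for watch objects.
explicit = uses_webdriver({'fetch_backend': 'html_webdriver'}, 'html_requests')
inherited = uses_webdriver({}, 'html_webdriver')
overridden = uses_webdriver({'fetch_backend': 'html_requests'}, 'html_webdriver')
```

Both views could then pass `is_html_webdriver=uses_webdriver(watch, datastore.data['settings']['application']['fetch_backend'])`.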

@@ -900,7 +935,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

def notification_logs():

|

def notification_logs():

|

||||||

global notification_debug_log

|

global notification_debug_log

|

||||||

output = render_template("notification-log.html",

|

output = render_template("notification-log.html",

|

||||||

logs=notification_debug_log if len(notification_debug_log) else ["No errors or warnings detected"])

|

logs=notification_debug_log if len(notification_debug_log) else ["Notification logs are empty - no notifications sent yet."])

|

||||||

|

|

||||||

return output

|

return output

|

||||||

|

|

||||||

@@ -1031,9 +1066,9 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

except FileNotFoundError:

|

except FileNotFoundError:

|

||||||

abort(404)

|

abort(404)

|

||||||

|

|

||||||

@app.route("/api/add", methods=['POST'])

|

@app.route("/form/add/quickwatch", methods=['POST'])

|

||||||

@login_required

|

@login_required

|

||||||

def form_watch_add():

|

def form_quick_watch_add():

|

||||||

from changedetectionio import forms

|

from changedetectionio import forms

|

||||||

form = forms.quickWatchForm(request.form)

|

form = forms.quickWatchForm(request.form)

|

||||||

|

|

||||||

@@ -1046,13 +1081,19 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

flash('The URL {} already exists'.format(url), "error")

|

flash('The URL {} already exists'.format(url), "error")

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

# @todo add_watch should throw a custom Exception for validation etc

|

add_paused = request.form.get('edit_and_watch_submit_button') != None

|

||||||

new_uuid = datastore.add_watch(url=url, tag=request.form.get('tag').strip())

|

new_uuid = datastore.add_watch(url=url, tag=request.form.get('tag').strip(), extras={'paused': add_paused})

|

||||||

if new_uuid:

|

|

||||||

|

|

||||||

|

if not add_paused and new_uuid:

|

||||||

# Straight into the queue.

|

# Straight into the queue.

|

||||||

update_q.put(new_uuid)

|

update_q.put((1, new_uuid))

|

||||||

flash("Watch added.")

|

flash("Watch added.")

|

||||||

|

|

||||||

|

if add_paused:

|

||||||

|

flash('Watch added in Paused state, saving will unpause.')

|

||||||

|

return redirect(url_for('edit_page', uuid=new_uuid, unpause_on_save=1))

|

||||||

|

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||

|

|

||||||

|

|

||||||

@@ -1083,7 +1124,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

uuid = list(datastore.data['watching'].keys()).pop()

|

uuid = list(datastore.data['watching'].keys()).pop()

|

||||||

|

|

||||||

new_uuid = datastore.clone(uuid)

|

new_uuid = datastore.clone(uuid)

|

||||||

update_q.put(new_uuid)

|

update_q.put((5, new_uuid))

|

||||||

flash('Cloned.')

|

flash('Cloned.')

|

||||||

|

|

||||||

return redirect(url_for('index'))

|

return redirect(url_for('index'))

|

||||||
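Review note: throughout this compare the `update_q.put(uuid)` calls become `update_q.put((priority, uuid))`, with `1` for edits, imports, quick-adds and manual rechecks and `5` for clones. The construction of `update_q` is not part of these hunks; assuming it is a standard `queue.PriorityQueue`, lower numbers are served first and ties fall back to comparing the UUID strings. A small sketch with made-up UUIDs:

```python
import queue

# Assumption: update_q is a queue.PriorityQueue (its construction is not shown here).
update_q = queue.PriorityQueue()

update_q.put((5, "uuid-cloned"))   # clone: lower urgency
update_q.put((1, "uuid-edited"))   # edit/save: recheck soon
update_q.put((1, "uuid-added"))    # quick-add: recheck soon

order = []
while not update_q.empty():
    priority, uuid = update_q.get()
    order.append((priority, uuid))
```

Any consumer of the queue has to unpack the tuple as well; a worker that still expects a bare UUID string would break.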

@@ -1104,7 +1145,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

if uuid:

|

if uuid:

|

||||||

if uuid not in running_uuids:

|

if uuid not in running_uuids:

|

||||||

update_q.put(uuid)

|

update_q.put((1, uuid))

|

||||||

i = 1

|

i = 1

|

||||||

|

|

||||||

elif tag != None:

|

elif tag != None:

|

||||||

@@ -1112,7 +1153,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

for watch_uuid, watch in datastore.data['watching'].items():

|

for watch_uuid, watch in datastore.data['watching'].items():

|

||||||

if (tag != None and tag in watch['tag']):

|

if (tag != None and tag in watch['tag']):

|

||||||

if watch_uuid not in running_uuids and not datastore.data['watching'][watch_uuid]['paused']:

|

if watch_uuid not in running_uuids and not datastore.data['watching'][watch_uuid]['paused']:

|

||||||

update_q.put(watch_uuid)

|

update_q.put((1, watch_uuid))

|

||||||

i += 1

|

i += 1

|

||||||

|

|

||||||

else:

|

else:

|

||||||

@@ -1120,7 +1161,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

for watch_uuid, watch in datastore.data['watching'].items():

|

for watch_uuid, watch in datastore.data['watching'].items():

|

||||||

|

|

||||||

if watch_uuid not in running_uuids and not datastore.data['watching'][watch_uuid]['paused']:

|

if watch_uuid not in running_uuids and not datastore.data['watching'][watch_uuid]['paused']:

|

||||||

update_q.put(watch_uuid)

|

update_q.put((1, watch_uuid))

|

||||||

i += 1

|

i += 1

|

||||||

flash("{} watches are queued for rechecking.".format(i))

|

flash("{} watches are queued for rechecking.".format(i))

|

||||||

return redirect(url_for('index', tag=tag))

|

return redirect(url_for('index', tag=tag))

|

||||||

@@ -1141,6 +1182,7 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

# copy it to memory as trim off what we dont need (history)

|

# copy it to memory as trim off what we dont need (history)

|

||||||

watch = deepcopy(datastore.data['watching'][uuid])

|

watch = deepcopy(datastore.data['watching'][uuid])

|

||||||

|

# For older versions that are not a @property

|

||||||

if (watch.get('history')):

|

if (watch.get('history')):

|

||||||

del (watch['history'])

|

del (watch['history'])

|

||||||

|

|

||||||

@@ -1170,7 +1212,8 @@ def changedetection_app(config=None, datastore_o=None):

|

|||||||

|

|

||||||

|

|

||||||

except Exception as e:

|

except Exception as e:

|

||||||

flash("Could not share, something went wrong while communicating with the share server.", 'error')

|

logging.error("Error sharing -{}".format(str(e)))

|

||||||

|

flash("Could not share, something went wrong while communicating with the share server - {}".format(str(e)), 'error')

|

||||||

|

|

||||||

# https://changedetection.io/share/VrMv05wpXyQa

|

# https://changedetection.io/share/VrMv05wpXyQa

|

||||||

# in the browser - should give you a nice info page - wtf

|

# in the browser - should give you a nice info page - wtf

|

||||||

@@ -1218,6 +1261,8 @@ def check_for_new_version():

|

|||||||

|

|

||||||

def notification_runner():

|

def notification_runner():

|

||||||

global notification_debug_log

|

global notification_debug_log

|

||||||

|

from datetime import datetime

|

||||||

|

import json

|

||||||

while not app.config.exit.is_set():

|

while not app.config.exit.is_set():

|

||||||

try:

|

try:

|

||||||

# At the moment only one thread runs (single runner)

|

# At the moment only one thread runs (single runner)

|

||||||

@@ -1226,13 +1271,17 @@ def notification_runner():

|

|||||||

time.sleep(1)

|

time.sleep(1)

|

||||||

|

|

||||||

else:

|

else:

|

||||||

# Process notifications

|

|

||||||

|

now = datetime.now()

|

||||||

|

sent_obj = None

|

||||||

|

|

||||||

try:

|

try:

|

||||||

from changedetectionio import notification

|

from changedetectionio import notification

|

||||||

notification.process_notification(n_object, datastore)

|

|

||||||

|

sent_obj = notification.process_notification(n_object, datastore)

|

||||||

|

|

||||||

except Exception as e:

|

except Exception as e:

|

||||||

print("Watch URL: {} Error {}".format(n_object['watch_url'], str(e)))

|

logging.error("Watch URL: {} Error {}".format(n_object['watch_url'], str(e)))

|

||||||

|

|

||||||

# UUID wont be present when we submit a 'test' from the global settings

|

# UUID wont be present when we submit a 'test' from the global settings

|

||||||

if 'uuid' in n_object:

|

if 'uuid' in n_object:

|

||||||

@@ -1242,14 +1291,19 @@ def notification_runner():

|

|||||||

log_lines = str(e).splitlines()

|

log_lines = str(e).splitlines()

|

||||||

notification_debug_log += log_lines

|

notification_debug_log += log_lines

|

||||||

|

|

||||||

|

# Process notifications

|

||||||

|

notification_debug_log+= ["{} - SENDING - {}".format(now.strftime("%Y/%m/%d %H:%M:%S,000"), json.dumps(sent_obj))]

|

||||||

# Trim the log length

|

# Trim the log length

|

||||||

notification_debug_log = notification_debug_log[-100:]

|

notification_debug_log = notification_debug_log[-100:]

|

||||||

|

|

||||||

|

|

||||||

# Thread runner to check every minute, look for new watches to feed into the Queue.

|

# Thread runner to check every minute, look for new watches to feed into the Queue.

|

||||||

def ticker_thread_check_time_launch_checks():

|

def ticker_thread_check_time_launch_checks():

|

||||||

|

import random

|

||||||

from changedetectionio import update_worker

|

from changedetectionio import update_worker

|

||||||

|

|

||||||

|

recheck_time_minimum_seconds = int(os.getenv('MINIMUM_SECONDS_RECHECK_TIME', 20))

|

||||||

|

print("System env MINIMUM_SECONDS_RECHECK_TIME", recheck_time_minimum_seconds)

|

||||||

|

|

||||||

# Spin up Workers that do the fetching

|

# Spin up Workers that do the fetching

|

||||||

# Can be overriden by ENV or use the default settings

|

# Can be overriden by ENV or use the default settings

|

||||||

n_workers = int(os.getenv("FETCH_WORKERS", datastore.data['settings']['requests']['workers']))

|

n_workers = int(os.getenv("FETCH_WORKERS", datastore.data['settings']['requests']['workers']))

|

||||||

@@ -1267,9 +1321,10 @@ def ticker_thread_check_time_launch_checks():

|

|||||||

running_uuids.append(t.current_uuid)

|

running_uuids.append(t.current_uuid)

|

||||||

|

|

||||||

# Re #232 - Deepcopy the data incase it changes while we're iterating through it all

|

# Re #232 - Deepcopy the data incase it changes while we're iterating through it all

|

||||||

|

watch_uuid_list = []

|

||||||

while True:

|

while True:

|

||||||

try:

|

try:

|

||||||

copied_datastore = deepcopy(datastore)

|

watch_uuid_list = datastore.data['watching'].keys()

|

||||||

except RuntimeError as e:

|

except RuntimeError as e:

|

||||||

# RuntimeError: dictionary changed size during iteration

|

# RuntimeError: dictionary changed size during iteration

|

||||||

time.sleep(0.1)

|

time.sleep(0.1)

|

||||||
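Review note: on the new side, `deepcopy(datastore)` is replaced by `datastore.data['watching'].keys()`. In Python, `dict.keys()` returns a live view rather than a copy, and calling it does not iterate the dict, so the surrounding `except RuntimeError` may never fire at that line. If another thread adds or removes a watch, the error would more likely surface in the later `for uuid in watch_uuid_list` loop. A minimal sketch of the difference, with a plain dict standing in for `datastore.data['watching']`:

```python
watching = {"a": {}, "b": {}}

live_view = watching.keys()        # no copy; reflects later changes
snapshot = list(watching.keys())   # independent copy, safe to iterate later

watching["c"] = {}                 # simulate a watch added elsewhere


def iterate_while_mutating(d):
    """Show the error raised when a dict grows during iteration over its keys."""
    try:
        for key in d.keys():
            d[key + "-new"] = {}
    except RuntimeError as e:
        return str(e)
    return None


error = iterate_while_mutating({"x": {}})
```

Wrapping the call as `list(datastore.data['watching'].keys())` would give the loop a stable snapshot, and the existing `watch = datastore.data['watching'].get(uuid)` check already covers watches deleted in the meantime.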

@@ -1280,33 +1335,49 @@ def ticker_thread_check_time_launch_checks():

|

|||||||

while update_q.qsize() >= 2000:

|

while update_q.qsize() >= 2000:

|

||||||

time.sleep(1)

|

time.sleep(1)

|

||||||

|

|

||||||

|

|

||||||

|

recheck_time_system_seconds = int(datastore.threshold_seconds)

|

||||||

|

|

||||||

# Check for watches outside of the time threshold to put in the thread queue.

|

# Check for watches outside of the time threshold to put in the thread queue.

|

||||||

|

for uuid in watch_uuid_list:

|

||||||

now = time.time()

|

now = time.time()

|

||||||

|

watch = datastore.data['watching'].get(uuid)

|

||||||

recheck_time_minimum_seconds = int(os.getenv('MINIMUM_SECONDS_RECHECK_TIME', 60))

|

if not watch:

|

||||||

recheck_time_system_seconds = datastore.threshold_seconds

|

logging.error("Watch: {} no longer present.".format(uuid))

|

||||||

|

continue

|

||||||

for uuid, watch in copied_datastore.data['watching'].items():

|

|

||||||

|

|

||||||

# No need todo further processing if it's paused

|

# No need todo further processing if it's paused

|

||||||

if watch['paused']:

|

if watch['paused']:

|

||||||

continue

|

continue

|

||||||

|

|

||||||

# If they supplied an individual entry minutes to threshold.

|

# If they supplied an individual entry minutes to threshold.

|

||||||

threshold = now

|

|

||||||

watch_threshold_seconds = watch.threshold_seconds()

|

watch_threshold_seconds = watch.threshold_seconds()

|

||||||

if watch_threshold_seconds:

|

threshold = watch_threshold_seconds if watch_threshold_seconds > 0 else recheck_time_system_seconds

|

||||||

threshold -= watch_threshold_seconds

|

|

||||||

else:

|

|

||||||

threshold -= recheck_time_system_seconds

|

|

||||||

|

|

||||||

# Yeah, put it in the queue, it's more than time

|

# #580 - Jitter plus/minus amount of time to make the check seem more random to the server

|

||||||