Compare commits: `improve-lo` ... `API-interf` (12 commits)

| SHA1 |
|---|
| fba4b6747e |
| 4cf2d9d7aa |
| 6bc1c681ec |
| 1267512858 |
| 886ef0c7c1 |
| 97c6db5e56 |
| 23dde28399 |
| 91fe2dd420 |
| 408c8878f3 |
| 37614224e5 |
| 1caff23d2c |
| b01ee24d55 |

`.github/ISSUE_TEMPLATE/bug_report.md` (vendored, 11 changes)

    @@ -1,9 +1,9 @@
     ---
     name: Bug report
    -about: Create a bug report, if you don't follow this template, your report will be DELETED
    +about: Create a report to help us improve
     title: ''
    -labels: 'triage'
    -assignees: 'dgtlmoon'
    +labels: ''
    +assignees: ''
     
     ---
     

    @@ -11,18 +11,15 @@ assignees: 'dgtlmoon'
     A clear and concise description of what the bug is.
     
     **Version**
    -*Exact version* in the top right area: 0....
    +In the top right area: 0....
     
     **To Reproduce**
    -
     Steps to reproduce the behavior:
     1. Go to '...'
     2. Click on '....'
     3. Scroll down to '....'
     4. See error
     
    -! ALWAYS INCLUDE AN EXAMPLE URL WHERE IT IS POSSIBLE TO RE-CREATE THE ISSUE !
    -
     **Expected behavior**
     A clear and concise description of what you expected to happen.
     

`.github/ISSUE_TEMPLATE/feature_request.md` (vendored, 4 changes)

    @@ -1,8 +1,8 @@
     ---
     name: Feature request
     about: Suggest an idea for this project
    -title: '[feature]'
    -labels: 'enhancement'
    +title: ''
    +labels: ''
     assignees: ''
     
     ---

`.gitignore` (vendored, 1 change)

    @@ -8,6 +8,5 @@ __pycache__
     build
     dist
     venv
    -test-datastore
     *.egg-info*
     .vscode/settings.json

    @@ -1,4 +1,3 @@
    -recursive-include changedetectionio/api *
     recursive-include changedetectionio/templates *
     recursive-include changedetectionio/static *
     recursive-include changedetectionio/model *

`README.md` (21 changes)

    @@ -12,7 +12,7 @@ Live your data-life *pro-actively* instead of *re-actively*.
     Free, Open-source web page monitoring, notification and change detection. Don't have time? [**Try our $6.99/month subscription - unlimited checks and watches!**](https://lemonade.changedetection.io/start)
     
     
    -[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start)
    +[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start)
     
     
     **Get your own private instance now! Let us host it for you!**

    @@ -48,19 +48,12 @@ _Need an actual Chrome runner with Javascript support? We support fetching via W
     
     ## Screenshots
     
    -### Examine differences in content.
    +Examining differences in content.
     
    -Easily see what changed, examine by word, line, or individual character.
    +<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot-diff.png" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />
     
    -<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot-diff.png" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />
     
     Please :star: star :star: this project and help it grow! https://github.com/dgtlmoon/changedetection.io/
    -
    -### Target elements with the Visual Selector tool.
    -
    -Available when connected to a <a href="https://github.com/dgtlmoon/changedetection.io/wiki/Playwright-content-fetcher">playwright content fetcher</a> (available also as part of our subscription service)
    -
    -<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/visualselector-anim.gif" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />
     
     ## Installation
     

    @@ -136,7 +129,7 @@ Just some examples
     
     <a href="https://github.com/caronc/apprise#popular-notification-services">And everything else in this list!</a>
     
    -<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot-notifications.png" style="max-width:100%;" alt="Self-hosted web page change monitoring notifications" title="Self-hosted web page change monitoring notifications" />
    +<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot-notifications.png" style="max-width:100%;" alt="Self-hosted web page change monitoring notifications" title="Self-hosted web page change monitoring notifications" />
     
     Now you can also customise your notification content!
     

@@ -144,11 +137,11 @@ Now you can also customise your notification content!

|

|||||||

|

|

||||||

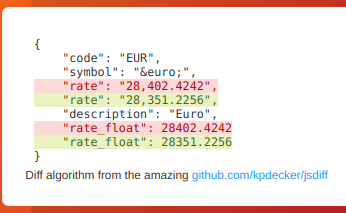

Detect changes and monitor data in JSON API's by using the built-in JSONPath selectors as a filter / selector.

|

Detect changes and monitor data in JSON API's by using the built-in JSONPath selectors as a filter / selector.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

This will re-parse the JSON and apply formatting to the text, making it super easy to monitor and detect changes in JSON API results

|

This will re-parse the JSON and apply formatting to the text, making it super easy to monitor and detect changes in JSON API results

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### Parse JSON embedded in HTML!

|

### Parse JSON embedded in HTML!

|

||||||

|

|

||||||
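The README lines in this hunk say that JSON responses are re-parsed and re-formatted before they are compared. The sketch below shows why that helps. It assumes a plain `json.loads`/`json.dumps` round trip with sorted keys and a fixed indent. The project's actual formatting options are not shown in this diff, and the helper names are hypothetical. With this normalization, two responses that differ only in key order or whitespace produce the same text, so no spurious change is reported.

```python
import json


def normalize_json(raw: str) -> str:
    """Re-parse a JSON document and pretty-print it in a canonical form.

    Assumption: sorted keys and a 4-space indent; the real project may
    choose different formatting options.
    """
    return json.dumps(json.loads(raw), indent=4, sort_keys=True, ensure_ascii=False)


def has_changed(previous_raw: str, current_raw: str) -> bool:
    # Compare the canonical text, not the raw bytes returned by the server.
    return normalize_json(previous_raw) != normalize_json(current_raw)


if __name__ == "__main__":
    a = '{"price": 10, "name": "widget"}'
    b = '{\n  "name": "widget",\n  "price": 10\n}'
    c = '{"name": "widget", "price": 12}'
    print(has_changed(a, b))  # only key order and whitespace differ
    print(has_changed(a, c))  # the price changed
```

Sorting the keys matters most here: many APIs do not guarantee object key order between requests.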

    @@ -184,7 +177,7 @@ Or directly donate an amount PayPal [:
     global datastore
     datastore = datastore_o
     
    -# so far just for read-only via tests, but this will be moved eventually to be the main source
    -# (instead of the global var)
    -app.config['DATASTORE']=datastore_o
    -
     #app.config.update(config or {})
     
     login_manager = flask_login.LoginManager(app)

    @@ -322,19 +317,25 @@ def changedetection_app(config=None, datastore_o=None):
     
     for watch in sorted_watches:
     
    -dates = list(watch.history.keys())
    +dates = list(watch['history'].keys())
     # Re #521 - Don't bother processing this one if theres less than 2 snapshots, means we never had a change detected.
     if len(dates) < 2:
     continue
     
    -prev_fname = watch.history[dates[-2]]
    +# Convert to int, sort and back to str again
    +# @todo replace datastore getter that does this automatically
    +dates = [int(i) for i in dates]
    +dates.sort(reverse=True)
    +dates = [str(i) for i in dates]
    +prev_fname = watch['history'][dates[1]]
     
    -if not watch.viewed:
    +if not watch['viewed']:
     # Re #239 - GUID needs to be individual for each event
     # @todo In the future make this a configurable link back (see work on BASE_URL https://github.com/dgtlmoon/changedetection.io/pull/228)
     guid = "{}/{}".format(watch['uuid'], watch['last_changed'])
     fe = fg.add_entry()
     
    +
     # Include a link to the diff page, they will have to login here to see if password protection is enabled.
     # Description is the page you watch, link takes you to the diff JS UI page
     base_url = datastore.data['settings']['application']['base_url']

    @@ -349,15 +350,13 @@ def changedetection_app(config=None, datastore_o=None):
     
     watch_title = watch.get('title') if watch.get('title') else watch.get('url')
     fe.title(title=watch_title)
    -latest_fname = watch.history[dates[-1]]
    +latest_fname = watch['history'][dates[0]]
     
     html_diff = diff.render_diff(prev_fname, latest_fname, include_equal=False, line_feed_sep="</br>")
    -fe.description(description="<![CDATA["
    -"<html><body><h4>{}</h4>{}</body></html>"
    -"]]>".format(watch_title, html_diff))
    +fe.description(description="<![CDATA[<html><body><h4>{}</h4>{}</body></html>".format(watch_title, html_diff))
     
     fe.guid(guid, permalink=False)
    -dt = datetime.datetime.fromtimestamp(int(watch.newest_history_key))
    +dt = datetime.datetime.fromtimestamp(int(watch['newest_history_key']))
     dt = dt.replace(tzinfo=pytz.UTC)
     fe.pubDate(dt)
     
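The two sides of this hunk differ in how they wrap the feed entry description:

- The base side opens the section with `<![CDATA[` and closes it with `]]>`. Adjacent string literals are joined before `.format` runs.
- The head side's single-line version opens the section but never emits the closing `]]>`.

The sketch below builds the wrapped string itself, assuming the same `<h4>` title plus HTML-diff layout. The helper name `cdata_description` is hypothetical. It also handles one edge case: a literal `]]>` inside the content would end the section early, so it is split across two CDATA sections.

```python
def cdata_description(title: str, html_diff: str) -> str:
    """Wrap an HTML snippet for a feed <description> in a CDATA section.

    Any literal "]]>" in the body is split across two CDATA sections so
    it cannot terminate the section early.
    """
    body = "<html><body><h4>{}</h4>{}</body></html>".format(title, html_diff)
    body = body.replace("]]>", "]]]]><![CDATA[>")
    return "<![CDATA[" + body + "]]>"


if __name__ == "__main__":
    print(cdata_description("Example", "<p>changed</p>"))
```

How the surrounding feed library serializes this string (escaped or raw) is outside the scope of this sketch.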

    @@ -416,13 +415,11 @@ def changedetection_app(config=None, datastore_o=None):
     tags=existing_tags,
     active_tag=limit_tag,
     app_rss_token=datastore.data['settings']['application']['rss_access_token'],
    -has_unviewed=datastore.has_unviewed,
    +has_unviewed=datastore.data['has_unviewed'],
     # Don't link to hosting when we're on the hosting environment
     hosted_sticky=os.getenv("SALTED_PASS", False) == False,
     guid=datastore.data['app_guid'],
     queued_uuids=update_q.queue)
    -
    -
     if session.get('share-link'):
     del(session['share-link'])
     return output

    @@ -494,10 +491,10 @@ def changedetection_app(config=None, datastore_o=None):
     
     # 0 means that theres only one, so that there should be no 'unviewed' history available
     if newest_history_key == 0:
    -newest_history_key = list(datastore.data['watching'][uuid].history.keys())[0]
    +newest_history_key = list(datastore.data['watching'][uuid]['history'].keys())[0]
     
     if newest_history_key:
    -with open(datastore.data['watching'][uuid].history[newest_history_key],
    +with open(datastore.data['watching'][uuid]['history'][newest_history_key],
     encoding='utf-8') as file:
     raw_content = file.read()
     

    @@ -591,12 +588,12 @@ def changedetection_app(config=None, datastore_o=None):
     
     # Reset the previous_md5 so we process a new snapshot including stripping ignore text.
     if form_ignore_text:
    -if len(datastore.data['watching'][uuid].history):
    +if len(datastore.data['watching'][uuid]['history']):
     extra_update_obj['previous_md5'] = get_current_checksum_include_ignore_text(uuid=uuid)
     
     # Reset the previous_md5 so we process a new snapshot including stripping ignore text.
     if form.css_filter.data.strip() != datastore.data['watching'][uuid]['css_filter']:
    -if len(datastore.data['watching'][uuid].history):
    +if len(datastore.data['watching'][uuid]['history']):
     extra_update_obj['previous_md5'] = get_current_checksum_include_ignore_text(uuid=uuid)
     
     # Be sure proxy value is None

    @@ -629,12 +626,6 @@ def changedetection_app(config=None, datastore_o=None):
     if request.method == 'POST' and not form.validate():
     flash("An error occurred, please see below.", "error")
     
    -visualselector_data_is_ready = datastore.visualselector_data_is_ready(uuid)
    -
    -# Only works reliably with Playwright
    -visualselector_enabled = os.getenv('PLAYWRIGHT_DRIVER_URL', False) and default['fetch_backend'] == 'html_webdriver'
    -
    -
     output = render_template("edit.html",
     uuid=uuid,
     watch=datastore.data['watching'][uuid],

    @@ -642,9 +633,7 @@ def changedetection_app(config=None, datastore_o=None):
     has_empty_checktime=using_default_check_time,
     using_global_webdriver_wait=default['webdriver_delay'] is None,
     current_base_url=datastore.data['settings']['application']['base_url'],
    -emailprefix=os.getenv('NOTIFICATION_MAIL_BUTTON_PREFIX', False),
    -visualselector_data_is_ready=visualselector_data_is_ready,
    -visualselector_enabled=visualselector_enabled
    +emailprefix=os.getenv('NOTIFICATION_MAIL_BUTTON_PREFIX', False)
     )
     
     return output

    @@ -751,14 +740,15 @@ def changedetection_app(config=None, datastore_o=None):
     return output
     
     # Clear all statuses, so we do not see the 'unviewed' class
    -@app.route("/form/mark-all-viewed", methods=['GET'])
    +@app.route("/api/mark-all-viewed", methods=['GET'])
     @login_required
     def mark_all_viewed():
     
     # Save the current newest history as the most recently viewed
     for watch_uuid, watch in datastore.data['watching'].items():
    -datastore.set_last_viewed(watch_uuid, int(time.time()))
    +datastore.set_last_viewed(watch_uuid, watch['newest_history_key'])
     
    +flash("Cleared all statuses.")
     return redirect(url_for('index'))
     
     @app.route("/diff/<string:uuid>", methods=['GET'])
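This hunk changes the value that `mark_all_viewed` stores. The base side records the current wall-clock time, `int(time.time())`. The head side records each watch's `newest_history_key`.

The sketch below shows how such a value could drive an "unviewed" flag. The comparison rule is not part of this diff and is a hypothetical assumption: a watch counts as unviewed while its newest snapshot timestamp is later than its last-viewed value. `WatchState` is an illustrative stand-in, not the project's model.

```python
import time
from dataclasses import dataclass


@dataclass
class WatchState:
    # Hypothetical stand-in for a watch record; both fields are Unix timestamps.
    newest_history_key: int
    last_viewed: int = 0

    @property
    def viewed(self) -> bool:
        # Assumption: "viewed" means no snapshot is newer than the last view.
        return self.newest_history_key <= self.last_viewed


def mark_all_viewed(watches, use_wall_clock: bool = False) -> None:
    for w in watches:
        # Record either "now" (as the base side does) or the newest
        # snapshot key (as the head side does).
        w.last_viewed = int(time.time()) if use_wall_clock else w.newest_history_key
```

Both choices clear the flag. Storing the snapshot key ties "viewed" to a specific snapshot rather than to the moment the button was pressed.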

    @@ -776,17 +766,20 @@ def changedetection_app(config=None, datastore_o=None):
     flash("No history found for the specified link, bad link?", "error")
     return redirect(url_for('index'))
     
    -history = watch.history
    -dates = list(history.keys())
    +dates = list(watch['history'].keys())
    +# Convert to int, sort and back to str again
    +# @todo replace datastore getter that does this automatically
    +dates = [int(i) for i in dates]
    +dates.sort(reverse=True)
    +dates = [str(i) for i in dates]
     
     if len(dates) < 2:
     flash("Not enough saved change detection snapshots to produce a report.", "error")
     return redirect(url_for('index'))
     
     # Save the current newest history as the most recently viewed
    -datastore.set_last_viewed(uuid, time.time())
    -
    -newest_file = history[dates[-1]]
    +datastore.set_last_viewed(uuid, dates[0])
    +newest_file = watch['history'][dates[0]]
     
     try:
     with open(newest_file, 'r') as f:
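Here, and in the RSS hunk earlier, the head side sorts the string timestamp keys numerically (`int`, sort descending, back to `str`). As a result, `dates[0]` is the newest snapshot and `dates[1]` the previous one. The base side indexes `dates[-1]`/`dates[-2]` from the `watch.history` property, which presumably already returns keys in ascending order.

The sketch below shows why the numeric conversion matters. It uses a hypothetical `history` dict that maps Unix-timestamp strings to snapshot paths, and `newest_two` is an illustrative helper.

```python
def newest_two(history: dict) -> tuple:
    """Return (newest_key, previous_key) from a {timestamp_str: path} mapping.

    Keys are compared as integers: as plain strings, "999" would sort after
    "1000" because strings compare character by character.
    """
    if len(history) < 2:
        raise ValueError("need at least two snapshots to compare")
    dates = sorted(history.keys(), key=int, reverse=True)
    return dates[0], dates[1]


if __name__ == "__main__":
    history = {"999": "a.txt", "1000": "b.txt", "1001": "c.txt"}  # hypothetical data
    print(sorted(history))      # ['1000', '1001', '999'] (string order)
    print(newest_two(history))  # ('1001', '1000')
```

Passing `key=int` to `sorted` does the same work as the int/sort/str round trip in the diff, without rebuilding the list twice.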

    @@ -796,10 +789,10 @@ def changedetection_app(config=None, datastore_o=None):
     
     previous_version = request.args.get('previous_version')
     try:
    -previous_file = history[previous_version]
    +previous_file = watch['history'][previous_version]
     except KeyError:
     # Not present, use a default value, the second one in the sorted list.
    -previous_file = history[dates[-2]]
    +previous_file = watch['history'][dates[1]]
     
     try:
     with open(previous_file, 'r') as f:

    @@ -816,7 +809,7 @@ def changedetection_app(config=None, datastore_o=None):
     extra_stylesheets=extra_stylesheets,
     versions=dates[1:],
     uuid=uuid,
    -newest_version_timestamp=dates[-1],
    +newest_version_timestamp=dates[0],
     current_previous_version=str(previous_version),
     current_diff_url=watch['url'],
     extra_title=" - Diff - {}".format(watch['title'] if watch['title'] else watch['url']),

    @@ -844,9 +837,9 @@ def changedetection_app(config=None, datastore_o=None):
     flash("No history found for the specified link, bad link?", "error")
     return redirect(url_for('index'))
     
    -if watch.history_n >0:
    -timestamps = sorted(watch.history.keys(), key=lambda x: int(x))
    -filename = watch.history[timestamps[-1]]
    +if len(watch['history']):
    +timestamps = sorted(watch['history'].keys(), key=lambda x: int(x))
    +filename = watch['history'][timestamps[-1]]
     try:
     with open(filename, 'r') as f:
     tmp = f.readlines()

    @@ -983,9 +976,10 @@ def changedetection_app(config=None, datastore_o=None):
     
     @app.route("/static/<string:group>/<string:filename>", methods=['GET'])
     def static_content(group, filename):
    -from flask import make_response
    -
     if group == 'screenshot':
    +
    +from flask import make_response
    +
     # Could be sensitive, follow password requirements
     if datastore.data['settings']['application']['password'] and not flask_login.current_user.is_authenticated:
     abort(403)

    @@ -1004,26 +998,6 @@ def changedetection_app(config=None, datastore_o=None):
     except FileNotFoundError:
     abort(404)
     
    -
    -if group == 'visual_selector_data':
    -# Could be sensitive, follow password requirements
    -if datastore.data['settings']['application']['password'] and not flask_login.current_user.is_authenticated:
    -abort(403)
    -
    -# These files should be in our subdirectory
    -try:
    -# set nocache, set content-type
    -watch_dir = datastore_o.datastore_path + "/" + filename
    -response = make_response(send_from_directory(filename="elements.json", directory=watch_dir, path=watch_dir + "/elements.json"))
    -response.headers['Content-type'] = 'application/json'
    -response.headers['Cache-Control'] = 'no-cache, no-store, must-revalidate'
    -response.headers['Pragma'] = 'no-cache'
    -response.headers['Expires'] = 0
    -return response
    -
    -except FileNotFoundError:
    -abort(404)
    -
     # These files should be in our subdirectory
     try:
     return send_from_directory("static/{}".format(group), path=filename)

    @@ -1140,7 +1114,6 @@ def changedetection_app(config=None, datastore_o=None):
     
     # copy it to memory as trim off what we dont need (history)
     watch = deepcopy(datastore.data['watching'][uuid])
    -# For older versions that are not a @property
     if (watch.get('history')):
     del (watch['history'])
     

    @@ -1170,14 +1143,14 @@ def changedetection_app(config=None, datastore_o=None):
     
     
     except Exception as e:
    -logging.error("Error sharing -{}".format(str(e)))
    -flash("Could not share, something went wrong while communicating with the share server - {}".format(str(e)), 'error')
    +flash("Could not share, something went wrong while communicating with the share server.", 'error')
     
     # https://changedetection.io/share/VrMv05wpXyQa
     # in the browser - should give you a nice info page - wtf
     # paste in etc
     return redirect(url_for('index'))
     
    +
     # @todo handle ctrl break
     ticker_thread = threading.Thread(target=ticker_thread_check_time_launch_checks).start()
     

    @@ -1233,7 +1206,7 @@ def notification_runner():
     notification.process_notification(n_object, datastore)
     
     except Exception as e:
    -logging.error("Watch URL: {} Error {}".format(n_object['watch_url'], str(e)))
    +print("Watch URL: {} Error {}".format(n_object['watch_url'], str(e)))
     
     # UUID wont be present when we submit a 'test' from the global settings
     if 'uuid' in n_object:

    @@ -1250,7 +1223,6 @@ def notification_runner():
     # Thread runner to check every minute, look for new watches to feed into the Queue.
     def ticker_thread_check_time_launch_checks():
     from changedetectionio import update_worker
    -import logging
     
     # Spin up Workers that do the fetching
     # Can be overriden by ENV or use the default settings

    @@ -1269,10 +1241,9 @@ def ticker_thread_check_time_launch_checks():
     running_uuids.append(t.current_uuid)
     
     # Re #232 - Deepcopy the data incase it changes while we're iterating through it all
    -watch_uuid_list = []
     while True:
     try:
    -watch_uuid_list = datastore.data['watching'].keys()
    +copied_datastore = deepcopy(datastore)
     except RuntimeError as e:
     # RuntimeError: dictionary changed size during iteration
     time.sleep(0.1)

    @@ -1289,12 +1260,7 @@ def ticker_thread_check_time_launch_checks():
     recheck_time_minimum_seconds = int(os.getenv('MINIMUM_SECONDS_RECHECK_TIME', 60))
     recheck_time_system_seconds = datastore.threshold_seconds
     
    -for uuid in watch_uuid_list:
    -
    -watch = datastore.data['watching'].get(uuid)
    -if not watch:
    -logging.error("Watch: {} no longer present.".format(uuid))
    -continue
    +for uuid, watch in copied_datastore.data['watching'].items():
     
     # No need todo further processing if it's paused
     if watch['paused']:
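Both sides of the ticker-thread hunks guard against `RuntimeError: dictionary changed size during iteration`. Python raises this when a dict is resized while something is iterating over it, which can happen here if another thread adds or deletes a watch. The two sides take different approaches:

- The base side takes `datastore.data['watching'].keys()`, then re-fetches each watch by UUID and skips any that have disappeared.
- The head side deep-copies the whole datastore.

Note that `dict.keys()` returns a live view rather than a copy, so only wrapping it in `list()` actually freezes the key set.

The sketch below shows the snapshot-and-retry pattern on a plain dict standing in for the datastore. The function names are illustrative, not the project's.

```python
import time


def snapshot_keys(shared: dict, retries: int = 10, delay: float = 0.1) -> list:
    """Copy the keys of a dict that other threads may be mutating.

    list() iterates the dict, and iterating a dict while another thread
    resizes it can raise RuntimeError; if so, wait briefly and retry.
    """
    for _ in range(retries):
        try:
            return list(shared.keys())
        except RuntimeError:
            time.sleep(delay)
    raise RuntimeError("could not snapshot keys after {} attempts".format(retries))


def due_watches(watching: dict) -> list:
    due = []
    for uuid in snapshot_keys(watching):
        watch = watching.get(uuid)
        if watch is None:
            # Deleted by another thread after the snapshot was taken.
            continue
        if watch.get("paused"):
            # No need to process paused watches.
            continue
        due.append(uuid)
    return due
```

Copying only the keys is cheaper than a `deepcopy` of every watch. The trade-off is that each watch is read live, so callers must tolerate entries vanishing mid-loop.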

    @@ -28,7 +28,8 @@ class Watch(Resource):
     return "OK", 200
     
     # Return without history, get that via another API call
    -watch['history_n'] = watch.history_n
    +watch['history_n'] = len(watch['history'])
    +del (watch['history'])
     return watch
     
     @auth.check_token
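In this hunk the API handler returns the watch record without its potentially large history and adds only a `history_n` count. The head side computes the count with `len(watch['history'])` and deletes the key. The base side instead reads a `history_n` property.

The sketch below shows the trimming step on a plain dict. It copies the record first so the stored version is not mutated, which is a design choice of the sketch. The field names follow the diff, and the helper name `watch_for_api` is hypothetical.

```python
from copy import deepcopy


def watch_for_api(watch: dict) -> dict:
    """Return a copy of a watch record without its history, plus a history count."""
    out = deepcopy(watch)
    history = out.pop("history", {})  # tolerate records that have no history yet
    out["history_n"] = len(history)
    return out
```

Using `pop` with a default avoids the `KeyError` that a bare `del` would raise on a record without history.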

    @@ -51,7 +52,7 @@ class WatchHistory(Resource):
     watch = self.datastore.data['watching'].get(uuid)
     if not watch:
     abort(404, message='No watch exists with the UUID of {}'.format(uuid))
    -return watch.history, 200
    +return watch['history'], 200
     
     
     class WatchSingleHistory(Resource):

    @@ -68,13 +69,13 @@ class WatchSingleHistory(Resource):
     if not watch:
     abort(404, message='No watch exists with the UUID of {}'.format(uuid))
     
    -if not len(watch.history):
    +if not len(watch['history']):
     abort(404, message='Watch found but no history exists for the UUID {}'.format(uuid))
     
     if timestamp == 'latest':
    -timestamp = list(watch.history.keys())[-1]
    +timestamp = list(watch['history'].keys())[-1]
     
    -with open(watch.history[timestamp], 'r') as f:
    +with open(watch['history'][timestamp], 'r') as f:
     content = f.read()
     
     response = make_response(content, 200)

@@ -1,19 +1,10 @@

|

|||||||

from abc import ABC, abstractmethod

|

from abc import ABC, abstractmethod

|

||||||

import chardet

|

import chardet

|

||||||

import json

|

|

||||||

import os

|

import os

|

||||||

import requests

|

import requests

|

||||||

import time

|

import time

|

||||||

import sys

|

import sys

|

||||||

|

|

||||||

class PageUnloadable(Exception):

|

|

||||||

def __init__(self, status_code, url):

|

|

||||||

# Set this so we can use it in other parts of the app

|

|

||||||

self.status_code = status_code

|

|

||||||

self.url = url

|

|

||||||

return

|

|

||||||

pass

|

|

||||||

|

|

||||||

class EmptyReply(Exception):

|

class EmptyReply(Exception):

|

||||||

def __init__(self, status_code, url):

|

def __init__(self, status_code, url):

|

||||||

# Set this so we can use it in other parts of the app

|

# Set this so we can use it in other parts of the app

|

||||||

@@ -22,14 +13,6 @@ class EmptyReply(Exception):

|

|||||||

return

|

return

|

||||||

pass

|

pass

|

||||||

|

|

||||||

class ScreenshotUnavailable(Exception):

|

|

||||||

def __init__(self, status_code, url):

|

|

||||||

# Set this so we can use it in other parts of the app

|

|

||||||

self.status_code = status_code

|

|

||||||

self.url = url

|

|

||||||

return

|

|

||||||

pass

|

|

||||||

|

|

||||||

class ReplyWithContentButNoText(Exception):

|

class ReplyWithContentButNoText(Exception):

|

||||||

def __init__(self, status_code, url):

|

def __init__(self, status_code, url):

|

||||||

# Set this so we can use it in other parts of the app

|

# Set this so we can use it in other parts of the app

|

||||||

@@ -44,135 +27,6 @@ class Fetcher():

|

|||||||

status_code = None

|

status_code = None

|

||||||

content = None

|

content = None

|

||||||

headers = None

|

headers = None

|

||||||

|

|

||||||

fetcher_description = "No description"

|

|

||||||

xpath_element_js = """

|

|

||||||

// Include the getXpath script directly, easier than fetching

|

|

||||||

!function(e,n){"object"==typeof exports&&"undefined"!=typeof module?module.exports=n():"function"==typeof define&&define.amd?define(n):(e=e||self).getXPath=n()}(this,function(){return function(e){var n=e;if(n&&n.id)return'//*[@id="'+n.id+'"]';for(var o=[];n&&Node.ELEMENT_NODE===n.nodeType;){for(var i=0,r=!1,d=n.previousSibling;d;)d.nodeType!==Node.DOCUMENT_TYPE_NODE&&d.nodeName===n.nodeName&&i++,d=d.previousSibling;for(d=n.nextSibling;d;){if(d.nodeName===n.nodeName){r=!0;break}d=d.nextSibling}o.push((n.prefix?n.prefix+":":"")+n.localName+(i||r?"["+(i+1)+"]":"")),n=n.parentNode}return o.length?"/"+o.reverse().join("/"):""}});

|

|

||||||

|

|

||||||

|

|

||||||

const findUpTag = (el) => {

|

|

||||||

let r = el

|

|

||||||

chained_css = [];

|

|

||||||

depth=0;

|

|

||||||

|

|

||||||

// Strategy 1: Keep going up until we hit an ID tag, imagine it's like #list-widget div h4

|

|

||||||

while (r.parentNode) {

|

|

||||||

if(depth==5) {

|

|

||||||

break;

|

|

||||||

}

|

|

||||||

if('' !==r.id) {

|

|

||||||

chained_css.unshift("#"+r.id);

|

|

||||||

final_selector= chained_css.join('>');

|

|

||||||

// Be sure theres only one, some sites have multiples of the same ID tag :-(

|

|

||||||

if (window.document.querySelectorAll(final_selector).length ==1 ) {

|

|

||||||

return final_selector;

|

|

||||||

}

|

|

||||||

return null;

|

|

||||||

} else {

|

|

||||||

chained_css.unshift(r.tagName.toLowerCase());

|

|

||||||

}

|

|

||||||

r=r.parentNode;

|

|

||||||

depth+=1;

|

|

||||||

}

|

|

||||||

return null;

|

|

||||||

}

|

|

||||||

|

|

||||||

|

|

||||||

// @todo - if it's SVG or IMG, go into image diff mode

|

|

||||||

var elements = window.document.querySelectorAll("div,span,form,table,tbody,tr,td,a,p,ul,li,h1,h2,h3,h4, header, footer, section, article, aside, details, main, nav, section, summary");

|

|

||||||

var size_pos=[];

|

|

||||||

// after page fetch, inject this JS

|

|

||||||

// build a map of all elements and their positions (maybe that only include text?)

|

|

||||||

var bbox;

|

|

||||||

for (var i = 0; i < elements.length; i++) {

|

|

||||||

bbox = elements[i].getBoundingClientRect();

|

|

||||||

|

|

||||||

// forget really small ones

|

|

||||||

if (bbox['width'] <20 && bbox['height'] < 20 ) {

|

|

||||||

continue;

|

|

||||||

}

|

|

||||||

|

|

||||||

// @todo the getXpath kind of sucks, it doesnt know when there is for example just one ID sometimes

|

|

||||||

// it should not traverse when we know we can anchor off just an ID one level up etc..

|

|

||||||

// maybe, get current class or id, keep traversing up looking for only class or id until there is just one match

|

|

||||||

|

|

||||||

// 1st primitive - if it has class, try joining it all and select, if theres only one.. well thats us.

|

|

||||||

xpath_result=false;

|

|

||||||

|

|

||||||

try {

|

|

||||||

var d= findUpTag(elements[i]);

|

|

||||||

if (d) {

|

|

||||||

xpath_result =d;

|

|

||||||

}

|

|

||||||

} catch (e) {

|

|

||||||

console.log(e);

|

|

||||||

}

|

|

||||||

|

|

||||||

// You could swap it and default to getXpath and then try the smarter one

|

|

||||||

// default back to the less intelligent one

|

|

||||||

if (!xpath_result) {

|

|

||||||

try {

|

|

||||||

// I've seen on FB and eBay that this doesnt work

|

|

||||||

// ReferenceError: getXPath is not defined at eval (eval at evaluate (:152:29), <anonymous>:67:20) at UtilityScript.evaluate (<anonymous>:159:18) at UtilityScript.<anonymous> (<anonymous>:1:44)

|

|

||||||

xpath_result = getXPath(elements[i]);

|

|

||||||

} catch (e) {

|

|

||||||

console.log(e);

|

|

||||||

continue;

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

||||||

if(window.getComputedStyle(elements[i]).visibility === "hidden") {

|

|

||||||

continue;

|

|

||||||

}

|

|

||||||

|

|

||||||

size_pos.push({

|

|

||||||

xpath: xpath_result,

|

|

||||||

width: Math.round(bbox['width']),

|

|

||||||

height: Math.round(bbox['height']),

|

|

||||||

left: Math.floor(bbox['left']),

|

|

||||||

top: Math.floor(bbox['top']),

|

|

||||||

childCount: elements[i].childElementCount

|

|

||||||

});

|

|

||||||

}

|

|

||||||

|

|

||||||

|

|

||||||

// inject the current one set in the css_filter, which may be a CSS rule

|

|

||||||

// used for displaying the current one in VisualSelector, where its not one we generated.

|

|

||||||

if (css_filter.length) {

|

|

||||||

q=false;

|

|

||||||

try {

|

|

||||||

// is it xpath?

|

|

||||||

if (css_filter.startsWith('/') || css_filter.startsWith('xpath:')) {

|

|

||||||

q=document.evaluate(css_filter.replace('xpath:',''), document, null, XPathResult.FIRST_ORDERED_NODE_TYPE, null).singleNodeValue;

|

|

||||||

} else {

|

|

||||||

q=document.querySelector(css_filter);

|

|

||||||

}

|

|

||||||

} catch (e) {

|

|

||||||

// Maybe catch DOMException and alert?

|

|

||||||

console.log(e);

|

|

||||||

}

|

|

||||||

bbox=false;

|

|

||||||

if(q) {

|

|

||||||

bbox = q.getBoundingClientRect();

|

|

||||||

}

|

|

||||||

|

|

||||||

if (bbox && bbox['width'] >0 && bbox['height']>0) {

|

|

||||||

size_pos.push({

|

|

||||||

xpath: css_filter,

|

|

||||||

width: bbox['width'],

|

|

||||||

height: bbox['height'],

|

|

||||||

left: bbox['left'],

|

|

||||||

top: bbox['top'],

|

|

||||||

childCount: q.childElementCount

|

|

||||||

});

|

|

||||||

}

|

|

||||||

}

|

|

||||||

// Window.width required for proper scaling in the frontend

|

|

||||||

return {'size_pos':size_pos, 'browser_width': window.innerWidth};

|

|

||||||

"""

|

|

||||||

xpath_data = None

|

|

||||||

|

|

||||||

# Will be needed in the future by the VisualSelector, always get this where possible.

|

# Will be needed in the future by the VisualSelector, always get this where possible.

|

||||||

screenshot = False

|

screenshot = False

|

||||||

fetcher_description = "No description"

|

fetcher_description = "No description"

|

||||||
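The left-hand side of this hunk injects `xpath_element_js`. Its `findUpTag` function walks up at most five ancestors, collecting tag names until it reaches an element with an `id`. It then keeps the resulting `#id>tag>tag` selector only if that selector matches exactly one element. The sketch below mirrors the same strategy in Python. It works over a hypothetical in-memory `Node` tree, not a real DOM, and understands only `#id` and tag-name parts joined by the `>` child combinator.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass(eq=False, repr=False)
class Node:
    # Hypothetical stand-in for a DOM element: tag name, optional id, tree links
    tag: str
    id: str = ""
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)

    def add(self, child: "Node") -> "Node":
        child.parent = self
        self.children.append(child)
        return child


def iter_nodes(root: Node) -> Iterator[Node]:
    yield root
    for child in root.children:
        yield from iter_nodes(child)


def matches_chain(node: Optional[Node], chain: List[str]) -> bool:
    # Match the chain right-to-left, stepping to the parent for each '>' combinator
    for part in reversed(chain):
        if node is None:
            return False
        if part.startswith('#'):
            if node.id != part[1:]:
                return False
        elif node.tag != part:
            return False
        node = node.parent
    return True


def find_up_tag(root: Node, el: Node, max_depth: int = 5) -> Optional[str]:
    chain: List[str] = []
    r, depth = el, 0
    while r.parent is not None:
        if depth == max_depth:
            break
        if r.id:
            chain.insert(0, '#' + r.id)
            # Some pages reuse an id, so only accept a selector with exactly one match
            count = sum(1 for n in iter_nodes(root) if matches_chain(n, chain))
            return '>'.join(chain) if count == 1 else None
        chain.insert(0, r.tag)
        r, depth = r.parent, depth + 1
    return None
```

When this returns `None`, the injected script falls back to the generic `getXPath` path.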

@@ -193,8 +47,7 @@ class Fetcher():

|

|||||||

request_headers,

|

request_headers,

|

||||||

request_body,

|

request_body,

|

||||||

request_method,

|

request_method,

|

||||||

ignore_status_codes=False,

|

ignore_status_codes=False):

|

||||||

current_css_filter=None):

|

|

||||||

# Should set self.error, self.status_code and self.content

|

# Should set self.error, self.status_code and self.content

|

||||||

pass

|

pass

|

||||||

|

|

||||||

@@ -275,8 +128,7 @@ class base_html_playwright(Fetcher):

|

|||||||

request_headers,

|

request_headers,

|

||||||

request_body,

|

request_body,

|

||||||

request_method,

|

request_method,

|

||||||

ignore_status_codes=False,

|

ignore_status_codes=False):

|

||||||

current_css_filter=None):

|

|

||||||

|

|

||||||

from playwright.sync_api import sync_playwright

|

from playwright.sync_api import sync_playwright

|

||||||

import playwright._impl._api_types

|

import playwright._impl._api_types

|

||||||

@@ -293,16 +145,11 @@ class base_html_playwright(Fetcher):

|

|||||||

# Use the default one configured in the App.py model that's passed from fetch_site_status.py

|

# Use the default one configured in the App.py model that's passed from fetch_site_status.py

|

||||||

context = browser.new_context(

|

context = browser.new_context(

|

||||||

user_agent=request_headers['User-Agent'] if request_headers.get('User-Agent') else 'Mozilla/5.0',

|

user_agent=request_headers['User-Agent'] if request_headers.get('User-Agent') else 'Mozilla/5.0',

|

||||||

proxy=self.proxy,

|

proxy=self.proxy

|

||||||

# This is needed to enable JavaScript execution on GitHub and others

|

|

||||||

bypass_csp=True,

|

|

||||||

# Should never be needed

|

|

||||||

accept_downloads=False

|

|

||||||

)

|

)

|

||||||

|

|

||||||

page = context.new_page()

|

page = context.new_page()

|

||||||

|

page.set_viewport_size({"width": 1280, "height": 1024})

|

||||||

try:

|

try:

|

||||||

# Bug - never set viewport size BEFORE page.goto

|

|

||||||

response = page.goto(url, timeout=timeout * 1000, wait_until='commit')

|

response = page.goto(url, timeout=timeout * 1000, wait_until='commit')

|

||||||

# Wait_until = commit

|

# Wait_until = commit

|

||||||

# - `'commit'` - consider operation to be finished when network response is received and the document started loading.

|

# - `'commit'` - consider operation to be finished when network response is received and the document started loading.

|

||||||

@@ -311,49 +158,22 @@ class base_html_playwright(Fetcher):

|

|||||||

extra_wait = int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)) + self.render_extract_delay

|

extra_wait = int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)) + self.render_extract_delay

|

||||||

page.wait_for_timeout(extra_wait * 1000)

|

page.wait_for_timeout(extra_wait * 1000)

|

||||||

except playwright._impl._api_types.TimeoutError as e:

|

except playwright._impl._api_types.TimeoutError as e:

|

||||||

context.close()

|

|

||||||

browser.close()

|

|

||||||

raise EmptyReply(url=url, status_code=None)

|

raise EmptyReply(url=url, status_code=None)

|

||||||

except Exception as e:

|

|

||||||

context.close()

|

|

||||||

browser.close()

|

|

||||||

raise PageUnloadable(url=url, status_code=None)

|

|

||||||

|

|

||||||

if response is None:

|

if response is None:

|

||||||

context.close()

|

|

||||||

browser.close()

|

|

||||||

raise EmptyReply(url=url, status_code=None)

|

raise EmptyReply(url=url, status_code=None)

|

||||||

|

|

||||||

if len(page.content().strip()) == 0:

|

if len(page.content().strip()) == 0:

|

||||||

context.close()

|

|

||||||

browser.close()

|

|

||||||

raise EmptyReply(url=url, status_code=None)

|

raise EmptyReply(url=url, status_code=None)

|

||||||

|

|

||||||

# Bug 2(?) Set the viewport size AFTER loading the page

|

|

||||||

page.set_viewport_size({"width": 1280, "height": 1024})

|

|

||||||

|

|

||||||

self.status_code = response.status

|

self.status_code = response.status

|

||||||

self.content = page.content()

|

self.content = page.content()

|

||||||

self.headers = response.all_headers()

|

self.headers = response.all_headers()

|

||||||

|

|

||||||

if current_css_filter is not None:

|

|

||||||

page.evaluate("var css_filter={}".format(json.dumps(current_css_filter)))

|

|

||||||

else:

|

|

||||||

page.evaluate("var css_filter=''")

|

|

||||||

|

|

||||||

self.xpath_data = page.evaluate("async () => {" + self.xpath_element_js + "}")

|

|

||||||

|

|

||||||

# Bug 3 in Playwright screenshot handling

|

|

||||||

# Some bug where it gives the wrong screenshot size, but making a request with the clip set first seems to solve it

|

# Some bug where it gives the wrong screenshot size, but making a request with the clip set first seems to solve it

|

||||||

# JPEG is better here because the screenshots can be very very large

|

# JPEG is better here because the screenshots can be very very large

|

||||||

try:

|

page.screenshot(type='jpeg', clip={'x': 1.0, 'y': 1.0, 'width': 1280, 'height': 1024})

|

||||||

page.screenshot(type='jpeg', clip={'x': 1.0, 'y': 1.0, 'width': 1280, 'height': 1024})

|

self.screenshot = page.screenshot(type='jpeg', full_page=True, quality=90)

|

||||||

self.screenshot = page.screenshot(type='jpeg', full_page=True, quality=92)

|

|

||||||

except Exception as e:

|

|

||||||

context.close()

|

|

||||||

browser.close()

|

|

||||||

raise ScreenshotUnavailable(url=url, status_code=None)

|

|

||||||

|

|

||||||

context.close()

|

context.close()

|

||||||

browser.close()

|

browser.close()

|

||||||

|

|

||||||
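In the version of `run()` that accepts `current_css_filter`, the filter is handed to the page with `json.dumps`. This escapes quotes and backslashes in a user-supplied selector, producing a valid JavaScript string literal instead of splicing the raw text into the script. Below is a small sketch of the string that reaches `page.evaluate`. The function name `css_filter_js` is hypothetical.

```python
import json
from typing import Optional


def css_filter_js(current_css_filter: Optional[str]) -> str:
    # Mirrors the two page.evaluate() branches: a JSON-encoded literal, or an empty string
    if current_css_filter is not None:
        return "var css_filter={}".format(json.dumps(current_css_filter))
    return "var css_filter=''"
```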

@@ -405,8 +225,7 @@ class base_html_webdriver(Fetcher):

|

|||||||

request_headers,

|

request_headers,

|

||||||

request_body,

|

request_body,

|

||||||

request_method,

|

request_method,

|

||||||

ignore_status_codes=False,

|

ignore_status_codes=False):

|

||||||

current_css_filter=None):

|

|

||||||

|

|

||||||

from selenium import webdriver

|

from selenium import webdriver

|

||||||

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

|

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

|

||||||

@@ -426,10 +245,6 @@ class base_html_webdriver(Fetcher):

|

|||||||

self.quit()

|

self.quit()

|

||||||

raise

|

raise

|

||||||

|

|

||||||

self.driver.set_window_size(1280, 1024)

|

|

||||||

self.driver.implicitly_wait(int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)))

|

|

||||||

self.screenshot = self.driver.get_screenshot_as_png()

|

|

||||||

|

|

||||||

# @todo - how to check this? is it possible?

|

# @todo - how to check this? is it possible?

|

||||||

self.status_code = 200

|

self.status_code = 200

|

||||||

# @todo somehow we should try to get this working for WebDriver

|

# @todo somehow we should try to get this working for WebDriver

|

||||||

@@ -439,6 +254,8 @@ class base_html_webdriver(Fetcher):

|

|||||||

time.sleep(int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)) + self.render_extract_delay)

|

time.sleep(int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)) + self.render_extract_delay)

|

||||||

self.content = self.driver.page_source

|

self.content = self.driver.page_source

|

||||||

self.headers = {}

|

self.headers = {}

|

||||||

|

self.screenshot = self.driver.get_screenshot_as_png()

|

||||||

|

self.quit()

|

||||||

|

|

||||||

# Does the connection to the webdriver work? run a test connection.

|

# Does the connection to the webdriver work? run a test connection.

|

||||||

def is_ready(self):

|

def is_ready(self):

|

||||||

@@ -475,8 +292,7 @@ class html_requests(Fetcher):

|

|||||||

request_headers,

|

request_headers,

|

||||||

request_body,

|

request_body,

|

||||||

request_method,

|

request_method,

|

||||||

ignore_status_codes=False,

|

ignore_status_codes=False):

|

||||||

current_css_filter=None):

|

|

||||||

|

|

||||||

proxies={}

|

proxies={}

|

||||||

|

|

||||||

|

|||||||

@@ -94,7 +94,6 @@ class perform_site_check():

|

|||||||

# If the klass doesnt exist, just use a default

|

# If the klass doesnt exist, just use a default

|

||||||

klass = getattr(content_fetcher, "html_requests")

|

klass = getattr(content_fetcher, "html_requests")

|

||||||

|

|

||||||

|

|

||||||

proxy_args = self.set_proxy_from_list(watch)

|

proxy_args = self.set_proxy_from_list(watch)

|

||||||

fetcher = klass(proxy_override=proxy_args)

|

fetcher = klass(proxy_override=proxy_args)

|

||||||

|

|

||||||

@@ -105,8 +104,7 @@ class perform_site_check():

|

|||||||

elif system_webdriver_delay is not None:

|

elif system_webdriver_delay is not None:

|

||||||

fetcher.render_extract_delay = system_webdriver_delay

|

fetcher.render_extract_delay = system_webdriver_delay

|

||||||

|

|

||||||

fetcher.run(url, timeout, request_headers, request_body, request_method, ignore_status_code, watch['css_filter'])

|

fetcher.run(url, timeout, request_headers, request_body, request_method, ignore_status_code)

|

||||||

fetcher.quit()

|

|

||||||

|

|

||||||

# Fetching complete, now filters

|

# Fetching complete, now filters

|

||||||

# @todo move to class / maybe inside of fetcher abstract base?

|

# @todo move to class / maybe inside of fetcher abstract base?

|

||||||

@@ -204,20 +202,6 @@ class perform_site_check():

|

|||||||

else:

|

else:

|

||||||

stripped_text_from_html = stripped_text_from_html.encode('utf8')

|

stripped_text_from_html = stripped_text_from_html.encode('utf8')

|

||||||

|

|

||||||

# 615 Extract text by regex

|

|

||||||

extract_text = watch.get('extract_text', [])

|

|

||||||

if len(extract_text) > 0:

|

|

||||||

regex_matched_output = []

|

|

||||||

for s_re in extract_text:

|

|

||||||

result = re.findall(s_re.encode('utf8'), stripped_text_from_html,

|

|

||||||

flags=re.MULTILINE | re.DOTALL | re.LOCALE)

|

|

||||||

if result:

|

|

||||||

regex_matched_output.append(result[0])

|

|

||||||

|

|

||||||

if regex_matched_output:

|

|

||||||

stripped_text_from_html = b'\n'.join(regex_matched_output)

|

|

||||||

text_content_before_ignored_filter = stripped_text_from_html

|

|

||||||

|

|

||||||

# Re #133 - if we should strip whitespaces from triggering the change detected comparison

|

# Re #133 - if we should strip whitespaces from triggering the change detected comparison

|

||||||

if self.datastore.data['settings']['application'].get('ignore_whitespace', False):

|

if self.datastore.data['settings']['application'].get('ignore_whitespace', False):

|

||||||

fetched_md5 = hashlib.md5(stripped_text_from_html.translate(None, b'\r\n\t ')).hexdigest()

|

fetched_md5 = hashlib.md5(stripped_text_from_html.translate(None, b'\r\n\t ')).hexdigest()

|

||||||
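The left-hand side of this hunk adds the `extract_text` step (#615). For each regex, only the first `re.findall` result is kept, and the results are joined with newlines. If no pattern matches, the text is left unchanged. Below is a minimal standalone sketch of that step. It assumes each pattern has at most one capture group, because `re.findall` returns tuples for multiple groups and the bytes join would then fail. The function name `extract_text_by_regex` is hypothetical.

```python
import re
from typing import List


def extract_text_by_regex(text: bytes, patterns: List[str]) -> bytes:
    """Keep the first match of each pattern, one per line.

    Assumes zero or one capture group per pattern (see lead-in).
    """
    matched = []
    for pattern in patterns:
        # re.LOCALE is only accepted with bytes patterns, hence the encode()
        result = re.findall(pattern.encode('utf8'), text,
                            flags=re.MULTILINE | re.DOTALL | re.LOCALE)
        if result:
            matched.append(result[0])
    # Fall back to the unfiltered text when nothing matched
    return b'\n'.join(matched) if matched else text
```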

@@ -235,11 +219,9 @@ class perform_site_check():

|

|||||||

# Yeah, lets block first until something matches

|

# Yeah, lets block first until something matches

|

||||||

blocked_by_not_found_trigger_text = True

|

blocked_by_not_found_trigger_text = True

|

||||||

# Filter and trigger works the same, so reuse it

|

# Filter and trigger works the same, so reuse it

|

||||||

# It should return the line numbers that match

|

|

||||||

result = html_tools.strip_ignore_text(content=str(stripped_text_from_html),

|

result = html_tools.strip_ignore_text(content=str(stripped_text_from_html),

|

||||||

wordlist=watch['trigger_text'],

|

wordlist=watch['trigger_text'],

|

||||||

mode="line numbers")

|

mode="line numbers")

|

||||||

# If it returned any lines that matched..

|

|

||||||

if result:

|

if result:

|

||||||

blocked_by_not_found_trigger_text = False

|

blocked_by_not_found_trigger_text = False

|

||||||

|

|

||||||
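The trigger check calls `html_tools.strip_ignore_text` with `mode="line numbers"`. `blocked_by_not_found_trigger_text` then flips to `False` as soon as any line matches. The internals of that helper are not part of this diff, so the following is only a hypothetical stand-in. It assumes case-insensitive substring matching and 1-based line numbers.

```python
from typing import List


def matching_line_numbers(content: str, wordlist: List[str]) -> List[int]:
    # Hypothetical stand-in for strip_ignore_text(..., mode="line numbers")
    words = [w.strip().lower() for w in wordlist if w.strip()]
    hits = []
    for n, line in enumerate(content.splitlines(), start=1):
        if any(w in line.lower() for w in words):
            hits.append(n)
    return hits


def blocked_by_trigger(content: str, wordlist: List[str]) -> bool:
    # Start blocked; any matching line unblocks, like blocked_by_not_found_trigger_text
    return not matching_line_numbers(content, wordlist)
```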

@@ -254,4 +236,4 @@ class perform_site_check():

|

|||||||

if not watch['title'] or not len(watch['title']):

|

if not watch['title'] or not len(watch['title']):

|

||||||

update_obj['title'] = html_tools.extract_element(find='title', html_content=fetcher.content)

|

update_obj['title'] = html_tools.extract_element(find='title', html_content=fetcher.content)

|

||||||

|

|

||||||

return changed_detected, update_obj, text_content_before_ignored_filter, fetcher.screenshot, fetcher.xpath_data

|

return changed_detected, update_obj, text_content_before_ignored_filter, fetcher.screenshot

|

||||||

|

|||||||

@@ -223,7 +223,7 @@ class validateURL(object):

|

|||||||

except validators.ValidationFailure:

|

except validators.ValidationFailure:

|

||||||

message = field.gettext('\'%s\' is not a valid URL.' % (field.data.strip()))

|

message = field.gettext('\'%s\' is not a valid URL.' % (field.data.strip()))

|

||||||

raise ValidationError(message)

|

raise ValidationError(message)

|

||||||

|

|

||||||

class ValidateListRegex(object):

|

class ValidateListRegex(object):

|

||||||

"""

|

"""

|

||||||

Validates that anything that looks like a regex passes as a regex

|

Validates that anything that looks like a regex passes as a regex

|

||||||

@@ -307,7 +307,7 @@ class ValidateCSSJSONXPATHInput(object):

|

|||||||

|

|

||||||

class quickWatchForm(Form):

|

class quickWatchForm(Form):

|

||||||

url = fields.URLField('URL', validators=[validateURL()])

|

url = fields.URLField('URL', validators=[validateURL()])

|

||||||

tag = StringField('Group tag', [validators.Optional()])

|

tag = StringField('Group tag', [validators.Optional(), validators.Length(max=35)])

|

||||||

|

|

||||||

# Common to a single watch and the global settings

|

# Common to a single watch and the global settings

|

||||||

class commonSettingsForm(Form):

|

class commonSettingsForm(Form):

|

||||||

@@ -323,16 +323,13 @@ class commonSettingsForm(Form):

|

|||||||

class watchForm(commonSettingsForm):

|

class watchForm(commonSettingsForm):

|

||||||

|

|

||||||

url = fields.URLField('URL', validators=[validateURL()])

|

url = fields.URLField('URL', validators=[validateURL()])

|

||||||

tag = StringField('Group tag', [validators.Optional()], default='')

|

tag = StringField('Group tag', [validators.Optional(), validators.Length(max=35)], default='')

|

||||||

|

|

||||||

time_between_check = FormField(TimeBetweenCheckForm)

|

time_between_check = FormField(TimeBetweenCheckForm)

|

||||||

|

|

||||||

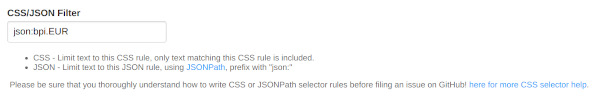

css_filter = StringField('CSS/JSON/XPATH Filter', [ValidateCSSJSONXPATHInput()], default='')

|

css_filter = StringField('CSS/JSON/XPATH Filter', [ValidateCSSJSONXPATHInput()], default='')

|

||||||

|

|

||||||

subtractive_selectors = StringListField('Remove elements', [ValidateCSSJSONXPATHInput(allow_xpath=False, allow_json=False)])

|

subtractive_selectors = StringListField('Remove elements', [ValidateCSSJSONXPATHInput(allow_xpath=False, allow_json=False)])

|

||||||

|

|

||||||

extract_text = StringListField('Extract text', [ValidateListRegex()])

|

|

||||||

|

|

||||||

title = StringField('Title', default='')

|

title = StringField('Title', default='')

|

||||||

|

|

||||||

ignore_text = StringListField('Ignore text', [ValidateListRegex()])

|

ignore_text = StringListField('Ignore text', [ValidateListRegex()])

|

||||||

|

|||||||

@@ -39,7 +39,7 @@ def element_removal(selectors: List[str], html_content):

|

|||||||

def xpath_filter(xpath_filter, html_content):

|

def xpath_filter(xpath_filter, html_content):

|

||||||

from lxml import etree, html

|

from lxml import etree, html

|

||||||

|

|

||||||

tree = html.fromstring(bytes(html_content, encoding='utf-8'))

|

tree = html.fromstring(html_content)

|

||||||

html_block = ""

|

html_block = ""

|

||||||

|

|

||||||

for item in tree.xpath(xpath_filter.strip(), namespaces={'re':'http://exslt.org/regular-expressions'}):

|

for item in tree.xpath(xpath_filter.strip(), namespaces={'re':'http://exslt.org/regular-expressions'}):

|

||||||

|

|||||||

@@ -92,7 +92,7 @@ class import_distill_io_json(Importer):

|

|||||||

|

|

||||||

for d in data.get('data'):

|

for d in data.get('data'):

|

||||||

d_config = json.loads(d['config'])

|

d_config = json.loads(d['config'])

|

||||||

extras = {'title': d.get('name', None)}

|

extras = {'title': d['name']}

|

||||||

|

|

||||||

if len(d['uri']) and good < 5000:

|

if len(d['uri']) and good < 5000:

|

||||||

try:

|

try:

|

||||||

@@ -114,9 +114,12 @@ class import_distill_io_json(Importer):

|

|||||||

except IndexError:

|

except IndexError:

|

||||||

pass

|

pass

|

||||||

|

|

||||||

|

try:

|

||||||

if d.get('tags', False):

|

|

||||||

extras['tag'] = ", ".join(d['tags'])

|

extras['tag'] = ", ".join(d['tags'])

|

||||||

|

except KeyError:

|

||||||

|

pass

|

||||||

|

except IndexError:

|

||||||

|

pass

|

||||||

|

|

||||||

new_uuid = datastore.add_watch(url=d['uri'].strip(),

|

new_uuid = datastore.add_watch(url=d['uri'].strip(),

|

||||||

extras=extras,

|

extras=extras,

|

||||||
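The two sides of this importer hunk handle optional Distill.io fields differently. The left-hand side reads `name` with `d.get('name', None)` and checks `tags` before joining them. The right-hand side uses `d['name']` and wraps the join in `try`/`except KeyError`. Below is a minimal sketch of the `.get()` style of building `extras`. The function name `distill_extras` is hypothetical. The field names `name` and `tags` and the `", "` join come from the importer.

```python
from typing import Any, Dict


def distill_extras(d: Dict[str, Any]) -> Dict[str, Any]:
    # A missing 'name' becomes a None title instead of raising KeyError
    extras = {'title': d.get('name', None)}
    tags = d.get('tags')
    # Only set a tag when at least one is present, joined as in the importer
    if tags:
        extras['tag'] = ", ".join(tags)
    return extras
```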

|

|||||||

@@ -35,7 +35,7 @@ class model(dict):

|

|||||||

'fetch_backend': os.getenv("DEFAULT_FETCH_BACKEND", "html_requests"),

|

'fetch_backend': os.getenv("DEFAULT_FETCH_BACKEND", "html_requests"),

|

||||||

'global_ignore_text': [], # List of text to ignore when calculating the comparison checksum

|

'global_ignore_text': [], # List of text to ignore when calculating the comparison checksum

|

||||||

'global_subtractive_selectors': [],

|

'global_subtractive_selectors': [],

|

||||||

'ignore_whitespace': True,

|

'ignore_whitespace': False,

|

||||||

'render_anchor_tag_content': False,

|

'render_anchor_tag_content': False,

|

||||||

'notification_urls': [], # Apprise URL list

|

'notification_urls': [], # Apprise URL list

|

||||||

# Custom notification content

|

# Custom notification content

|

||||||

|

|||||||

@@ -1,4 +1,5 @@

|

|||||||

import os

|

import os

|

||||||

|

|

||||||

import uuid as uuid_builder

|

import uuid as uuid_builder

|

||||||

|

|

||||||

minimum_seconds_recheck_time = int(os.getenv('MINIMUM_SECONDS_RECHECK_TIME', 60))

|

minimum_seconds_recheck_time = int(os.getenv('MINIMUM_SECONDS_RECHECK_TIME', 60))

|

||||||

@@ -11,32 +12,29 @@ from changedetectionio.notification import (

|

|||||||

|

|

||||||

|

|

||||||

class model(dict):

|

class model(dict):

|

||||||

__newest_history_key = None

|

base_config = {

|

||||||

__history_n=0

|

|

||||||

|

|

||||||

__base_config = {

|

|

||||||

'url': None,

|

'url': None,

|

||||||

'tag': None,

|

'tag': None,

|

||||||

'last_checked': 0,

|

'last_checked': 0,

|

||||||

'last_changed': 0,

|

'last_changed': 0,

|

||||||

'paused': False,

|

'paused': False,

|

||||||