Compare commits

436 Commits

quick-setu

...

dont-creat

| SHA1 |
|---|
| 51fd45624c |
| 8e5ea2cc93 |
| 9f6dc6cd04 |
| 2bc988dffc |
| a578de36c5 |
| 4c74d39df0 |
| c454cbb808 |
| 6f1eec0d5a |
| 0d05ee1586 |
| 23476f0e70 |
| cf363971c1 |
| 35409f79bf |
| fc88306805 |
| 8253074d56 |
| 5f9c8db3e1 |
| abf234298c |
| 0e1032a36a |
| 3b96e40464 |
| c747cf7ba8 |
| 3e98c8ae4b |
| aaad71fc19 |
| 78f93113d8 |
| e9e586205a |
| 89f1ba58b6 |
| 6f4fd011e3 |
| 900dc5ee78 |
| 7b8b50138b |
| 01af21f856 |
| f7f4ab314b |
| ce0355c0ad |
| 0f43213d9d |
| 93c57d9fad |
| 3cdd075baf |
| 5c617e8530 |
| 1a48965ba1 |
| 41856c4ed8 |
| 0ed897c50f |
| f8e587c415 |
| d47a25eb6d |
| 9a0792d185 |
| 948ef7ade4 |
| 0ba139f8f9 |
| a9431191fc |
| 774451f256 |
| 04577cbf32 |
| f2864af8f1 |
| 9a36d081c4 |
| 7048a0acbd |
| fba719ab8d |
| 7c5e2d00af |
| 02b8fc0c18 |
| de15dfd80d |
| 024c8d8fd5 |
| fab7d325f7 |
| 58c7cbeac7 |
| ab9efdfd14 |
| 65d5a5d34c |
| 93c157ee7f |
| de85db887c |
| 50805ca38a |
| fc6424c39e |
| f0966eb23a |
| e4fb5ab4da |
| e99f07a51d |
| 08ee223b5f |
| 572f9b8a31 |
| fcfd1b5e10 |
| 0790dd555e |
| 0b20dc7712 |
| 13c4121f52 |
| e8e176f3bd |
| 7a1d2d924e |
| c3731cf055 |
| a287e5a86c |
| 235535c327 |
| 44dc62da2d |
| 0c380c170f |
| b7a2501d64 |
| e970fef991 |
| b76148a0f4 |
| 93cc30437f |
| 6562d6e0d4 |
| 6c217cc3b6 |
| f30cdf0674 |
| 14da0646a7 |
| b413cdecc7 |
| 7bf52d9275 |
| 09e6624afd |
| b58fd995b5 |
| f7bb8a0afa |
| 3e333496c1 |
| ee776a9627 |
| 65db4d68e3 |
| 74d93d10c3 |
| 37aef0530a |
| f86763dc7a |
| 13c25f9b92 |
| 265f622e75 |
| c12db2b725 |
| a048e4a02d |
| 69662ff91c |
| fc94c57d7f |
| 7b94ba6f23 |
| 2345b6b558 |
| b8d5a12ad0 |
| 9e67a572c5 |
| 378d7b7362 |
| d1d4045c49 |
| 77409eeb3a |
| 87726e0bb2 |
| 72222158e9 |
| 1814924c19 |
| 8aae4197d7 |
| 3a8a41a3ff |
| 64caeea491 |
| 3838bff397 |
| 55ea983bda |
| b4d79839bf |
| 0b8c3add34 |
| 51d57f0963 |
| 6d932149e3 |
| 2c764e8f84 |
| 07765b0d38 |
| 7c3faa8e38 |
| 4624974b91 |
| 991841f1f9 |
| e3db324698 |
| 0988bef2cd |
| 5b281f2c34 |
| a224f64cd6 |
| 7ee97ae37f |
| 69756f20f2 |
| 326b7aacbb |
| fde7b3fd97 |
| 9d04cb014a |
| 5b530ff61c |
| c98536ace4 |
| 463747d3b7 |
| 791bdb42aa |
| ce6c2737a8 |
| ade9e1138b |
| 68d5178367 |
| 41dc57aee3 |
| 943704cd04 |
| 883561f979 |
| 35d44c8277 |
| d07d7a1b18 |
| f066a1c38f |
| d0d191a7d1 |
| d7482c8d6a |
| bcf7417f63 |
| df6e835035 |
| ab28f20eba |
| 1174b95ab4 |
| a564475325 |
| 85d8d57997 |
| 359dcb63e3 |
| b043d477dc |
| 06bcfb28e5 |
| ca3b351bae |
| b7e0f0a5e4 |
| 61f0ac2937 |
| fca66eb558 |
| 359fc48fb4 |
| d0efeb9770 |
| 3416532cd6 |
| defc7a340e |
| c197c062e1 |
| 77b59809ca |
| f90b170e68 |
| c93ca1841c |
| 57f604dff1 |
| 8499468749 |
| 7f6a13ea6c |
| 9874f0cbc7 |
| 72834a42fd |
| 724cb17224 |
| 4eb4b401a1 |
| 5d40e16c73 |
| 492bbce6b6 |
| 0394a56be5 |
| 7839551d6b |
| 9c5588c791 |
| 5a43a350de |
| 3c31f023ce |
| 4cbcc59461 |
| 4be0260381 |
| 957a3c1c16 |
| 85897e0bf9 |
| 63095f70ea |
| 8d5b0b5576 |
| 1b077abd93 |
| 32ea1a8721 |
| fff32cef0d |
| 8fb146f3e4 |
| 770b0faa45 |
| f6faa90340 |
| 669fd3ae0b |
| 17d37fb626 |
| dfa7fc3a81 |
| cd467df97a |
| 71bc2fed82 |
| 738fcfe01c |
| 3ebb2ab9ba |
| ac98bc9144 |
| 3705ce6681 |
| f7ea99412f |
| d4715e2bc8 |
| 8567a83c47 |
| 77fdf59ae3 |
| 0e194aa4b4 |
| 2ba55bb477 |
| 4c759490da |
| 58a52c1f60 |
| 22638399c1 |
| e3381776f2 |
| 26e2f21a80 |
| b6009ae9ff |
| b046d6ef32 |
| e154a3cb7a |
| 1262700263 |
| 434c5813b9 |
| 0a3dc7d77b |
| a7e296de65 |
| bd0fbaaf27 |
| 0c111bd9ae |
| ed9ac0b7fb |
| 743a3069bb |
| fefc39427b |
| 2c6faa7c4e |
| 6168cd2899 |
| f3c7c969d8 |
| 1355c2a245 |
| 96cf1a06df |
| 019a4a0375 |
| db2f7b80ea |
| bfabd7b094 |
| d92dbfe765 |
| 67d2441334 |
| 3c30bc02d5 |
| dcb54117d5 |
| b1e32275dc |
| e2a6865932 |
| f04adb7202 |
| 1193a7f22c |
| 0b976827bb |
| 280e916033 |
| 5494e61a05 |
| e461c0b819 |
| d67c654f37 |
| 06ab34b6af |
| ba8676c4ba |
| 4899c1a4f9 |
| 9bff1582f7 |
| 269e3bb7c5 |
| 9976f3f969 |
| 1f250aa868 |
| 1c08d9f150 |
| 9942107016 |
| 1eb5726cbf |
| b3271ff7bb |
| f82d3b648a |
| 034b1330d4 |
| a7d005109f |
| 048c355e04 |
| 4026575b0b |
| 8c466b4826 |
| 6f072b42e8 |
| e318253f31 |
| f0f2fe94ce |
| 26f5c56ba4 |
| a1c3107cd6 |
| 8fef3ff4ab |
| baa25c9f9e |
| 488699b7d4 |
| cf3a1ee3e3 |
| daae43e9f9 |
| cdeedaa65c |
| 3c9d2ded38 |
| 9f4364a130 |
| 5bd9eaf99d |
| b1c51c0a65 |
| 232bd92389 |
| e6173357a9 |
| f2b8888aff |
| 9c46f175f9 |
| 1f27865fdf |
| faa42d75e0 |
| 3b6e6d85bb |
| 30d6a272ce |
| 291700554e |
| a82fad7059 |
| c2fe5ae0d1 |
| 5beefdb7cc |
| 872bbba71c |
| d578de1a35 |
| cdc104be10 |
| dd0eeca056 |
| a95468be08 |
| ace44d0e00 |
| ebb8b88621 |
| 12fc2200de |
| 52d3d375ba |
| 08117089e6 |
| 2ba3a6d53f |
| 2f636553a9 |
| 0bde48b282 |
| fae1164c0b |
| 169c293143 |
| 46cb5cff66 |
| 05584ea886 |
| 176a591357 |
| 15569f9592 |
| 5f9e475fe0 |
| 34b8784f50 |
| 2b054ced8c |
| 6553980cd5 |
| 7c12c47204 |
| dbd9b470d7 |
| 83555a9991 |
| 5bfdb28bd2 |
| 31a6a6717b |
| 7da32f9ac3 |
| bb732d3d2e |
| 485e55f9ed |
| 601a20ea49 |
| 76996b9eb8 |
| fba2b1a39d |
| 4a91505af5 |
| 4841c79b4c |
| 2ba00d2e1d |
| 19c96f4bdd |
| 82b900fbf4 |
| 358a365303 |
| a07ca4b136 |
| ba8cf2c8cf |
| 3106b6688e |
| 2c83845dac |
| 111266d6fa |
| ead610151f |
| 7e1e763989 |
| 327cc4af34 |
| 6008ff516e |
| cdcf4b353f |
| 1ab70f8e86 |
| 8227c012a7 |
| c113d5fb24 |
| 8c8d4066d7 |
| 277dc9e1c1 |
| fc0fd1ce9d |
| bd6127728a |
| 4101ae00c6 |
| 62f14df3cb |
| 560d465c59 |
| 7929aeddfc |
| 8294519f43 |
| 8ba8a220b6 |
| aa3c8a9370 |
| dbb5468cdc |
| 329c7620fb |
| 1f974bfbb0 |
| 437c8525af |
| a2a1d5ae90 |
| 2566de2aae |
| dfec8dbb39 |
| 5cefb16e52 |
| 341ae24b73 |
| f47c2fb7f6 |
| 9d742446ab |
| e3e022b0f4 |
| 6de4027c27 |
| cda3837355 |
| 7983675325 |
| eef56e52c6 |
| 8e3195f394 |
| e17c2121f7 |
| 07e279b38d |
| 2c834cfe37 |
| dbb5c666f0 |
| 70b3493866 |
| 3b11c474d1 |
| 890e1e6dcd |
| 6734fb91a2 |
| 16809b48f8 |
| 67c833d2bc |
| 31fea55ee4 |
| b6c50d3b1a |
| 034507f14f |
| 0e385b1c22 |
| f28c260576 |
| 18f0b63b7d |
| 97045e7a7b |
| 9807cf0cda |
| d4b5237103 |
| dc6f76ba64 |
| 1f2f93184e |
| 0f08c8dda3 |
| 68db20168e |
| 1d4474f5a3 |
| 613308881c |
| f69585b276 |
| 0179940df1 |
| c0d0424e7e |
| 014dc61222 |
| 06517bfd22 |
| b3a115dd4a |
| ffc4215411 |
| 9e708810d1 |
| 1e8aa6158b |
| 015353eccc |
| 501183e66b |
| def74f27e6 |
| 37775a46c6 |
| e4eaa0c817 |
| 206ded4201 |
| 9e71f2aa35 |
| f9594aeffb |
| b4e1353376 |
| 5b670c38d3 |
| 2a9fb12451 |
| 6c3c5dc28a |
| 8f062bfec9 |
| 380c512cc2 |
| d7ed7c44ed |
| 34a87c0f41 |
| 4074fe53f1 |
| 44d599d0d1 |
| 615fe9290a |
| 2cc6955bc3 |
| 9809af142d |
| 1890881977 |
| 9fc2fe85d5 |
| bb3c546838 |
| 165f794595 |
| a440eece9e |
| 34c83f0e7c |

25

.github/ISSUE_TEMPLATE/bug_report.md

@@ -1,25 +1,42 @@

---
name: Bug report
about: Create a report to help us improve
about: Create a bug report, if you don't follow this template, your report will be DELETED
title: ''
labels: ''
assignees: ''
labels: 'triage'
assignees: 'dgtlmoon'

---

**DO NOT USE THIS FORM TO REPORT THAT A PARTICULAR WEBSITE IS NOT SCRAPING/WATCHING AS EXPECTED**

This form is only for direct bugs and feature requests todo directly with the software.

Please report watched websites (full URL and _any_ settings) that do not work with changedetection.io as expected [**IN THE DISCUSSION FORUMS**](https://github.com/dgtlmoon/changedetection.io/discussions) or your report will be deleted

CONSIDER TAKING OUT A SUBSCRIPTION FOR A SMALL PRICE PER MONTH, YOU GET THE BENEFIT OF USING OUR PAID PROXIES AND FURTHERING THE DEVELOPMENT OF CHANGEDETECTION.IO

THANK YOU

**Describe the bug**
A clear and concise description of what the bug is.

**Version**
In the top right area: 0....
*Exact version* in the top right area: 0....

**To Reproduce**

Steps to reproduce the behavior:
1. Go to '...'
2. Click on '....'
3. Scroll down to '....'
4. See error

! ALWAYS INCLUDE AN EXAMPLE URL WHERE IT IS POSSIBLE TO RE-CREATE THE ISSUE - USE THE 'SHARE WATCH' FEATURE AND PASTE IN THE SHARE-LINK!

**Expected behavior**
A clear and concise description of what you expected to happen.

4

.github/ISSUE_TEMPLATE/feature_request.md

@@ -1,8 +1,8 @@

---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: ''
title: '[feature]'
labels: 'enhancement'
assignees: ''

---

31

.github/test/Dockerfile-alpine

@@ -0,0 +1,31 @@

# Taken from https://github.com/linuxserver/docker-changedetection.io/blob/main/Dockerfile
# Test that we can still build on Alpine (musl modified libc https://musl.libc.org/)
# Some packages wont install via pypi because they dont have a wheel available under this architecture.

FROM ghcr.io/linuxserver/baseimage-alpine:3.16
ENV PYTHONUNBUFFERED=1

COPY requirements.txt /requirements.txt

RUN \
apk add --update --no-cache --virtual=build-dependencies \
cargo \
g++ \
gcc \
libc-dev \
libffi-dev \
libxslt-dev \
make \
openssl-dev \
py3-wheel \
python3-dev \
zlib-dev && \
apk add --update --no-cache \
libxslt \
python3 \
py3-pip && \
echo "**** pip3 install test of changedetection.io ****" && \
pip3 install -U pip wheel setuptools && \
pip3 install -U --no-cache-dir --find-links https://wheel-index.linuxserver.io/alpine-3.16/ -r /requirements.txt && \
apk del --purge \
build-dependencies

18

.github/workflows/containers.yml

@@ -50,7 +50,6 @@ jobs:
python -m pip install --upgrade pip
pip install flake8 pytest
if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
if [ -f requirements-dev.txt ]; then pip install -r requirements-dev.txt; fi

- name: Create release metadata
run: |

@@ -85,8 +84,8 @@ jobs:
version: latest
driver-opts: image=moby/buildkit:master

# master always builds :latest
- name: Build and push :latest
# master branch -> :dev container tag
- name: Build and push :dev
id: docker_build
if: ${{ github.ref }} == "refs/heads/master"
uses: docker/build-push-action@v2

@@ -95,12 +94,13 @@ jobs:
file: ./Dockerfile
push: true
tags: |
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:latest,ghcr.io/${{ github.repository }}:latest
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:dev,ghcr.io/${{ github.repository }}:dev
platforms: linux/amd64,linux/arm64,linux/arm/v6,linux/arm/v7
cache-from: type=local,src=/tmp/.buildx-cache
cache-to: type=local,dest=/tmp/.buildx-cache
provenance: false

# A new tagged release is required, which builds :tag
# A new tagged release is required, which builds :tag and :latest
- name: Build and push :tag
id: docker_build_tag_release
if: github.event_name == 'release' && startsWith(github.event.release.tag_name, '0.')

@@ -110,10 +110,14 @@ jobs:
file: ./Dockerfile
push: true
tags: |
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:${{ github.event.release.tag_name }},ghcr.io/dgtlmoon/changedetection.io:${{ github.event.release.tag_name }}
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:${{ github.event.release.tag_name }}
ghcr.io/dgtlmoon/changedetection.io:${{ github.event.release.tag_name }}
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:latest
ghcr.io/dgtlmoon/changedetection.io:latest
platforms: linux/amd64,linux/arm64,linux/arm/v6,linux/arm/v7
cache-from: type=local,src=/tmp/.buildx-cache
cache-to: type=local,dest=/tmp/.buildx-cache
provenance: false

- name: Image digest
run: echo step SHA ${{ steps.vars.outputs.sha_short }} tag ${{steps.vars.outputs.tag}} branch ${{steps.vars.outputs.branch}} digest ${{ steps.docker_build.outputs.digest }}

@@ -125,5 +129,3 @@ jobs:
key: ${{ runner.os }}-buildx-${{ github.sha }}
restore-keys: |
${{ runner.os }}-buildx-
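The comments in this workflow describe a tagging scheme: pushes to `master` build the `:dev` tag, and a published release whose tag starts with `0.` builds `:<tag>` plus `:latest`. As a rough sketch of that intent only, the hypothetical Python helper below maps a run's event, ref and release tag to image names. `image_tags`, its parameters and the `dgtlmoon/changedetection.io` default are illustrative assumptions; it models the comments, not how GitHub Actions evaluates the `if:` expressions.

```python
from typing import List, Optional


def image_tags(
    event_name: str,
    ref: str,
    release_tag: Optional[str],
    hub_user: str,
    repository: str = "dgtlmoon/changedetection.io",
) -> List[str]:
    """Hypothetical helper: which image tags the workflow comments imply."""
    hub_repo = f"{hub_user}/changedetection.io"
    ghcr_repo = f"ghcr.io/{repository}"

    # A published release tagged "0.x..." builds :<tag> and :latest
    if event_name == "release" and release_tag and release_tag.startswith("0."):
        return [
            f"{hub_repo}:{release_tag}",
            f"{ghcr_repo}:{release_tag}",
            f"{hub_repo}:latest",
            f"{ghcr_repo}:latest",
        ]

    # A push to master builds the :dev tag
    if ref == "refs/heads/master":
        return [f"{hub_repo}:dev", f"{ghcr_repo}:dev"]

    # Any other run publishes nothing
    return []
```

Read this way, `:latest` only moves when a version is released, while `master` feeds the `:dev` tag.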

6

.github/workflows/pypi.yml

@@ -19,12 +19,6 @@ jobs:
with:
python-version: 3.9

# - name: Install dependencies
# run: |
# python -m pip install --upgrade pip
# pip install flake8 pytest
# if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
# if [ -f requirements-dev.txt ]; then pip install -r requirements-dev.txt; fi

- name: Test that pip builds without error
run: |

68

.github/workflows/test-container-build.yml

@@ -0,0 +1,68 @@

name: ChangeDetection.io Container Build Test

# Triggers the workflow on push or pull request events

# This line doesnt work, even tho it is the documented one
#on: [push, pull_request]

on:
push:
paths:
- requirements.txt
- Dockerfile
- .github/workflows/*

pull_request:
paths:
- requirements.txt
- Dockerfile
- .github/workflows/*

# Changes to requirements.txt packages and Dockerfile may or may not always be compatible with arm etc, so worth testing
# @todo: some kind of path filter for requirements.txt and Dockerfile
jobs:
test-container-build:
runs-on: ubuntu-latest
steps:
- uses: actions/checkout@v2
- name: Set up Python 3.9
uses: actions/setup-python@v2
with:
python-version: 3.9

# Just test that the build works, some libraries won't compile on ARM/rPi etc
- name: Set up QEMU
uses: docker/setup-qemu-action@v1
with:
image: tonistiigi/binfmt:latest
platforms: all

- name: Set up Docker Buildx
id: buildx
uses: docker/setup-buildx-action@v1
with:
install: true
version: latest
driver-opts: image=moby/buildkit:master

# https://github.com/dgtlmoon/changedetection.io/pull/1067
# Check we can still build under alpine/musl
- name: Test that the docker containers can build (musl via alpine check)
id: docker_build_musl
uses: docker/build-push-action@v2
with:
context: ./
file: ./.github/test/Dockerfile-alpine
platforms: linux/amd64,linux/arm64

- name: Test that the docker containers can build
id: docker_build
uses: docker/build-push-action@v2
# https://github.com/docker/build-push-action#customizing
with:
context: ./
file: ./Dockerfile
platforms: linux/arm/v7,linux/arm/v6,linux/amd64,linux/arm64,
cache-from: type=local,src=/tmp/.buildx-cache
cache-to: type=local,dest=/tmp/.buildx-cache

76

.github/workflows/test-only.yml

@@ -1,45 +1,77 @@
name: ChangeDetection.io Test
name: ChangeDetection.io App Test

# Triggers the workflow on push or pull request events
on: [push, pull_request]

jobs:
test-build:
test-application:
runs-on: ubuntu-latest
steps:

- uses: actions/checkout@v2
- name: Set up Python 3.9

# Mainly just for link/flake8
- name: Set up Python 3.10
uses: actions/setup-python@v2
with:
python-version: 3.9
python-version: '3.10'

- name: Show env vars
run: set

- name: Install dependencies
run: |
python -m pip install --upgrade pip
pip install flake8 pytest
if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
if [ -f requirements-dev.txt ]; then pip install -r requirements-dev.txt; fi
- name: Lint with flake8
run: |
pip3 install flake8
# stop the build if there are Python syntax errors or undefined names
flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics
# exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide
flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

- name: Unit tests
- name: Spin up ancillary testable services
run: |
python3 -m unittest changedetectionio.tests.unit.test_notification_diff

docker network create changedet-network

- name: Test with pytest
# Selenium+browserless
docker run --network changedet-network -d --hostname selenium -p 4444:4444 --rm --shm-size="2g" selenium/standalone-chrome-debug:3.141.59
docker run --network changedet-network -d --hostname browserless -e "DEFAULT_LAUNCH_ARGS=[\"--window-size=1920,1080\"]" --rm -p 3000:3000 --shm-size="2g" browserless/chrome:1.53-chrome-stable

- name: Build changedetection.io container for testing
run: |
# Build a changedetection.io container and start testing inside
docker build . -t test-changedetectionio

- name: Test built container with pytest
run: |
# Each test is totally isolated and performs its own cleanup/reset
cd changedetectionio; ./run_all_tests.sh

# Unit tests
docker run test-changedetectionio bash -c 'python3 -m unittest changedetectionio.tests.unit.test_notification_diff'

# All tests
docker run --network changedet-network test-changedetectionio bash -c 'cd changedetectionio && ./run_basic_tests.sh'

# https://github.com/docker/build-push-action/blob/master/docs/advanced/test-before-push.md ?
# https://github.com/docker/buildx/issues/59 ? Needs to be one platform?
- name: Test built container selenium+browserless/playwright
run: |

# Selenium fetch
docker run -e "WEBDRIVER_URL=http://selenium:4444/wd/hub" --network changedet-network test-changedetectionio bash -c 'cd changedetectionio;pytest tests/fetchers/test_content.py && pytest tests/test_errorhandling.py'

# Playwright/Browserless fetch
docker run -e "PLAYWRIGHT_DRIVER_URL=ws://browserless:3000" --network changedet-network test-changedetectionio bash -c 'cd changedetectionio;pytest tests/fetchers/test_content.py && pytest tests/test_errorhandling.py && pytest tests/visualselector/test_fetch_data.py'

# https://github.com/docker/buildx/issues/495#issuecomment-918925854
- name: Test proxy interaction
run: |
cd changedetectionio
./run_proxy_tests.sh
cd ..

- name: Test changedetection.io container starts+runs basically without error
run: |
docker run -p 5556:5000 -d test-changedetectionio
sleep 3
# Should return 0 (no error) when grep finds it
curl -s http://localhost:5556 |grep -q checkbox-uuid

# and IPv6
curl -s -g -6 "http://[::1]:5556"|grep -q checkbox-uuid

#export WEBDRIVER_URL=http://localhost:4444/wd/hub
#pytest tests/fetchers/test_content.py
#pytest tests/test_errorhandling.py
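The final step of this workflow starts the container, sleeps for three seconds, and treats the run as healthy if the home page contains the string `checkbox-uuid`. A fixed `sleep` can race a slow start, so a minimal Python sketch of the same check with polling might look like the following. `wait_for_marker`, `fetch_text` and their parameters are hypothetical; the fetch function is injectable so the logic can be exercised without a running container.

```python
import time
import urllib.request
from typing import Callable


def fetch_text(url: str, timeout: float = 2.0) -> str:
    """Fetch a URL and decode the body as UTF-8 (undecodable bytes replaced)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def wait_for_marker(
    url: str,
    marker: str,
    attempts: int = 10,
    delay: float = 1.0,
    fetch: Callable[[str], str] = fetch_text,
) -> bool:
    """Poll `url` until the body contains `marker` (like `curl -s | grep -q`).

    Returns True on the first match, False if every attempt fails.
    """
    for attempt in range(attempts):
        try:
            if marker in fetch(url):
                return True
        except OSError:
            # URLError, refused connections and timeouts are all OSError
            # subclasses: the server is probably not up yet, so retry
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

Called as `wait_for_marker("http://localhost:5556", "checkbox-uuid")`, it returns as soon as the page answers, instead of always waiting a fixed interval.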

2

.gitignore

@@ -8,5 +8,7 @@ __pycache__
build
dist
venv
test-datastore/*
test-datastore
*.egg-info*
.vscode/settings.json

@@ -6,10 +6,4 @@ Otherwise, it's always best to PR into the `dev` branch.


Please be sure that all new functionality has a matching test!

Use `pytest` to validate/test, you can run the existing tests as `pytest tests/test_notifications.py` for example

```
pip3 install -r requirements-dev
```

this is from https://github.com/dgtlmoon/changedetection.io/blob/master/requirements-dev.txt
Use `pytest` to validate/test, you can run the existing tests as `pytest tests/test_notification.py` for example
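These notes ask that every new feature ship with a matching test run through `pytest`. For illustration only, a module that pytest would collect could look like the following. The file name `tests/test_example.py` and the `normalise_whitespace` helper are hypothetical and are not part of changedetection.io.

```python
# tests/test_example.py  (hypothetical file name)
import re


def normalise_whitespace(text: str) -> str:
    """Hypothetical helper under test: collapse whitespace runs to one space."""
    return re.sub(r"\s+", " ", text).strip()


def test_collapses_internal_whitespace():
    # pytest collects functions whose names start with `test_`
    assert normalise_whitespace("a  b\n\tc") == "a b c"


def test_strips_leading_and_trailing_whitespace():
    assert normalise_whitespace("  hello  ") == "hello"
```

You would run it on its own with `pytest tests/test_example.py`, the same way the existing tests are run.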

42

Dockerfile

@@ -1,17 +1,19 @@

# pip dependencies install stage
FROM python:3.8-slim as builder
FROM python:3.10-slim as builder

# rustc compiler would be needed on ARM type devices but theres an issue with some deps not building..
# See `cryptography` pin comment in requirements.txt
ARG CRYPTOGRAPHY_DONT_BUILD_RUST=1

RUN apt-get update && apt-get install -y --no-install-recommends \
libssl-dev \
libffi-dev \
g++ \
gcc \
libc-dev \
libffi-dev \
libjpeg-dev \
libssl-dev \
libxslt-dev \
zlib1g-dev \
g++
make \
zlib1g-dev

RUN mkdir /install
WORKDIR /install

@@ -20,22 +22,23 @@ COPY requirements.txt /requirements.txt

RUN pip install --target=/dependencies -r /requirements.txt

# Playwright is an alternative to Selenium
# Excluded this package from requirements.txt to prevent arm/v6 and arm/v7 builds from failing
# https://github.com/dgtlmoon/changedetection.io/pull/1067 also musl/alpine (not supported)
RUN pip install --target=/dependencies playwright~=1.27.1 \
|| echo "WARN: Failed to install Playwright. The application can still run, but the Playwright option will be disabled."

# Final image stage
FROM python:3.8-slim
FROM python:3.10-slim

# Actual packages needed at runtime, usually due to the notification (apprise) backend
# rustc compiler would be needed on ARM type devices but theres an issue with some deps not building..
ARG CRYPTOGRAPHY_DONT_BUILD_RUST=1

# Re #93, #73, excluding rustc (adds another 430Mb~)
RUN apt-get update && apt-get install -y --no-install-recommends \
libssl-dev \
libffi-dev \
gcc \
libc-dev \
libxslt-dev \
zlib1g-dev \
g++
libssl1.1 \
libxslt1.1 \
# For pdftohtml
poppler-utils \
zlib1g \
&& apt-get clean && rm -rf /var/lib/apt/lists/*

# https://stackoverflow.com/questions/58701233/docker-logs-erroneously-appears-empty-until-container-stops
ENV PYTHONUNBUFFERED=1

@@ -53,6 +56,7 @@ EXPOSE 5000

# The actual flask app
COPY changedetectionio /app/changedetectionio

# The eventlet server wrapper
COPY changedetection.py /app/changedetection.py
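The Dockerfile installs Playwright in a separate step. If that step fails, it only prints a warning, so the image still builds on platforms where Playwright is unavailable and the Playwright option is simply disabled. On the application side, one minimal way to detect whether such an optional package is importable is `importlib.util.find_spec`. The Python sketch below shows how a feature flag could be derived. `module_available` and `PLAYWRIGHT_ENABLED` are hypothetical names, and this is not changedetection.io's actual code.

```python
import importlib.util


def module_available(name: str) -> bool:
    """Return True if top-level module `name` can be imported, without importing it."""
    return importlib.util.find_spec(name) is not None


# Hypothetical feature flag: offer the Playwright fetcher only when installed
PLAYWRIGHT_ENABLED = module_available("playwright")

if not PLAYWRIGHT_ENABLED:
    print("WARN: Playwright not installed; the Playwright fetcher option is disabled.")
```

Checking with `find_spec` rather than importing means the check does not run the optional package's import-time code.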

12

MANIFEST.in

@@ -1,6 +1,14 @@

recursive-include changedetectionio/templates *
recursive-include changedetectionio/api *
recursive-include changedetectionio/blueprint *
recursive-include changedetectionio/model *
recursive-include changedetectionio/res *
recursive-include changedetectionio/static *
recursive-include changedetectionio/templates *
recursive-include changedetectionio/tests *
prune changedetectionio/static/package-lock.json
prune changedetectionio/static/styles/node_modules
prune changedetectionio/static/styles/package-lock.json
include changedetection.py
global-exclude *.pyc
global-exclude node_modules
global-exclude venv
global-exclude venv

@@ -1,38 +1,48 @@

|

||||

# changedetection.io

|

||||

|

||||

<a href="https://hub.docker.com/r/dgtlmoon/changedetection.io" target="_blank" title="Change detection docker hub">

|

||||

<img src="https://img.shields.io/docker/pulls/dgtlmoon/changedetection.io" alt="Docker Pulls"/>

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/dgtlmoon/changedetection.io" target="_blank" title="Change detection docker hub">

|

||||

<img src="https://img.shields.io/github/v/release/dgtlmoon/changedetection.io" alt="Change detection latest tag version"/>

|

||||

</a>

|

||||

## Web Site Change Detection, Monitoring and Notification.

|

||||

|

||||

## Self-hosted open source change monitoring of web pages.

|

||||

Live your data-life pro-actively, track website content changes and receive notifications via Discord, Email, Slack, Telegram and 70+ more

|

||||

|

||||

_Know when web pages change! Stay ontop of new information!_

|

||||

|

||||

Live your data-life *pro-actively* instead of *re-actively*, do not rely on manipulative social media for consuming important information.

|

||||

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start?src=pip)

|

||||

|

||||

|

||||

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />

|

||||

[**Don't have time? Let us host it for you! try our extremely affordable subscription use our proxies and support!**](https://lemonade.changedetection.io/start)

|

||||

|

||||

|

||||

#### Example use cases

|

||||

|

||||

Know when ...

|

||||

|

||||

- Government department updates (changes are often only on their websites)

|

||||

- Local government news (changes are often only on their websites)

|

||||

- Products and services have a change in pricing

|

||||

- _Out of stock notification_ and _Back In stock notification_

|

||||

- Governmental department updates (changes are often only on their websites)

|

||||

- New software releases, security advisories when you're not on their mailing list.

|

||||

- Festivals with changes

|

||||

- Realestate listing changes

|

||||

- Know when your favourite whiskey is on sale, or other special deals are announced before anyone else

|

||||

- COVID related news from government websites

|

||||

- University/organisation news from their website

|

||||

- Detect and monitor changes in JSON API responses

|

||||

- API monitoring and alerting

|

||||

- JSON API monitoring and alerting

|

||||

- Changes in legal and other documents

|

||||

- Trigger API calls via notifications when text appears on a website

|

||||

- Glue together APIs using the JSON filter and JSON notifications

|

||||

- Create RSS feeds based on changes in web content

|

||||

- Monitor HTML source code for unexpected changes, strengthen your PCI compliance

|

||||

- You have a very sensitive list of URLs to watch and you do _not_ want to use the paid alternatives. (Remember, _you_ are the product)

|

||||

|

||||

_Need an actual Chrome runner with Javascript support? We support fetching via WebDriver and Playwright!</a>_

#### Key Features

- Lots of trigger filters, such as "Trigger on text", "Remove text by selector", "Ignore text", "Extract text", also using regular expressions!
- Target elements with XPath and CSS selectors; easily monitor complex JSON with JSONPath or jq
- Switch between fast non-JS and Chrome JS based "fetchers"
- Easily specify how often a site should be checked
- Execute JS before extracting text (good for logging in, see examples in the UI!)
- Override request headers, specify `POST`, `GET` or other methods
- Use the "Visual Selector" to help target specific elements
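The check frequency is expressed as a `time_between_check` mapping of `weeks`, `days`, `hours`, `minutes` and `seconds`. These are the same keys the API schema in `api_schema.py` accepts, each an integer or null. Below is a minimal sketch of collapsing such a mapping into one interval in seconds. The helper name `interval_seconds` is hypothetical, and treating a null unit as zero is an assumption, not the project's documented behaviour.

```python
from datetime import timedelta
from typing import Mapping, Optional

# Same unit names as the time_between_check properties in api_schema.py
UNITS = ("weeks", "days", "hours", "minutes", "seconds")


def interval_seconds(time_between_check: Mapping[str, Optional[int]]) -> int:
    """Hypothetical helper: collapse a time_between_check mapping into seconds."""
    unknown = set(time_between_check) - set(UNITS)
    if unknown:
        # Mirrors "additionalProperties": False in the schema
        raise ValueError(f"unexpected keys: {sorted(unknown)}")
    # Assumption: a missing or null unit counts as zero
    parts = {unit: time_between_check.get(unit) or 0 for unit in UNITS}
    return int(timedelta(**parts).total_seconds())


print(interval_seconds({"hours": 3, "minutes": 30, "days": None}))  # 12600
```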

**Get monitoring now!**

```bash
$ pip3 install changedetection.io
```

Specify a target for the *datastore path* with `-d` (required) and a *listening port* with `-p` (defaults to `5000`).

@@ -44,28 +54,5 @@ $ changedetection.io -d /path/to/empty/data/dir -p 5000

Then visit http://127.0.0.1:5000; you should now be able to access the UI.

### Features

- Website monitoring
- Change detection of content and analyses
- Filters on change (Select by CSS or JSON)
- Triggers (Wait for text, wait for regex)
- Notification support
- JSON API Monitoring
- Parse JSON embedded in HTML
- (Reverse) Proxy support
- JavaScript support via WebDriver
- Raspberry Pi (arm v6/v7/64 support)
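One common way to detect a content change is to hash the filtered text of each fetch and compare the hash with the one stored from the previous check. The watch state in `api_schema.py` does carry a `previous_md5` field. However, the functions and the whitespace normalisation below are illustrative assumptions, not the project's code.

```python
import hashlib
from typing import Optional, Tuple


def fingerprint(text: str) -> str:
    # Assumption: ignore blank lines and surrounding whitespace, so reflowed markup alone is not a change
    normalised = "\n".join(line.strip() for line in text.splitlines() if line.strip())
    return hashlib.md5(normalised.encode("utf-8")).hexdigest()


def check(text: str, previous_md5: Optional[str]) -> Tuple[bool, str]:
    """Return (changed, new_md5); the very first check never reports a change."""
    current = fingerprint(text)
    changed = previous_md5 is not None and current != previous_md5
    return changed, current


changed, md5 = check("Price: $10\n", None)
print(changed)  # False (first fetch)
changed, md5 = check("  Price: $10  \n\n", md5)
print(changed)  # False (whitespace only)
changed, md5 = check("Price: $12\n", md5)
print(changed)  # True
```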

See https://github.com/dgtlmoon/changedetection.io for more information.

### Support us

Do you use changedetection.io to make money? Does it save you time or money? Does it make your life easier? Less stressful? Remember, we write this software when we should be doing actual paid work; we have to buy food and pay rent just like you.

Please support us, even small amounts help a LOT.

BTC `1PLFN327GyUarpJd7nVe7Reqg9qHx5frNn`

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/btc-support.png" style="max-width:50%;" alt="Support us!" />

README.md

@@ -1,75 +1,109 @@

# changedetection.io

## Web Site Change Detection, Monitoring and Notification.

**_Detect website content changes and perform meaningful actions - trigger notifications via Discord, Email, Slack, Telegram, API calls and many more._**

_Live your data-life pro-actively._

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start?src=github)

[![Release Version][release-shield]][release-link] [![Docker Pulls][docker-pulls]][docker-link] [![License][license-shield]](LICENSE.md)

## Self-Hosted, Open Source, Change Monitoring of Web Pages

[**Don't have time? Let us host it for you! Try our $8.99/month subscription - use our proxies and support!**](https://lemonade.changedetection.io/start), _half the price of other website change monitoring services, with unlimited watches & checks!_

_Know when web pages change! Stay on top of new information!_

Live your data-life *pro-actively* instead of *re-actively*.

Free, open-source web page monitoring, notification and change detection. Don't have time? [Try our $6.99/month plan - unlimited checks and watches!](https://lemonade.changedetection.io/start)

- Chrome browser included.
- Super fast, no registration needed setup.
- Get started watching and receiving website change notifications straight away.

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start)

### Target specific parts of the webpage using the Visual Selector tool.

Available when connected to a <a href="https://github.com/dgtlmoon/changedetection.io/wiki/Playwright-content-fetcher">Playwright content fetcher</a> (included as part of our subscription service).

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/visualselector-anim.gif" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />](https://lemonade.changedetection.io/start?src=github)

### Easily see what changed, examine by word, line, or individual character.

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot-diff.png" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />](https://lemonade.changedetection.io/start?src=github)
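To give a rough idea of a word-level comparison, the sketch below runs Python's standard `difflib.SequenceMatcher` over whitespace-split tokens. It illustrates the concept only and is not the diff engine the UI actually uses. The helper name `word_diff` is hypothetical.

```python
import difflib
from typing import List, Tuple


def word_diff(old: str, new: str) -> List[Tuple[str, str, str]]:
    """Return (op, old_words, new_words) for every non-equal run of words."""
    a, b = old.split(), new.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    changes = []
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op != "equal":  # 'replace', 'delete' or 'insert'
            changes.append((op, " ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return changes


print(word_diff("Only 3 left in stock", "Only 1 left in stock"))
# [('replace', '3', '1')]
```

Splitting on characters instead of words (passing the raw strings as `a` and `b`) gives the per-character view in the same way.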

**Get your own private instance now! Let us host it for you!**

### Perform interactive browser steps

Fill in text boxes, click buttons and more to set up your change detection scenario.

Using the **Browser Steps** configuration, add basic steps before performing change detection, such as logging into websites, adding a product to a cart, accepting cookie notices, entering dates and refining searches.

[<img src="docs/browsersteps-anim.gif" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Website change detection with interactive browser steps, login, cookies etc" />](https://lemonade.changedetection.io/start?src=github)

After the **Browser Steps** have run, visit the **Visual Selector** tab to refine the content you're interested in.
Requires Playwright to be enabled.

[_Let us host your own private instance - we accept PayPal and Bitcoin. Support the further development of changedetection.io!_](https://lemonade.changedetection.io/start)

- Automatic Updates, Automatic Backups, No Heroku "paused application", don't miss a change!
- JavaScript browser included
- Unlimited checks and watches!

### Example use cases

- Products and services have a change in pricing
- _Out of stock notification_ and _Back In stock notification_
- Monitor and track PDF file changes, know when a PDF file has text changes.
- Governmental department updates (changes are often only on their websites)
- New software releases, security advisories when you're not on their mailing list.
- Festivals with changes
- Discogs restock alerts and monitoring
- Real estate listing changes
- Know when your favourite whiskey is on sale, or other special deals are announced before anyone else
- COVID related news from government websites
- University/organisation news from their website
- Detect and monitor changes in JSON API responses
- API monitoring and alerting
- JSON API monitoring and alerting
- Changes in legal and other documents
- Trigger API calls via notifications when text appears on a website
- Glue together APIs using the JSON filter and JSON notifications
- Create RSS feeds based on changes in web content
- Monitor HTML source code for unexpected changes, strengthen your PCI compliance
- You have a very sensitive list of URLs to watch and you do _not_ want to use the paid alternatives. (Remember, _you_ are the product)
- Get notified when certain keywords appear in Twitter search results
- Proactively search for jobs, get notified when companies update their careers page, search job portals for keywords.
- Get alerts when new job positions are open on Bamboo HR and other job platforms

## Screenshots

Examining differences in content.

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot-diff.png" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />

_Need an actual Chrome runner with JavaScript support? We support fetching via WebDriver and Playwright!_

#### Key Features

- Lots of trigger filters, such as "Trigger on text", "Remove text by selector", "Ignore text", "Extract text", also using regular expressions!
- Target elements with XPath and CSS selectors; easily monitor complex JSON with JSONPath or jq
- Switch between fast non-JS and Chrome JS based "fetchers"
- Track changes in PDF files (monitor text changes in the PDF, and also monitor PDF file size and checksums)
- Easily specify how often a site should be checked
- Execute JS before extracting text (good for logging in, see examples in the UI!)
- Override request headers, specify `POST`, `GET` or other methods
- Use the "Visual Selector" to help target specific elements
- Configurable [proxy per watch](https://github.com/dgtlmoon/changedetection.io/wiki/Proxy-configuration)
- Send a screenshot with the notification when a change is detected in the web page
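The "Ignore text" and "Trigger on text" filters can be pictured as a line-based pass over the extracted text. The sketch below is a simplified model, not the application's code. It assumes two things for illustration only: an entry wrapped in slashes (`/.../`) is a case-insensitive regular expression, and anything else is a case-insensitive substring. Check the help text in the UI for the exact rules. The names `filtered_text` and `triggered` are hypothetical.

```python
import re
from typing import Iterable


def _matches(line: str, rule: str) -> bool:
    # Assumption: "/pattern/" means regex, anything else is a plain substring
    if len(rule) > 2 and rule.startswith("/") and rule.endswith("/"):
        return re.search(rule[1:-1], line, flags=re.IGNORECASE) is not None
    return rule.lower() in line.lower()


def filtered_text(text: str, ignore: Iterable[str] = ()) -> str:
    """Drop every non-empty line that matches any ignore rule."""
    rules = list(ignore)
    lines = [l for l in text.splitlines() if l.strip()]
    return "\n".join(l for l in lines if not any(_matches(l, r) for r in rules))


def triggered(text: str, trigger: Iterable[str] = ()) -> bool:
    """With no trigger rules, always True; otherwise True once any line matches one."""
    rules = list(trigger)
    return not rules or any(_matches(l, r) for l in text.splitlines() for r in rules)


page = "Widget X\nIn stock\nVisitors today: 1234\nPrice: $19.99"
clean = filtered_text(page, ignore=[r"/visitors today: \d+/"])
print(clean.splitlines())               # ['Widget X', 'In stock', 'Price: $19.99']
print(triggered(clean, ["in stock"]))   # True
print(triggered(clean, ["/sold out/"])) # False
```

Ignoring a volatile counter like this keeps it from producing a "change" on every check.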

We [recommend and use Bright Data](https://brightdata.grsm.io/n0r16zf7eivq) global proxy services; Bright Data will match any first deposit up to $100 using our signup link.

Please :star: star :star: this project and help it grow! https://github.com/dgtlmoon/changedetection.io/

## Installation

### Docker

With Docker Compose, just clone this repository and run:

```bash
$ docker-compose up -d
```

Docker standalone:

```bash
$ docker run -d --restart always -p "127.0.0.1:5000:5000" -v datastore-volume:/datastore --name changedetection.io dgtlmoon/changedetection.io
```

The `:latest` tag is our latest stable release; the `:dev` tag is our bleeding-edge `master` branch.

Alternative Docker repository over at GHCR: [ghcr.io/dgtlmoon/changedetection.io](https://ghcr.io/dgtlmoon/changedetection.io)

### Windows

See the install instructions at the wiki https://github.com/dgtlmoon/changedetection.io/wiki/Microsoft-Windows

@@ -92,8 +126,8 @@ _Now with per-site configurable support for using a fast built in HTTP fetcher o

### Docker

```
docker pull dgtlmoon/changedetection.io
docker kill $(docker ps -a -f name=changedetection.io -q)
docker rm $(docker ps -a -f name=changedetection.io -q)
docker run -d --restart always -p "127.0.0.1:5000:5000" -v datastore-volume:/datastore --name changedetection.io dgtlmoon/changedetection.io
```

@@ -107,9 +141,9 @@ See the wiki for more information https://github.com/dgtlmoon/changedetection.io

## Filters

XPath, JSONPath, jq, and CSS support comes baked in! You can be as specific as you need, and you can use XPath exported from various XPath element query creation tools.

(We support LXML `re:test`, `re:match` and `re:replace`.)

## Notifications

@@ -131,52 +165,84 @@ Just some examples

<a href="https://github.com/caronc/apprise#popular-notification-services">And everything else in this list!</a>

|

||||

|

||||

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot-notifications.png" style="max-width:100%;" alt="Self-hosted web page change monitoring notifications" title="Self-hosted web page change monitoring notifications" />

|

||||

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot-notifications.png" style="max-width:100%;" alt="Self-hosted web page change monitoring notifications" title="Self-hosted web page change monitoring notifications" />

|

||||

|

||||

Now you can also customise your notification content!

|

||||

Now you can also customise your notification content and use <a target="_new" href="https://jinja.palletsprojects.com/en/3.0.x/templates/">Jinja2 templating</a> for their title and body!

|

||||

|

||||

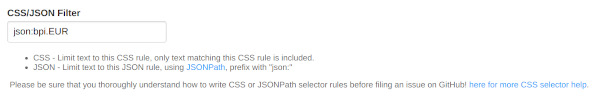

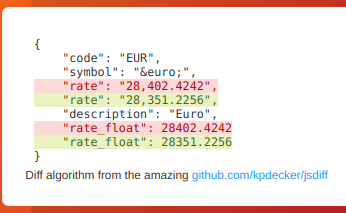

## JSON API Monitoring

|

||||

|

||||

Detect changes and monitor data in JSON API's by using the built-in JSONPath selectors as a filter / selector.

|

||||

Detect changes and monitor data in JSON API's by using either JSONPath or jq to filter, parse, and restructure JSON as needed.

|

||||

|

||||

|

||||

|

||||

|

||||

This will re-parse the JSON and apply formatting to the text, making it super easy to monitor and detect changes in JSON API results
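Re-parsing and re-formatting means that two responses that differ only in key order or whitespace normalise to identical text, so only real value changes show up in the diff. Below is a sketch with the standard `json` module. The exact formatting options the application uses (indent width, key sorting) are assumptions here, and `normalise_json` is a hypothetical name.

```python
import json


def normalise_json(raw: str) -> str:
    # Parse, then re-serialise with a stable key order and one value per line
    return json.dumps(json.loads(raw), indent=2, sort_keys=True, ensure_ascii=False)


a = '{"price": 23.5, "name": "Formula 800g"}'
b = '{ "name":"Formula 800g",\n  "price":23.50 }'
c = '{"name": "Formula 800g", "price": 24.0}'

print(normalise_json(a) == normalise_json(b))  # True: same data, different layout
print(normalise_json(a) == normalise_json(c))  # False: the price changed
```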

### JSONPath or jq?

For more complex parsing, filtering, and modifying of JSON data, jq is recommended because of its built-in operators and functions. Refer to the [documentation](https://stedolan.github.io/jq/manual/) for more specific information on jq.

One big advantage of `jq` is that you can use logic in your JSON filter, such as only showing items with a value greater than or less than a threshold.

See the wiki https://github.com/dgtlmoon/changedetection.io/wiki/JSON-Selector-Filter-help for more information and examples.
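To make the "greater than/less than" idea concrete: a jq filter such as `jq:.items[] | select(.price < 50)` keeps only the matching entries. The Python below reproduces that selection with a list comprehension, so the logic is visible without jq installed. The `items`, `name` and `price` fields are made-up sample data, not a real API response.

```python
import json

response = json.loads("""
{"items": [
  {"name": "Keyboard", "price": 45.0},
  {"name": "Monitor",  "price": 199.0},
  {"name": "Mouse",    "price": 19.5}
]}
""")

# Same selection as the jq expression: .items[] | select(.price < 50)
cheap = [item for item in response["items"] if item["price"] < 50]

print([item["name"] for item in cheap])  # ['Keyboard', 'Mouse']
```

Because only the filtered output is compared between checks, a price change on the "Monitor" entry in this sample would not show up as a difference.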

### Parse JSON embedded in HTML!

When you enable a `json:` or `jq:` filter, you can even automatically extract and parse embedded JSON inside an HTML page! Amazingly handy for sites that build content based on JSON, such as many e-commerce websites.

```
<html>
...
<script type="application/ld+json">
{
    "@context":"http://schema.org/",
    "@type":"Product",
    "offers":{
        "@type":"Offer",
        "availability":"http://schema.org/InStock",
        "price":"3949.99",
        "priceCurrency":"USD",
        "url":"https://www.newegg.com/p/3D5-000D-001T1"
    },
    "description":"Cobratype King Cobra Hero Desktop Gaming PC",
    "name":"Cobratype King Cobra Hero Desktop Gaming PC",
    "sku":"3D5-000D-001T1",
    "itemCondition":"NewCondition"
}
</script>
```

`json:$..price` or `jq:.. | .price? // empty` would give `3949.99`, or you can extract the whole structure (use a JSONPath testing website to validate your expression).
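The sketch below imitates what a `json:$..price` filter does with a page like the one above. It collects the `<script type="application/ld+json">` blocks with the standard-library `HTMLParser`, parses each block, and walks the result recursively (the `..` recursive-descent step), collecting every `price` value. It is an illustration only, not the application's implementation. The class and function names are hypothetical.

```python
import json
from html.parser import HTMLParser
from typing import Any, List


class LdJsonCollector(HTMLParser):
    """Collect the text of every <script type="application/ld+json"> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_ldjson = False
        self.blocks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_ldjson = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_ldjson = False

    def handle_data(self, data):
        if self._in_ldjson:
            self.blocks[-1] += data  # script text may arrive in several chunks


def find_key(node: Any, key: str) -> List[Any]:
    """Recursive descent, like the JSONPath `$..key`."""
    found = []
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key:
                found.append(v)
            found.extend(find_key(v, key))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_key(item, key))
    return found


html = """<html><body>
<script type="application/ld+json">
{"@type": "Product", "name": "Gaming PC",
 "offers": {"@type": "Offer", "price": "3949.99", "priceCurrency": "USD"}}
</script>
</body></html>"""

collector = LdJsonCollector()
collector.feed(html)
collector.close()
prices = [p for block in collector.blocks for p in find_key(json.loads(block), "price")]
print(prices)  # ['3949.99']
```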

The application can also notify you when it is able to follow this information automatically.

## Proxy Configuration

See the wiki https://github.com/dgtlmoon/changedetection.io/wiki/Proxy-configuration. We also support using [Bright Data proxy services where possible](https://github.com/dgtlmoon/changedetection.io/wiki/Proxy-configuration#brightdata-proxy-support).

## Raspberry Pi support?

Raspberry Pi and `linux/arm/v6`, `linux/arm/v7` and `arm64` devices are supported! See the wiki for [details](https://github.com/dgtlmoon/changedetection.io/wiki/Fetching-pages-with-WebDriver).

## API Support

Supports managing the website watch list [via our API](https://changedetection.io/docs/api_v1/index.html).
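Below is a sketch of adding a watch from a script using only the standard library. It builds the request without sending it. The `/api/v1/watch` path, the `x-api-key` header and the JSON body shape (`url`, optional `tag`) are assumptions to verify against the API documentation linked above. `API_KEY` is a placeholder, and `create_watch_request` is a hypothetical helper.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:5000"  # default listening port from the install steps
API_KEY = "YOUR-API-KEY"            # placeholder, not a real key


def create_watch_request(url: str, tag: str = "") -> urllib.request.Request:
    """Build (but don't send) a POST that would add a watch.

    Endpoint path, header name and body fields are assumptions; check the API docs.
    """
    body = {"url": url}
    if tag:
        body["tag"] = tag
    return urllib.request.Request(
        f"{BASE_URL}/api/v1/watch",
        data=json.dumps(body).encode("utf-8"),
        headers={"x-api-key": API_KEY, "Content-Type": "application/json"},
        method="POST",
    )


req = create_watch_request("https://example.com/pricing", tag="prices")
print(req.get_method(), req.full_url)  # POST http://127.0.0.1:5000/api/v1/watch
# To send it against a running instance:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status, resp.read().decode())
```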

## Support us

Do you use changedetection.io to make money? Does it save you time or money? Does it make your life easier? Less stressful? Remember, we write this software when we should be doing actual paid work; we have to buy food and pay rent just like you.

Please support us, even small amounts help a LOT.

Firstly, consider taking out a [change detection monthly subscription - unlimited checks and watches](https://lemonade.changedetection.io/start). Even if you don't use it, you still get the warm fuzzy feeling of helping out the project. (And who knows, you might just use it!)

Or directly donate an amount via [PayPal](https://www.paypal.com/donate/?hosted_button_id=7CP6HR9ZCNDYJ)

Or BTC `1PLFN327GyUarpJd7nVe7Reqg9qHx5frNn`

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/btc-support.png" style="max-width:50%;" alt="Support us!" />

## Commercial Support

@@ -188,5 +254,5 @@ I offer commercial support, this software is depended on by network security, ae

[test-shield]: https://github.com/dgtlmoon/changedetection.io/actions/workflows/test-only.yml/badge.svg?branch=master
[license-shield]: https://img.shields.io/github/license/dgtlmoon/changedetection.io.svg?style=for-the-badge
[release-link]: https://github.com/dgtlmoon/changedetection.io/releases
[docker-link]: https://hub.docker.com/r/dgtlmoon/changedetection.io

@@ -6,6 +6,39 @@

# Read more https://github.com/dgtlmoon/changedetection.io/wiki

from changedetectionio import changedetection
import multiprocessing
import sys
import os

def sigchld_handler(_signo, _stack_frame):
    import sys
    print('Shutdown: Got SIGCHLD')
    # https://stackoverflow.com/questions/40453496/python-multiprocessing-capturing-signals-to-restart-child-processes-or-shut-do
    pid, status = os.waitpid(-1, os.WNOHANG | os.WUNTRACED | os.WCONTINUED)

    print('Sub-process: pid %d status %d' % (pid, status))
    if status != 0:
        sys.exit(1)

    raise SystemExit

if __name__ == '__main__':

    #signal.signal(signal.SIGCHLD, sigchld_handler)

    # The only way I could find to get Flask to shutdown, is to wrap it and then rely on the subsystem issuing SIGTERM/SIGKILL
    parse_process = multiprocessing.Process(target=changedetection.main)
    parse_process.daemon = True
    parse_process.start()
    import time

    try:
        while True:
            time.sleep(1)
            if not parse_process.is_alive():
                # Process died/crashed for some reason, exit with error set
                sys.exit(1)

    except KeyboardInterrupt:
        #parse_process.terminate() not needed, because this process will issue it to the sub-process anyway
        print ("Exited - CTRL+C")
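The wrapper above supervises the app by running it in a child process and polling `is_alive()` about once a second, exiting with an error if the child dies. Below is a self-contained sketch of the same watchdog idea. It deliberately swaps in `subprocess.Popen` for `multiprocessing.Process` so it can run a throwaway child. The `supervise` helper and its `max_checks` bound are hypothetical; the bound exists only so the demo terminates.

```python
import subprocess
import sys
import time
from typing import List, Optional


def supervise(cmd: List[str], poll_interval: float = 0.1, max_checks: int = 100) -> Optional[int]:
    """Poll a child like the wrapper's is_alive() loop and return its exit code once it exits.

    Returns None (after terminating the child) if it is still running after max_checks polls.
    """
    child = subprocess.Popen(cmd)
    try:
        for _ in range(max_checks):
            time.sleep(poll_interval)
            code = child.poll()  # None while the child is still running
            if code is not None:
                return code
        return None
    finally:
        if child.poll() is None:  # still alive: clean up so no orphan is left behind
            child.terminate()
            child.wait()


# A child that "crashes" with exit status 3; the wrapper would then call sys.exit(1)
print(supervise([sys.executable, "-c", "import sys; sys.exit(3)"]))  # 3
```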

changedetectionio/.gitignore

@@ -1 +1,2 @@

test-datastore
package-lock.json

changedetectionio/api/__init__.py

changedetectionio/api/api_schema.py

@@ -0,0 +1,117 @@

# Responsible for building the storage dict into a set of rules ("JSON Schema") acceptable via the API
# Probably other ways to solve this when the backend switches to some ORM

def build_time_between_check_json_schema():
    # Setup time between check schema
    schema_properties_time_between_check = {
        "type": "object",
        "additionalProperties": False,
        "properties": {}
    }
    for p in ['weeks', 'days', 'hours', 'minutes', 'seconds']:
        schema_properties_time_between_check['properties'][p] = {
            "anyOf": [
                {
                    "type": "integer"
                },
                {
                    "type": "null"
                }
            ]
        }

    return schema_properties_time_between_check


def build_watch_json_schema(d):
    # Base JSON schema
    schema = {
        'type': 'object',
        'properties': {},
    }

    for k, v in d.items():
        # @todo 'integer' is not covered here because it's almost always for internal usage

        if isinstance(v, type(None)):
            schema['properties'][k] = {
                "anyOf": [
                    {"type": "null"},
                ]
            }
        elif isinstance(v, list):
            schema['properties'][k] = {
                "anyOf": [
                    {"type": "array",
                     # Always is an array of strings, like text or regex or something
                     "items": {
                         "type": "string",
                         "maxLength": 5000
                     }
                     },
                ]
            }
        elif isinstance(v, bool):
            schema['properties'][k] = {
                "anyOf": [
                    {"type": "boolean"},
                ]
            }
        elif isinstance(v, str):
            schema['properties'][k] = {
                "anyOf": [
                    {"type": "string",
                     "maxLength": 5000},
                ]
            }

    # Can also be a string (or None by default above)
    for v in ['body',
              'notification_body',
              'notification_format',
              'notification_title',
              'proxy',
              'tag',
              'title',
              'webdriver_js_execute_code'
              ]:
        schema['properties'][v]['anyOf'].append({'type': 'string', "maxLength": 5000})

    # None or Boolean
    schema['properties']['track_ldjson_price_data']['anyOf'].append({'type': 'boolean'})

    schema['properties']['method'] = {"type": "string",
                                      "enum": ["GET", "POST", "DELETE", "PUT"]
                                      }

    schema['properties']['fetch_backend']['anyOf'].append({"type": "string",
                                                           "enum": ["html_requests", "html_webdriver"]
                                                           })

    # All headers must be key/value type dict
    schema['properties']['headers'] = {
        "type": "object",
        "patternProperties": {
            # Should always be a string:string type value
            ".*": {"type": "string"},
        }
    }

    from changedetectionio.notification import valid_notification_formats

    schema['properties']['notification_format'] = {'type': 'string',
                                                   'enum': list(valid_notification_formats.keys())
                                                   }

    # Stuff that shouldn't be available but is just state-storage
    for v in ['previous_md5', 'last_error', 'has_ldjson_price_data', 'previous_md5_before_filters', 'uuid']:
        del schema['properties'][v]

    schema['properties']['webdriver_delay']['anyOf'].append({'type': 'integer'})

    schema['properties']['time_between_check'] = build_time_between_check_json_schema()

    # headers ?

    return schema
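To see what the generated rules accept, below is a deliberately tiny validator. It covers only the keywords these schemas use most (`type`, `anyOf`, `enum`, `maxLength`, `properties`, `additionalProperties`) and is a teaching sketch, not the validator the API uses. A real deployment would rely on a complete JSON Schema implementation. The `is_valid` helper is hypothetical; the time-between-check builder is copied in compact form from `api_schema.py` above so the block runs on its own.

```python
from typing import Any, Dict

# JSON Schema type names -> Python types (bools are excluded from "integer" below)
_TYPES = {"object": dict, "array": list, "string": str, "boolean": bool,
          "integer": int, "null": type(None)}


def is_valid(value: Any, schema: Dict[str, Any]) -> bool:
    """Minimal subset: type, anyOf, enum, maxLength, properties, additionalProperties."""
    if "anyOf" in schema:  # in these schemas anyOf always stands alone
        return any(is_valid(value, sub) for sub in schema["anyOf"])
    expected = schema.get("type")
    if expected is not None:
        if expected == "integer" and isinstance(value, bool):
            return False  # JSON booleans are not integers
        if not isinstance(value, _TYPES[expected]):
            return False
    if "enum" in schema and value not in schema["enum"]:
        return False
    if isinstance(value, str) and len(value) > schema.get("maxLength", float("inf")):
        return False
    if isinstance(value, dict):
        props = schema.get("properties", {})
        for key, item in value.items():
            if key in props:
                if not is_valid(item, props[key]):
                    return False
            elif schema.get("additionalProperties", True) is False:
                return False
    return True


def build_time_between_check_json_schema():
    # Same shape as the function in api_schema.py
    schema = {"type": "object", "additionalProperties": False, "properties": {}}
    for p in ['weeks', 'days', 'hours', 'minutes', 'seconds']:
        schema['properties'][p] = {"anyOf": [{"type": "integer"}, {"type": "null"}]}
    return schema


tbc = build_time_between_check_json_schema()
print(is_valid({"hours": 3, "minutes": None}, tbc))  # True
print(is_valid({"hours": "3"}, tbc))                 # False: string, not integer
print(is_valid({"fortnights": 1}, tbc))              # False: additionalProperties is False
```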
