Compare commits

1 commit: watch-queu ... quick-setu

| Author | SHA1 | Date |
|---|---|---|
|  | 30b0130837 |  |

.github/ISSUE_TEMPLATE/bug_report.md (vendored, 11 lines changed)

@@ -1,9 +1,9 @@
---
name: Bug report
about: Create a bug report, if you don't follow this template, your report will be DELETED
about: Create a report to help us improve
title: ''
labels: 'triage'
assignees: 'dgtlmoon'
labels: ''
assignees: ''

---

@@ -11,18 +11,15 @@ assignees: 'dgtlmoon'
A clear and concise description of what the bug is.

**Version**
*Exact version* in the top right area: 0....
In the top right area: 0....

**To Reproduce**

Steps to reproduce the behavior:
1. Go to '...'
2. Click on '....'
3. Scroll down to '....'
4. See error

! ALWAYS INCLUDE AN EXAMPLE URL WHERE IT IS POSSIBLE TO RE-CREATE THE ISSUE - USE THE 'SHARE WATCH' FEATURE AND PASTE IN THE SHARE-LINK!

**Expected behavior**
A clear and concise description of what you expected to happen.

.github/ISSUE_TEMPLATE/feature_request.md (vendored, 4 lines changed)

@@ -1,8 +1,8 @@
---
name: Feature request
about: Suggest an idea for this project
title: '[feature]'
labels: 'enhancement'
title: ''
labels: ''
assignees: ''

---

.github/workflows/containers.yml (vendored, 15 lines changed)

@@ -85,8 +85,8 @@ jobs:
version: latest
driver-opts: image=moby/buildkit:master

# master branch -> :dev container tag
- name: Build and push :dev
# master always builds :latest
- name: Build and push :latest
id: docker_build
if: ${{ github.ref }} == "refs/heads/master"
uses: docker/build-push-action@v2

@@ -95,12 +95,12 @@ jobs:
file: ./Dockerfile
push: true
tags: |
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:dev,ghcr.io/${{ github.repository }}:dev
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:latest,ghcr.io/${{ github.repository }}:latest
platforms: linux/amd64,linux/arm64,linux/arm/v6,linux/arm/v7
cache-from: type=local,src=/tmp/.buildx-cache
cache-to: type=local,dest=/tmp/.buildx-cache

# A new tagged release is required, which builds :tag and :latest
# A new tagged release is required, which builds :tag
- name: Build and push :tag
id: docker_build_tag_release
if: github.event_name == 'release' && startsWith(github.event.release.tag_name, '0.')

@@ -110,10 +110,7 @@ jobs:
file: ./Dockerfile
push: true
tags: |
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:${{ github.event.release.tag_name }}
ghcr.io/dgtlmoon/changedetection.io:${{ github.event.release.tag_name }}
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:latest
ghcr.io/dgtlmoon/changedetection.io:latest
${{ secrets.DOCKER_HUB_USERNAME }}/changedetection.io:${{ github.event.release.tag_name }},ghcr.io/dgtlmoon/changedetection.io:${{ github.event.release.tag_name }}
platforms: linux/amd64,linux/arm64,linux/arm/v6,linux/arm/v7
cache-from: type=local,src=/tmp/.buildx-cache
cache-to: type=local,dest=/tmp/.buildx-cache

@@ -128,3 +125,5 @@ jobs:
key: ${{ runner.os }}-buildx-${{ github.sha }}
restore-keys: |
${{ runner.os }}-buildx-
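The tag routing described by the workflow comments can be modelled as a small pure function, which makes it easy to check which images a given event would push. This is a sketch, not part of the workflow: the name `image_tags` and its parameters are hypothetical, and it follows the reading of the diff that matches the README (master builds `:dev`; a `0.*` release builds `:<tag>` and moves `:latest`). One detail it models deliberately: as far as we understand GitHub's expression rules, `if: ${{ github.ref }} == "refs/heads/master"` is interpolated into a non-empty string, which is truthy, so that condition appears to pass on every ref; the usual form is `if: github.ref == 'refs/heads/master'`, and that comparison is what the function checks.

```python
from typing import List


def image_tags(event_name: str, ref: str, release_tag: str,
               dockerhub_user: str, repository: str) -> List[str]:
    """Hypothetical model of which image tags one workflow run pushes."""
    hub = f"{dockerhub_user}/changedetection.io"
    ghcr = f"ghcr.io/{repository}"

    # Release builds: only tags starting with '0.', mirroring
    # startsWith(github.event.release.tag_name, '0.') in the workflow.
    if event_name == "release" and release_tag.startswith("0."):
        # Assumption: a stable release also moves ':latest', as the README
        # describes ':latest' as the latest stable release.
        return [f"{hub}:{release_tag}", f"{ghcr}:{release_tag}",
                f"{hub}:latest", f"{ghcr}:latest"]

    # Builds from master get the bleeding-edge ':dev' tag. This is the
    # comparison the workflow's `if:` line is meant to express.
    if ref == "refs/heads/master":
        return [f"{hub}:dev", f"{ghcr}:dev"]

    # Any other event pushes nothing in this model.
    return []
```

Keeping the routing in one function means the "which tag does this event produce" question can be answered without triggering a real build.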

.gitignore (vendored, 1 line changed)

@@ -8,6 +8,5 @@ __pycache__
build
dist
venv
test-datastore
*.egg-info*
.vscode/settings.json

@@ -20,11 +20,6 @@ COPY requirements.txt /requirements.txt

RUN pip install --target=/dependencies -r /requirements.txt

# Playwright is an alternative to Selenium
# Excluded this package from requirements.txt to prevent arm/v6 and arm/v7 builds from failing
RUN pip install --target=/dependencies playwright~=1.24 \
    || echo "WARN: Failed to install Playwright. The application can still run, but the Playwright option will be disabled."

# Final image stage
FROM python:3.8-slim
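The build step above treats Playwright as optional: if the install fails on arm/v6 or arm/v7, the image still builds and the Playwright option is meant to be disabled at runtime. A minimal sketch of how application code might detect that, using only the standard library; `playwright_available`, `fetcher_choices` and the option labels they return are hypothetical names for illustration.

```python
import importlib.util


def playwright_available() -> bool:
    """Return True if the 'playwright' package can be imported here."""
    # find_spec() locates the package without importing it, so a missing
    # optional dependency yields None instead of raising ImportError.
    return importlib.util.find_spec("playwright") is not None


def fetcher_choices() -> list:
    """Hypothetical list of fetcher options to offer the user."""
    choices = ["html_requests"]  # plain HTTP fetcher, always available
    if playwright_available():
        # Only offered when the optional install in the image succeeded.
        choices.append("html_playwright")
    return choices
```

Checking for the package once, rather than wrapping every Playwright call in `try/except ImportError`, keeps the "disabled" state in one place.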

@@ -1,7 +1,5 @@
recursive-include changedetectionio/api *
recursive-include changedetectionio/templates *
recursive-include changedetectionio/static *
recursive-include changedetectionio/model *
include changedetection.py
global-exclude *.pyc
global-exclude node_modules

@@ -16,13 +16,6 @@ Live your data-life *pro-actively* instead of *re-actively*, do not rely on mani

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />

**Get your own private instance now! Let us host it for you!**

[**Try our $6.99/month subscription - unlimited checks, watches and notifications!**](https://lemonade.changedetection.io/start), choose from different geographical locations, let us handle everything for you.

#### Example use cases

Know when ...

@@ -65,3 +58,14 @@ Then visit http://127.0.0.1:5000 , You should now be able to access the UI.

See https://github.com/dgtlmoon/changedetection.io for more information.

### Support us

Do you use changedetection.io to make money? does it save you time or money? Does it make your life easier? less stressful? Remember, we write this software when we should be doing actual paid work, we have to buy food and pay rent just like you.

Please support us, even small amounts help a LOT.

BTC `1PLFN327GyUarpJd7nVe7Reqg9qHx5frNn`

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/btc-support.png" style="max-width:50%;" alt="Support us!" />

README.md (66 lines changed)

@@ -1,17 +1,26 @@
## Web Site Change Detection, Monitoring and Notification.

[**Try our $6.99/month subscription - Unlimited checks and watches!**](https://lemonade.changedetection.io/start)

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start)

# changedetection.io
[![Release Version][release-shield]][release-link] [![Docker Pulls][docker-pulls]][docker-link] [![License][license-shield]](LICENSE.md)

Know when important content changes, we support notifications via Discord, Telegram, Home-Assistant, Slack, Email and 70+ more
## Self-Hosted, Open Source, Change Monitoring of Web Pages

[**Try our $6.99/month subscription - unlimited checks and watches!**](https://lemonade.changedetection.io/start) , _half the price of other website change monitoring services and comes with unlimited watches & checks!_
_Know when web pages change! Stay ontop of new information!_

Live your data-life *pro-actively* instead of *re-actively*.

Free, Open-source web page monitoring, notification and change detection. Don't have time? [Try our $6.99/month plan - unlimited checks and watches!](https://lemonade.changedetection.io/start)

[<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot.png" style="max-width:100%;" alt="Self-hosted web page change monitoring" title="Self-hosted web page change monitoring" />](https://lemonade.changedetection.io/start)

**Get your own private instance now! Let us host it for you!**

[](https://lemonade.changedetection.io/start)

[_Let us host your own private instance - We accept PayPal and Bitcoin, Support the further development of changedetection.io!_](https://lemonade.changedetection.io/start)

@@ -23,58 +32,44 @@ Know when important content changes, we support notifications via Discord, Teleg
#### Example use cases

- Products and services have a change in pricing
- _Out of stock notification_ and _Back In stock notification_
- Governmental department updates (changes are often only on their websites)
- New software releases, security advisories when you're not on their mailing list.
- Festivals with changes
- Realestate listing changes
- Know when your favourite whiskey is on sale, or other special deals are announced before anyone else
- COVID related news from government websites
- University/organisation news from their website
- Detect and monitor changes in JSON API responses
- JSON API monitoring and alerting
- API monitoring and alerting
- Changes in legal and other documents
- Trigger API calls via notifications when text appears on a website
- Glue together APIs using the JSON filter and JSON notifications
- Create RSS feeds based on changes in web content
- Monitor HTML source code for unexpected changes, strengthen your PCI compliance
- You have a very sensitive list of URLs to watch and you do _not_ want to use the paid alternatives. (Remember, _you_ are the product)

_Need an actual Chrome runner with Javascript support? We support fetching via WebDriver!</a>_

## Screenshots

### Examine differences in content.
Examining differences in content.

Easily see what changed, examine by word, line, or individual character.

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot-diff.png" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />
<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot-diff.png" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />

Please :star: star :star: this project and help it grow! https://github.com/dgtlmoon/changedetection.io/

### Filter by elements using the Visual Selector tool.

Available when connected to a <a href="https://github.com/dgtlmoon/changedetection.io/wiki/Playwright-content-fetcher">playwright content fetcher</a> (included as part of our subscription service)

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/visualselector-anim.gif" style="max-width:100%;" alt="Self-hosted web page change monitoring context difference " title="Self-hosted web page change monitoring context difference " />

## Installation

### Docker

With Docker composer, just clone this repository and..

```bash
$ docker-compose up -d
```

Docker standalone
```bash
$ docker run -d --restart always -p "127.0.0.1:5000:5000" -v datastore-volume:/datastore --name changedetection.io dgtlmoon/changedetection.io
```

`:latest` tag is our latest stable release, `:dev` tag is our bleeding edge `master` branch.

### Windows

See the install instructions at the wiki https://github.com/dgtlmoon/changedetection.io/wiki/Microsoft-Windows

@@ -114,7 +109,7 @@ See the wiki for more information https://github.com/dgtlmoon/changedetection.io

|

||||

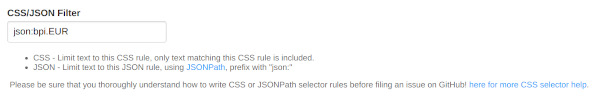

## Filters

|

||||

XPath, JSONPath and CSS support comes baked in! You can be as specific as you need, use XPath exported from various XPath element query creation tools.

|

||||

|

||||

(We support LXML `re:test`, `re:math` and `re:replace`.)

|

||||

(We support LXML re:test, re:math and re:replace.)

|

||||

|

||||

## Notifications

|

||||

|

||||
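The `re:` functions in the Filters section are the EXSLT regular-expression extensions that lxml makes available inside XPath; lxml documents them as `re:test`, `re:match` and `re:replace`, so `re:math` in the README text presumably refers to `re:match`. A minimal sketch using lxml directly (not changedetection.io's own filter code), assuming lxml is installed; the sample markup and the `in_stock_items` helper are hypothetical.

```python
from lxml import etree

# Namespace URI that lxml maps to its EXSLT regular-expression functions.
REGEXP_NS = {"re": "http://exslt.org/regular-expressions"}

SAMPLE = """
<ul>
  <li>Widget A - 3 in stock</li>
  <li>Widget B - out of stock</li>
  <li>Widget C - 12 in stock</li>
</ul>
"""


def in_stock_items(markup: str) -> list:
    """Return the text of <li> elements whose text ends in 'N in stock'."""
    root = etree.fromstring(markup.strip())
    # re:test(string, pattern) is true when the regular expression matches;
    # '.' passes the string value of the current <li> element.
    matches = root.xpath(r"//li[re:test(., '\d+ in stock$')]",
                         namespaces=REGEXP_NS)
    return [li.text for li in matches]
```

The same expression, `//li[re:test(., '\d+ in stock$')]`, is the kind of XPath filter the README says can be pasted in as a watch filter.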

@@ -136,7 +131,7 @@ Just some examples

<a href="https://github.com/caronc/apprise#popular-notification-services">And everything else in this list!</a>

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/screenshot-notifications.png" style="max-width:100%;" alt="Self-hosted web page change monitoring notifications" title="Self-hosted web page change monitoring notifications" />
<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/screenshot-notifications.png" style="max-width:100%;" alt="Self-hosted web page change monitoring notifications" title="Self-hosted web page change monitoring notifications" />

Now you can also customise your notification content!

@@ -144,11 +139,11 @@ Now you can also customise your notification content!

|

||||

|

||||

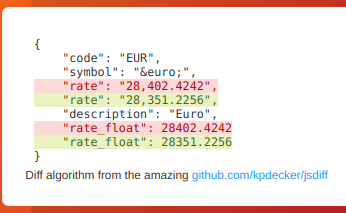

Detect changes and monitor data in JSON API's by using the built-in JSONPath selectors as a filter / selector.

|

||||

|

||||

|

||||

|

||||

|

||||

This will re-parse the JSON and apply formatting to the text, making it super easy to monitor and detect changes in JSON API results

|

||||

|

||||

|

||||

|

||||

|

||||

### Parse JSON embedded in HTML!

|

||||

|

||||
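The "re-parse the JSON and apply formatting" step in the JSON section can be sketched with the standard library: serialising the selected value with sorted keys and fixed indentation means that reordered keys or changed whitespace in the API response do not show up as differences, while real value changes do. This is a minimal sketch; `select_path` is a hypothetical and much simpler stand-in for JSONPath (dotted keys and list indices only), not the selector changedetection.io uses.

```python
import difflib
import json


def select_path(data, path: str):
    """Hypothetical dotted-path selector, e.g. 'product.offers.0.price'."""
    for part in path.split("."):
        # Numeric parts index into lists, everything else is a dict key.
        data = data[int(part)] if isinstance(data, list) else data[part]
    return data


def normalised(raw_json: str, path: str) -> str:
    """Re-parse the JSON, select a value and format it deterministically."""
    value = select_path(json.loads(raw_json), path)
    return json.dumps(value, indent=4, sort_keys=True)


def json_diff(old_raw: str, new_raw: str, path: str) -> list:
    """Return unified-diff lines between two normalised snapshots."""
    old = normalised(old_raw, path).splitlines()
    new = normalised(new_raw, path).splitlines()
    return list(difflib.unified_diff(old, new, "previous", "current",
                                     lineterm=""))
```

Because each key lands on its own line after formatting, a line-based diff points directly at the field that changed.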

@@ -177,14 +172,11 @@ Raspberry Pi and linux/arm/v6 linux/arm/v7 arm64 devices are supported! See the

Do you use changedetection.io to make money? does it save you time or money? Does it make your life easier? less stressful? Remember, we write this software when we should be doing actual paid work, we have to buy food and pay rent just like you.

Please support us, even small amounts help a LOT.

Firstly, consider taking out a [change detection monthly subscription - unlimited checks and watches](https://lemonade.changedetection.io/start) , even if you don't use it, you still get the warm fuzzy feeling of helping out the project. (And who knows, you might just use it!)
BTC `1PLFN327GyUarpJd7nVe7Reqg9qHx5frNn`

Or directly donate an amount PayPal [](https://www.paypal.com/donate/?hosted_button_id=7CP6HR9ZCNDYJ)

Or BTC `1PLFN327GyUarpJd7nVe7Reqg9qHx5frNn`

<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/docs/btc-support.png" style="max-width:50%;" alt="Support us!" />
<img src="https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/btc-support.png" style="max-width:50%;" alt="Support us!" />

## Commercial Support


@@ -6,36 +6,6 @@
# Read more https://github.com/dgtlmoon/changedetection.io/wiki

from changedetectionio import changedetection
import multiprocessing
import signal
import os

def sigchld_handler(_signo, _stack_frame):
    import sys
    print('Shutdown: Got SIGCHLD')
    # https://stackoverflow.com/questions/40453496/python-multiprocessing-capturing-signals-to-restart-child-processes-or-shut-do
    pid, status = os.waitpid(-1, os.WNOHANG | os.WUNTRACED | os.WCONTINUED)

    print('Sub-process: pid %d status %d' % (pid, status))
    if status != 0:
        sys.exit(1)

    raise SystemExit

if __name__ == '__main__':

    #signal.signal(signal.SIGCHLD, sigchld_handler)

    # The only way I could find to get Flask to shutdown, is to wrap it and then rely on the subsystem issuing SIGTERM/SIGKILL
    parse_process = multiprocessing.Process(target=changedetection.main)
    parse_process.daemon = True
    parse_process.start()
    import time

    try:
        while True:
            time.sleep(1)

    except KeyboardInterrupt:
        #parse_process.terminate() not needed, because this process will issue it to the sub-process anyway
        print ("Exited - CTRL+C")
    changedetection.main()

changedetectionio/.gitignore (vendored, 1 line changed)

@@ -1,2 +1 @@
test-datastore
package-lock.json

@@ -1,124 +0,0 @@
from flask_restful import abort, Resource
from flask import request, make_response
import validators
from . import auth


# https://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html

class Watch(Resource):
    def __init__(self, **kwargs):
        # datastore is a black box dependency
        self.datastore = kwargs['datastore']
        self.update_q = kwargs['update_q']

    # Get information about a single watch, excluding the history list (can be large)
    # curl http://localhost:4000/api/v1/watch/<string:uuid>
    # ?recheck=true
    @auth.check_token
    def get(self, uuid):
        from copy import deepcopy
        watch = deepcopy(self.datastore.data['watching'].get(uuid))
        if not watch:
            abort(404, message='No watch exists with the UUID of {}'.format(uuid))

        if request.args.get('recheck'):
            self.update_q.put((1, uuid))
            return "OK", 200

        # Return without history, get that via another API call
        watch['history_n'] = watch.history_n
        return watch

    @auth.check_token
    def delete(self, uuid):
        if not self.datastore.data['watching'].get(uuid):
            abort(400, message='No watch exists with the UUID of {}'.format(uuid))

        self.datastore.delete(uuid)
        return 'OK', 204


class WatchHistory(Resource):
    def __init__(self, **kwargs):
        # datastore is a black box dependency
        self.datastore = kwargs['datastore']

    # Get a list of available history for a watch by UUID
    # curl http://localhost:4000/api/v1/watch/<string:uuid>/history
    def get(self, uuid):
        watch = self.datastore.data['watching'].get(uuid)
        if not watch:
            abort(404, message='No watch exists with the UUID of {}'.format(uuid))
        return watch.history, 200


class WatchSingleHistory(Resource):
    def __init__(self, **kwargs):
        # datastore is a black box dependency
        self.datastore = kwargs['datastore']

    # Read a given history snapshot and return its content
    # <string:timestamp> or "latest"
    # curl http://localhost:4000/api/v1/watch/<string:uuid>/history/<int:timestamp>
    @auth.check_token
    def get(self, uuid, timestamp):
        watch = self.datastore.data['watching'].get(uuid)
        if not watch:
            abort(404, message='No watch exists with the UUID of {}'.format(uuid))

        if not len(watch.history):
            abort(404, message='Watch found but no history exists for the UUID {}'.format(uuid))

        if timestamp == 'latest':
            timestamp = list(watch.history.keys())[-1]

        with open(watch.history[timestamp], 'r') as f:
            content = f.read()

        response = make_response(content, 200)
        response.mimetype = "text/plain"
        return response


class CreateWatch(Resource):
    def __init__(self, **kwargs):
        # datastore is a black box dependency
        self.datastore = kwargs['datastore']
        self.update_q = kwargs['update_q']

    @auth.check_token
    def post(self):
        # curl http://localhost:4000/api/v1/watch -H "Content-Type: application/json" -d '{"url": "https://my-nice.com", "tag": "one, two" }'
        json_data = request.get_json()
        tag = json_data['tag'].strip() if json_data.get('tag') else ''

        if not validators.url(json_data['url'].strip()):
            return "Invalid or unsupported URL", 400

        extras = {'title': json_data['title'].strip()} if json_data.get('title') else {}

        new_uuid = self.datastore.add_watch(url=json_data['url'].strip(), tag=tag, extras=extras)
        self.update_q.put((1, new_uuid))
        return {'uuid': new_uuid}, 201

    # Return concise list of available watches and some very basic info
    # curl http://localhost:4000/api/v1/watch|python -mjson.tool
    # ?recheck_all=1 to recheck all
    @auth.check_token
    def get(self):
        list = {}
        for k, v in self.datastore.data['watching'].items():
            list[k] = {'url': v['url'],
                       'title': v['title'],
                       'last_checked': v['last_checked'],
                       'last_changed': v.last_changed,
                       'last_error': v['last_error']}

        if request.args.get('recheck_all'):
            for uuid in self.datastore.data['watching'].keys():
                self.update_q.put((1, uuid))
            return {'status': "OK"}, 200

        return list, 200
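The endpoints in the removed API module can be called from any HTTP client. A minimal sketch using only the standard library: it builds (but does not send) requests for the `/api/v1` routes and adds the `x-api-key` header that `check_token` reads. The base URL `http://localhost:4000` comes from the curl comments in that module (the README's examples serve the UI on port 5000), and the helper name `api_request` is hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def api_request(base_url: str, path: str, api_key: Optional[str] = None,
                method: str = "GET", payload: Optional[dict] = None,
                params: Optional[dict] = None) -> urllib.request.Request:
    """Build a Request for an /api/v1 endpoint (hypothetical helper)."""
    url = base_url.rstrip("/") + "/api/v1/" + path.lstrip("/")
    if params:
        # e.g. {"recheck": "true"} or {"recheck_all": "1"}
        url += "?" + urllib.parse.urlencode(params)

    data = None
    headers = {}
    if payload is not None:
        # CreateWatch.post() reads the body with request.get_json().
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    if api_key:
        # check_token() looks the key up under the 'x-api-key' header.
        headers["x-api-key"] = api_key

    return urllib.request.Request(url, data=data, headers=headers,
                                  method=method)
```

Sending is then a matter of `urllib.request.urlopen(req)`; for `CreateWatch.post()`, a `201` response body would carry the new watch's `uuid`.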

@@ -1,33 +0,0 @@
from flask import request, make_response, jsonify
from functools import wraps


# Simple API auth key comparison
# @todo - Maybe short lived token in the future?

def check_token(f):
    @wraps(f)
    def decorated(*args, **kwargs):
        datastore = args[0].datastore

        config_api_token_enabled = datastore.data['settings']['application'].get('api_access_token_enabled')
        if not config_api_token_enabled:
            return

        try:
            api_key_header = request.headers['x-api-key']
        except KeyError:
            return make_response(
                jsonify("No authorization x-api-key header."), 403
            )

        config_api_token = datastore.data['settings']['application'].get('api_access_token')

        if api_key_header != config_api_token:
            return make_response(
                jsonify("Invalid access - API key invalid."), 403
            )

        return f(*args, **kwargs)

    return decorated
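The `check_token` decorator follows a common pattern: read the configured key, compare it with the `x-api-key` header, and reject with 403 on a missing or wrong key. As listed, when `api_access_token_enabled` is false the wrapper returns `None` instead of calling the wrapped handler. The sketch here is framework-free so it runs on its own; the `headers` and `settings` arguments, the `require_api_key` and `list_watches` names, and the `(body, status)` return shape are assumptions standing in for Flask's request object and responses. It calls through when the check is disabled and uses `hmac.compare_digest`, which takes the same time regardless of where two keys differ.

```python
import hmac
from functools import wraps


def require_api_key(handler):
    """Hypothetical, framework-free version of an x-api-key check."""
    @wraps(handler)
    def decorated(headers: dict, settings: dict, *args, **kwargs):
        # When token checking is disabled, pass the request straight through.
        if not settings.get("api_access_token_enabled"):
            return handler(headers, settings, *args, **kwargs)

        # A real request object would match header names case-insensitively;
        # a plain dict here does not.
        supplied = headers.get("x-api-key")
        if supplied is None:
            return "No authorization x-api-key header.", 403

        expected = settings.get("api_access_token") or ""
        # Constant-time comparison of the supplied and configured keys.
        if not hmac.compare_digest(supplied.encode(), expected.encode()):
            return "Invalid access - API key invalid.", 403

        return handler(headers, settings, *args, **kwargs)

    return decorated


@require_api_key
def list_watches(headers, settings):
    """Hypothetical endpoint body."""
    return {"watches": []}, 200
```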

@@ -1,11 +0,0 @@
import apprise

# Create our AppriseAsset and populate it with some of our new values:
# https://github.com/caronc/apprise/wiki/Development_API#the-apprise-asset-object
asset = apprise.AppriseAsset(
    image_url_logo='https://raw.githubusercontent.com/dgtlmoon/changedetection.io/master/changedetectionio/static/images/avatar-256x256.png'
)

asset.app_id = "changedetection.io"
asset.app_desc = "ChangeDetection.io best and simplest website monitoring and change detection"
asset.app_url = "https://changedetection.io"

@@ -4,29 +4,14 @@

import getopt
import os
import signal
import sys

import eventlet
import eventlet.wsgi
from . import store, changedetection_app, content_fetcher
from . import store, changedetection_app
from . import __version__

# Only global so we can access it in the signal handler
datastore = None
app = None

def sigterm_handler(_signo, _stack_frame):
    global app
    global datastore
    # app.config.exit.set()
    print('Shutdown: Got SIGTERM, DB saved to disk')
    datastore.sync_to_json()
    # raise SystemExit

def main():
    global datastore
    global app
    ssl_mode = False
    host = ''
    port = os.environ.get('PORT') or 5000

@@ -50,6 +35,11 @@ def main():
    create_datastore_dir = False

    for opt, arg in opts:
        # if opt == '--purge':
        #     Remove history, the actual files you need to delete manually.
        #     for uuid, watch in datastore.data['watching'].items():
        #         watch.update({'history': {}, 'last_checked': 0, 'last_changed': 0, 'previous_md5': None})

        if opt == '-s':
            ssl_mode = True

@@ -82,12 +72,9 @@ def main():
                "Or use the -C parameter to create the directory.".format(app_config['datastore_path']), file=sys.stderr)
            sys.exit(2)

    datastore = store.ChangeDetectionStore(datastore_path=app_config['datastore_path'], version_tag=__version__)
    app = changedetection_app(app_config, datastore)

    signal.signal(signal.SIGTERM, sigterm_handler)

    # Go into cleanup mode
    if do_cleanup:
        datastore.remove_unused_snapshots()

@@ -124,3 +111,4 @@ def main():
    else:
        eventlet.wsgi.server(eventlet.listen((host, int(port))), app)
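The `sigterm_handler` in that module flushes the datastore to disk when the process is asked to stop, which is why `datastore` is kept at module level. A minimal, self-contained sketch of the same pattern: the `Store` class and its `sync_to_json()` method are hypothetical stand-ins for `store.ChangeDetectionStore`, and the write goes through a temporary file and `os.replace` so an interrupted write does not leave a truncated JSON file (the replace is atomic on POSIX filesystems). The usage calls the handler directly instead of sending a real signal, which keeps it portable.

```python
import json
import os
import signal
import tempfile


class Store:
    """Hypothetical in-memory datastore that can persist itself as JSON."""

    def __init__(self, path: str):
        self.path = path
        self.data = {"watching": {}}

    def sync_to_json(self) -> None:
        # Write to a temporary file in the same directory, then swap it in.
        directory = os.path.dirname(self.path) or "."
        fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
        with os.fdopen(fd, "w") as f:
            json.dump(self.data, f)
        os.replace(tmp, self.path)


datastore = None  # module-level, so the signal handler can reach it


def sigterm_handler(_signo, _stack_frame):
    """Persist the datastore when the process is asked to terminate."""
    if datastore is not None:
        datastore.sync_to_json()


def install_handlers(store: Store) -> None:
    """Register the store and the SIGTERM handler (main thread only)."""
    global datastore
    datastore = store
    signal.signal(signal.SIGTERM, sigterm_handler)
```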

@@ -1,69 +1,23 @@

|

||||

from abc import ABC, abstractmethod

|

||||

import chardet

|

||||

import json

|

||||

import os

|

||||

from selenium import webdriver

|

||||

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

|

||||

from selenium.webdriver.common.proxy import Proxy as SeleniumProxy

|

||||

from selenium.common.exceptions import WebDriverException

|

||||

import requests

|

||||

import time

|

||||

import sys

|

||||

import urllib3.exceptions

|

||||

|

||||

|

||||

class Non200ErrorCodeReceived(Exception):

|

||||

def __init__(self, status_code, url, screenshot=None, xpath_data=None, page_html=None):

|

||||

# Set this so we can use it in other parts of the app

|

||||

self.status_code = status_code

|

||||

self.url = url

|

||||

self.screenshot = screenshot

|

||||

self.xpath_data = xpath_data

|

||||

self.page_text = None

|

||||

|

||||

if page_html:

|

||||

from changedetectionio import html_tools

|

||||

self.page_text = html_tools.html_to_text(page_html)

|

||||

return

|

||||

|

||||

|

||||

class JSActionExceptions(Exception):

|

||||

def __init__(self, status_code, url, screenshot, message=''):

|

||||

self.status_code = status_code

|

||||

self.url = url

|

||||

self.screenshot = screenshot

|

||||

self.message = message

|

||||

return

|

||||

|

||||

class PageUnloadable(Exception):

|

||||

def __init__(self, status_code, url, screenshot=False, message=False):

|

||||

# Set this so we can use it in other parts of the app

|

||||

self.status_code = status_code

|

||||

self.url = url

|

||||

self.screenshot = screenshot

|

||||

self.message = message

|

||||

return

|

||||

|

||||

class EmptyReply(Exception):

|

||||

def __init__(self, status_code, url, screenshot=None):

|

||||

def __init__(self, status_code, url):

|

||||

# Set this so we can use it in other parts of the app

|

||||

self.status_code = status_code

|

||||

self.url = url

|

||||

self.screenshot = screenshot

|

||||

return

|

||||

|

||||

class ScreenshotUnavailable(Exception):

|

||||

def __init__(self, status_code, url, page_html=None):

|

||||

# Set this so we can use it in other parts of the app

|

||||

self.status_code = status_code

|

||||

self.url = url

|

||||

if page_html:

|

||||

from html_tools import html_to_text

|

||||

self.page_text = html_to_text(page_html)

|

||||

return

|

||||

|

||||

class ReplyWithContentButNoText(Exception):

|

||||

def __init__(self, status_code, url, screenshot=None):

|

||||

# Set this so we can use it in other parts of the app

|

||||

self.status_code = status_code

|

||||

self.url = url

|

||||

self.screenshot = screenshot

|

||||

return

|

||||

pass

|

||||

|

||||

class Fetcher():

|

||||

error = None

|

||||

@@ -71,142 +25,7 @@ class Fetcher():

|

||||

content = None

|

||||

headers = None

|

||||

|

||||

fetcher_description = "No description"

|

||||

webdriver_js_execute_code = None

|

||||

xpath_element_js = """

|

||||

// Include the getXpath script directly, easier than fetching

|

||||

!function(e,n){"object"==typeof exports&&"undefined"!=typeof module?module.exports=n():"function"==typeof define&&define.amd?define(n):(e=e||self).getXPath=n()}(this,function(){return function(e){var n=e;if(n&&n.id)return'//*[@id="'+n.id+'"]';for(var o=[];n&&Node.ELEMENT_NODE===n.nodeType;){for(var i=0,r=!1,d=n.previousSibling;d;)d.nodeType!==Node.DOCUMENT_TYPE_NODE&&d.nodeName===n.nodeName&&i++,d=d.previousSibling;for(d=n.nextSibling;d;){if(d.nodeName===n.nodeName){r=!0;break}d=d.nextSibling}o.push((n.prefix?n.prefix+":":"")+n.localName+(i||r?"["+(i+1)+"]":"")),n=n.parentNode}return o.length?"/"+o.reverse().join("/"):""}});

|

||||

|

||||

|

||||

const findUpTag = (el) => {

|

||||

let r = el

|

||||

chained_css = [];

|

||||

depth=0;

|

||||

|

||||

// Strategy 1: Keep going up until we hit an ID tag, imagine it's like #list-widget div h4

|

||||

while (r.parentNode) {

|

||||

if(depth==5) {

|

||||

break;

|

||||

}

|

||||

if('' !==r.id) {

|

||||

chained_css.unshift("#"+CSS.escape(r.id));

|

||||

final_selector= chained_css.join(' > ');

|

||||

// Be sure theres only one, some sites have multiples of the same ID tag :-(

|

||||

if (window.document.querySelectorAll(final_selector).length ==1 ) {

|

||||

return final_selector;

|

||||

}

|

||||

return null;

|

||||

} else {

|

||||

chained_css.unshift(r.tagName.toLowerCase());

|

||||

}

|

||||

r=r.parentNode;

|

||||

depth+=1;

|

||||

}

|

||||

return null;

|

||||

}

// @todo - if it's SVG or IMG, go into image diff mode
var elements = window.document.querySelectorAll("div,span,form,table,tbody,tr,td,a,p,ul,li,h1,h2,h3,h4, header, footer, section, article, aside, details, main, nav, section, summary");
var size_pos=[];
// after page fetch, inject this JS
// build a map of all elements and their positions (maybe only those that include text?)
var bbox;
for (var i = 0; i < elements.length; i++) {
bbox = elements[i].getBoundingClientRect();

// forget really small ones
if (bbox['width'] <20 && bbox['height'] < 20 ) {
continue;
}

// @todo the getXPath kind of sucks, it doesn't know when there is, for example, just one ID sometimes
// it should not traverse when we know we can anchor off just an ID one level up etc..
// maybe, get current class or id, keep traversing up looking for only class or id until there is just one match

// 1st primitive - if it has class, try joining it all and select, if there's only one.. well that's us.
xpath_result=false;

try {
var d= findUpTag(elements[i]);
if (d) {
xpath_result =d;
}
} catch (e) {
console.log(e);
}

// You could swap it and default to getXPath and then try the smarter one
// default back to the less intelligent one
if (!xpath_result) {
try {
// I've seen on FB and eBay that this doesn't work
// ReferenceError: getXPath is not defined at eval (eval at evaluate (:152:29), <anonymous>:67:20) at UtilityScript.evaluate (<anonymous>:159:18) at UtilityScript.<anonymous> (<anonymous>:1:44)
xpath_result = getXPath(elements[i]);
} catch (e) {
console.log(e);
continue;
}
}

if(window.getComputedStyle(elements[i]).visibility === "hidden") {
continue;
}

size_pos.push({
xpath: xpath_result,
width: Math.round(bbox['width']),
height: Math.round(bbox['height']),
left: Math.floor(bbox['left']),
top: Math.floor(bbox['top']),
childCount: elements[i].childElementCount
});
}
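For each element, the loop skips it only when it is under 20 px in *both* width and height. Otherwise it records width/height with `Math.round` and left/top with `Math.floor`. The sketch below models that per-element transform in Python, assuming a plain dict stands in for the `DOMRect`. It leaves out selector generation and the visibility check. `math.floor(x + 0.5)` is used because Python's built-in `round()` rounds halves to even, unlike JS `Math.round`.

```python
import math
from typing import Optional


def size_pos_entry(selector: str, rect: dict, child_count: int) -> Optional[dict]:
    """Turn a bounding box (hypothetical dict: width/height/left/top) into a size_pos record."""
    # Same filter as the JS: drop only elements that are small in BOTH dimensions.
    if rect["width"] < 20 and rect["height"] < 20:
        return None
    return {
        "xpath": selector,
        "width": math.floor(rect["width"] + 0.5),    # behaves like JS Math.round
        "height": math.floor(rect["height"] + 0.5),
        "left": math.floor(rect["left"]),            # JS Math.floor
        "top": math.floor(rect["top"]),
        "childCount": child_count,
    }
```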

// inject the current one set in the css_filter, which may be a CSS rule
// used for displaying the current one in VisualSelector, where it's not one we generated.
if (css_filter.length) {
q=false;
try {
// is it xpath?
if (css_filter.startsWith('/') || css_filter.startsWith('xpath:')) {
q=document.evaluate(css_filter.replace('xpath:',''), document, null, XPathResult.FIRST_ORDERED_NODE_TYPE, null).singleNodeValue;
} else {
q=document.querySelector(css_filter);
}
} catch (e) {
// Maybe catch DOMException and alert?
console.log(e);
}
bbox=false;
if(q) {
bbox = q.getBoundingClientRect();
}

if (bbox && bbox['width'] >0 && bbox['height']>0) {
size_pos.push({
xpath: css_filter,
width: bbox['width'],
height: bbox['height'],
left: bbox['left'],
top: bbox['top'],
childCount: q.childElementCount
});
}
}
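The block above chooses how to resolve the stored `css_filter`. A rule starting with `/` or `xpath:` is evaluated as XPath, with the `xpath:` prefix removed. Anything else goes to `querySelector()` as CSS. The same classification as a small Python helper (the function name is hypothetical):

```python
from typing import Tuple


def classify_filter(rule: str) -> Tuple[str, str]:
    """Return ("xpath" | "css", expression) for a stored filter rule."""
    if rule.startswith("/") or rule.startswith("xpath:"):
        # JS String.replace with a string pattern replaces only the first match,
        # so count=1 keeps the behaviour identical.
        return "xpath", rule.replace("xpath:", "", 1)
    return "css", rule
```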

// Window.width required for proper scaling in the frontend
return {'size_pos':size_pos, 'browser_width': window.innerWidth};
"""
xpath_data = None

# Will be needed in the future by the VisualSelector, always get this where possible.
screenshot = False
system_http_proxy = os.getenv('HTTP_PROXY')
system_https_proxy = os.getenv('HTTPS_PROXY')

# Time on top of the system-defined env minimum time
render_extract_delay = 0
fetcher_description ="No description"

@abstractmethod
def get_error(self):
@@ -219,8 +38,7 @@ class Fetcher():
request_headers,
request_body,
request_method,
ignore_status_codes=False,
current_css_filter=None):
ignore_status_codes=False):
# Should set self.error, self.status_code and self.content
pass

@@ -228,6 +46,10 @@ class Fetcher():
def quit(self):
return

@abstractmethod
def screenshot(self):
return

@abstractmethod
def get_last_status_code(self):
return self.status_code
@@ -237,208 +59,29 @@ class Fetcher():
def is_ready(self):
return True


# Maybe for the future, each fetcher provides its own diff output, could be used for text, image
# the current one would return javascript output (as we use JS to generate the diff)
#
# Returns tuple(mime_type, stream)
# @abstractmethod
# def return_diff(self, stream_a, stream_b):
# return

def available_fetchers():
# See the if statement at the bottom of this file for how we switch between playwright and webdriver
import inspect
p = []
for name, obj in inspect.getmembers(sys.modules[__name__], inspect.isclass):
if inspect.isclass(obj):
# @todo html_ is maybe better as fetcher_ or something
# In this case, make sure to edit the default one in store.py and fetch_site_status.py
if name.startswith('html_'):
t = tuple([name, obj.fetcher_description])
p.append(t)
import inspect
from changedetectionio import content_fetcher
p=[]
for name, obj in inspect.getmembers(content_fetcher):
if inspect.isclass(obj):
# @todo html_ is maybe better as fetcher_ or something
# In this case, make sure to edit the default one in store.py and fetch_site_status.py
if "html_" in name:
t=tuple([name,obj.fetcher_description])
p.append(t)

return p
return p
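Both versions of `available_fetchers()` enumerate the module's classes with `inspect.getmembers()` and keep the ones whose name marks them as fetchers. One version uses `startswith('html_')`. The other uses `"html_" in name`, a substring test that would also match `base_html_playwright` and `base_html_webdriver`. Below is a self-contained sketch of the prefix approach. It uses a throwaway module built with `types.ModuleType` in place of `changedetectionio.content_fetcher`. The demo classes are illustrative copies, not the real fetchers.

```python
import inspect
import types


def available_fetchers(module: types.ModuleType, prefix: str = "html_") -> list:
    """List (class_name, description) pairs for classes whose name starts with `prefix`."""
    found = []
    for name, obj in inspect.getmembers(module, inspect.isclass):
        if name.startswith(prefix):
            found.append((name, getattr(obj, "fetcher_description", "No description")))
    return found


# Throwaway module standing in for changedetectionio.content_fetcher (assumption).
demo = types.ModuleType("demo_fetchers")


class base_html_playwright:
    fetcher_description = "Playwright Chromium/Javascript"


class html_requests:
    fetcher_description = "Basic fast Plaintext/HTTP Client"


demo.base_html_playwright = base_html_playwright
demo.html_requests = html_requests
demo.html_webdriver = base_html_playwright  # module-level alias, like the switch at the end of the file

print(available_fetchers(demo))
# [('html_requests', 'Basic fast Plaintext/HTTP Client'), ('html_webdriver', 'Playwright Chromium/Javascript')]
```

Because the alias `html_webdriver` is a module attribute, it is listed under that name, while the `base_` class itself is excluded.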

class base_html_playwright(Fetcher):
fetcher_description = "Playwright {}/Javascript".format(
os.getenv("PLAYWRIGHT_BROWSER_TYPE", 'chromium').capitalize()
)
if os.getenv("PLAYWRIGHT_DRIVER_URL"):
fetcher_description += " via '{}'".format(os.getenv("PLAYWRIGHT_DRIVER_URL"))

browser_type = ''
command_executor = ''

# Configs for Proxy setup
# In the ENV vars, it is prefixed with "playwright_proxy_", so it is for example "playwright_proxy_server"
playwright_proxy_settings_mappings = ['bypass', 'server', 'username', 'password']

proxy = None

def __init__(self, proxy_override=None):

# .strip('"') is going to save someone a lot of time when they accidentally wrap the env value
self.browser_type = os.getenv("PLAYWRIGHT_BROWSER_TYPE", 'chromium').strip('"')
self.command_executor = os.getenv(
"PLAYWRIGHT_DRIVER_URL",
'ws://playwright-chrome:3000'
).strip('"')

# If any proxy settings are enabled, then we should set up the proxy object
proxy_args = {}
for k in self.playwright_proxy_settings_mappings:
v = os.getenv('playwright_proxy_' + k, False)
if v:
proxy_args[k] = v.strip('"')

if proxy_args:
self.proxy = proxy_args

# allow per-watch proxy selection override
if proxy_override:
# https://playwright.dev/docs/network#http-proxy
from urllib.parse import urlparse
parsed = urlparse(proxy_override)
proxy_url = "{}://{}:{}".format(parsed.scheme, parsed.hostname, parsed.port)
self.proxy = {'server': proxy_url}
if parsed.username:
self.proxy['username'] = parsed.username
if parsed.password:
self.proxy['password'] = parsed.password
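`__init__` builds Playwright's `proxy` option from `playwright_proxy_*` environment variables. It then lets a per-watch proxy URL replace that option, using `urllib.parse.urlparse` to split the credentials out of the URL. The sketch below is dependency-free and takes the environment as a dict so it can be tested (the function name is hypothetical). One difference is deliberate: when the URL has no explicit port, the original format string produces `...:None`, so the sketch omits the port.

```python
from typing import Mapping, Optional
from urllib.parse import urlparse

SETTINGS = ["bypass", "server", "username", "password"]


def playwright_proxy(env: Mapping[str, str], proxy_override: Optional[str] = None) -> Optional[dict]:
    """Return a dict for Playwright's `proxy=` option, or None if nothing is configured."""
    proxy = None
    # playwright_proxy_server, playwright_proxy_username, ...; stray quotes are stripped
    args = {k: env[f"playwright_proxy_{k}"].strip('"') for k in SETTINGS if env.get(f"playwright_proxy_{k}")}
    if args:
        proxy = args
    if proxy_override:
        parsed = urlparse(proxy_override)
        server = f"{parsed.scheme}://{parsed.hostname}"
        if parsed.port is not None:
            server += f":{parsed.port}"
        # A per-watch proxy replaces the env-based settings entirely.
        proxy = {"server": server}
        if parsed.username:
            proxy["username"] = parsed.username
        if parsed.password:
            proxy["password"] = parsed.password
    return proxy
```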

def run(self,
url,
timeout,
request_headers,
request_body,
request_method,
ignore_status_codes=False,
current_css_filter=None):

from playwright.sync_api import sync_playwright
import playwright._impl._api_types
from playwright._impl._api_types import Error, TimeoutError
response = None
with sync_playwright() as p:
browser_type = getattr(p, self.browser_type)

# Seemed to cause a connection Exception even though I can see it connect
# self.browser = browser_type.connect(self.command_executor, timeout=timeout*1000)
# 60,000 connection timeout only
browser = browser_type.connect_over_cdp(self.command_executor, timeout=60000)

# Set user agent to prevent Cloudflare from blocking the browser
# Use the default one configured in the App.py model that's passed from fetch_site_status.py
context = browser.new_context(
user_agent=request_headers['User-Agent'] if request_headers.get('User-Agent') else 'Mozilla/5.0',
proxy=self.proxy,
# This is needed to enable JavaScript execution on GitHub and others
bypass_csp=True,
# Should never be needed
accept_downloads=False
)

if len(request_headers):
context.set_extra_http_headers(request_headers)

page = context.new_page()
try:
page.set_default_navigation_timeout(90000)
page.set_default_timeout(90000)

# Listen for all console events and handle errors
page.on("console", lambda msg: print(f"Playwright console: Watch URL: {url} {msg.type}: {msg.text} {msg.args}"))

# Bug - never set viewport size BEFORE page.goto

# Waits for the next navigation. Using Python context manager
# prevents a race condition between clicking and waiting for a navigation.
with page.expect_navigation():
response = page.goto(url, wait_until='load')


except playwright._impl._api_types.TimeoutError as e:
context.close()
browser.close()
# This can be ok, we will try to grab what we could retrieve
pass

except Exception as e:
print("other exception when page.goto")
print(str(e))
context.close()
browser.close()
# str(e) works for any exception; only some exception types define .message
raise PageUnloadable(url=url, status_code=None, message=str(e))

if response is None:
context.close()
browser.close()
print("response object was none")
raise EmptyReply(url=url, status_code=None)

# Bug 2(?) Set the viewport size AFTER loading the page
page.set_viewport_size({"width": 1280, "height": 1024})
extra_wait = int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)) + self.render_extract_delay
time.sleep(extra_wait)

if self.webdriver_js_execute_code is not None:
try:
page.evaluate(self.webdriver_js_execute_code)
except Exception as e:
# Is it possible to get a screenshot?
error_screenshot = False
try:
page.screenshot(type='jpeg',
clip={'x': 1.0, 'y': 1.0, 'width': 1280, 'height': 1024},
quality=1)

# The actual screenshot
error_screenshot = page.screenshot(type='jpeg',
full_page=True,
quality=int(os.getenv("PLAYWRIGHT_SCREENSHOT_QUALITY", 72)))
except Exception as s:
pass

raise JSActionExceptions(status_code=response.status, screenshot=error_screenshot, message=str(e), url=url)

self.content = page.content()
self.status_code = response.status
self.headers = response.all_headers()

if current_css_filter is not None:
page.evaluate("var css_filter={}".format(json.dumps(current_css_filter)))
else:
page.evaluate("var css_filter=''")

self.xpath_data = page.evaluate("async () => {" + self.xpath_element_js + "}")

# Bug 3 in Playwright screenshot handling
# Some bug where it gives the wrong screenshot size, but making a request with the clip set first seems to solve it
# JPEG is better here because the screenshots can be very very large

# Screenshots also travel via the ws:// (websocket) meaning that the binary data is base64 encoded
# which will significantly increase the IO size between the server and client, it's recommended to use the lowest
# acceptable screenshot quality here
try:
# Quality set to 1 because it's not used, just used as a work-around for a bug, no need to change this.
page.screenshot(type='jpeg', clip={'x': 1.0, 'y': 1.0, 'width': 1280, 'height': 1024}, quality=1)
# The actual screenshot
self.screenshot = page.screenshot(type='jpeg', full_page=True, quality=int(os.getenv("PLAYWRIGHT_SCREENSHOT_QUALITY", 72)))
except Exception as e:
context.close()
browser.close()
raise ScreenshotUnavailable(url=url, status_code=None)

if len(self.content.strip()) == 0:
context.close()
browser.close()
print("Content was empty")
raise EmptyReply(url=url, status_code=None, screenshot=self.screenshot)

context.close()
browser.close()

if not ignore_status_codes and self.status_code!=200:
raise Non200ErrorCodeReceived(url=url, status_code=self.status_code, page_html=self.content, screenshot=self.screenshot)
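The screenshot comments above note that images cross the `ws://` connection base64-encoded. Base64 emits 4 output bytes for every 3 input bytes (plus padding), so the payload grows by about a third. That overhead is why a lower JPEG quality pays off. A quick stdlib check of the ratio, using a zero-filled buffer as an illustrative stand-in for a screenshot:

```python
import base64


def base64_size(n_bytes: int) -> int:
    """Length of standard, padded base64 output for n_bytes of binary input."""
    return 4 * ((n_bytes + 2) // 3)


# Illustrative only: a zero-filled 1.5 MB buffer standing in for a full-page JPEG.
raw = bytes(1_500_000)
encoded = base64.b64encode(raw)
assert len(encoded) == base64_size(len(raw))
print(len(raw), len(encoded), round(len(encoded) / len(raw), 3))  # 1500000 2000000 1.333
```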

class base_html_webdriver(Fetcher):
class html_webdriver(Fetcher):
if os.getenv("WEBDRIVER_URL"):
fetcher_description = "WebDriver Chrome/Javascript via '{}'".format(os.getenv("WEBDRIVER_URL"))
else:
@@ -451,11 +94,12 @@ class base_html_webdriver(Fetcher):
selenium_proxy_settings_mappings = ['proxyType', 'ftpProxy', 'httpProxy', 'noProxy',
'proxyAutoconfigUrl', 'sslProxy', 'autodetect',
'socksProxy', 'socksVersion', 'socksUsername', 'socksPassword']
proxy = None

def __init__(self, proxy_override=None):
from selenium.webdriver.common.proxy import Proxy as SeleniumProxy


proxy=None

def __init__(self):
# .strip('"') is going to save someone a lot of time when they accidentally wrap the env value
self.command_executor = os.getenv("WEBDRIVER_URL", 'http://browser-chrome:4444/wd/hub').strip('"')

@@ -466,16 +110,6 @@ class base_html_webdriver(Fetcher):
if v:
proxy_args[k] = v.strip('"')

# Map back standard HTTP_ and HTTPS_PROXY to webDriver httpProxy/sslProxy
# proxy_args is keyed by the bare Selenium names from selenium_proxy_settings_mappings
if not proxy_args.get('httpProxy') and self.system_http_proxy:
proxy_args['httpProxy'] = self.system_http_proxy
if not proxy_args.get('sslProxy') and self.system_https_proxy:
proxy_args['sslProxy'] = self.system_https_proxy

# Allows overriding the proxy on a per-request basis
if proxy_override is not None:
proxy_args['httpProxy'] = proxy_override

if proxy_args:
self.proxy = SeleniumProxy(raw=proxy_args)
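The mapping above copies the standard `HTTP_PROXY`/`HTTPS_PROXY` values into the Selenium `Proxy(raw=...)` keys `httpProxy` and `sslProxy`, unless those keys were already configured explicitly. A per-watch override then wins for HTTP. The sketch below models only the dictionary step and does not need Selenium. It assumes that each `webdriver_<key>` environment variable is stored under the bare `<key>`; the loop that reads the variables is elided in this diff. The function name is hypothetical.

```python
from typing import Mapping, Optional

SELENIUM_KEYS = ["proxyType", "ftpProxy", "httpProxy", "noProxy",
                 "proxyAutoconfigUrl", "sslProxy", "autodetect",
                 "socksProxy", "socksVersion", "socksUsername", "socksPassword"]


def selenium_proxy_args(env: Mapping[str, str], proxy_override: Optional[str] = None) -> dict:
    """Build the dict that would be passed to selenium's Proxy(raw=...)."""
    # Assumption: webdriver_<key> env vars map onto the bare Selenium key.
    args = {k: env[f"webdriver_{k}"].strip('"') for k in SELENIUM_KEYS if env.get(f"webdriver_{k}")}
    # Fall back to the conventional proxy variables only when not set explicitly.
    if not args.get("httpProxy") and env.get("HTTP_PROXY"):
        args["httpProxy"] = env["HTTP_PROXY"]
    if not args.get("sslProxy") and env.get("HTTPS_PROXY"):
        args["sslProxy"] = env["HTTPS_PROXY"]
    # A per-watch override always wins for plain HTTP.
    if proxy_override is not None:
        args["httpProxy"] = proxy_override
    return args
```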

@@ -485,12 +119,8 @@ class base_html_webdriver(Fetcher):
request_headers,
request_body,
request_method,
ignore_status_codes=False,
current_css_filter=None):
ignore_status_codes=False):

from selenium import webdriver
from selenium.webdriver.common.desired_capabilities import DesiredCapabilities
from selenium.common.exceptions import WebDriverException
# request_body, request_method unused for now, until some magic in the future happens.

# check env for WEBDRIVER_URL
@@ -506,26 +136,19 @@ class base_html_webdriver(Fetcher):
self.quit()
raise

self.driver.set_window_size(1280, 1024)
self.driver.implicitly_wait(int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)))

if self.webdriver_js_execute_code is not None:
self.driver.execute_script(self.webdriver_js_execute_code)
# Selenium doesn't automatically wait for actions as well as Playwright does, so wait again
self.driver.implicitly_wait(int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)))

self.screenshot = self.driver.get_screenshot_as_png()

# @todo - how to check this? is it possible?
self.status_code = 200
# @todo somehow we should try to get this working for WebDriver
# raise EmptyReply(url=url, status_code=r.status_code)

# @todo - dom wait loaded?
time.sleep(int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)) + self.render_extract_delay)
time.sleep(int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)))
self.content = self.driver.page_source
self.headers = {}

def screenshot(self):
return self.driver.get_screenshot_as_png()

# Does the connection to the webdriver work? Run a test connection.
def is_ready(self):
from selenium import webdriver
@@ -547,41 +170,24 @@ class base_html_webdriver(Fetcher):
except Exception as e:
print("Exception in chrome shutdown/quit" + str(e))


# "html_requests" is listed as the default fetcher in store.py!
class html_requests(Fetcher):
fetcher_description = "Basic fast Plaintext/HTTP Client"

def __init__(self, proxy_override=None):
self.proxy_override = proxy_override

def run(self,
url,
timeout,
request_headers,
request_body,
request_method,
ignore_status_codes=False,
current_css_filter=None):

proxies = {}

# Allows overriding the proxy on a per-request basis
if self.proxy_override:
proxies = {'http': self.proxy_override, 'https': self.proxy_override, 'ftp': self.proxy_override}
else:
if self.system_http_proxy:
proxies['http'] = self.system_http_proxy
if self.system_https_proxy:
proxies['https'] = self.system_https_proxy
ignore_status_codes=False):

r = requests.request(method=request_method,
data=request_body,
url=url,
headers=request_headers,
timeout=timeout,
proxies=proxies,
verify=False)
data=request_body,
url=url,
headers=request_headers,
timeout=timeout,
verify=False)

# If the response did not tell us what encoding format to expect, then use chardet to override what `requests` thinks.
# For example - some sites don't tell us it's utf-8, but return utf-8 content
@@ -592,24 +198,12 @@ class html_requests(Fetcher):
if encoding:
r.encoding = encoding

if not r.content or not len(r.content):
raise EmptyReply(url=url, status_code=r.status_code)

# @todo test this
# @todo maybe you really want to test zero-byte return pages?
if r.status_code != 200 and not ignore_status_codes:
# maybe check with content works?
raise Non200ErrorCodeReceived(url=url, status_code=r.status_code, page_html=r.text)
if (not ignore_status_codes and not r) or not r.content or not len(r.content):
raise EmptyReply(url=url, status_code=r.status_code)

self.status_code = r.status_code
self.content = r.text
self.headers = r.headers
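`html_requests.run()` gives a per-watch `proxy_override` priority for every scheme. Otherwise it falls back to the system `HTTP_PROXY`/`HTTPS_PROXY` values, producing the scheme-keyed mapping that `requests.request(..., proxies=...)` expects. The sketch below is that selection as a pure function, so it can be checked without network access (name and signature are hypothetical):

```python
from typing import Dict, Optional


def requests_proxies(proxy_override: Optional[str],
                     system_http: Optional[str],
                     system_https: Optional[str]) -> Dict[str, str]:
    """Return the `proxies=` mapping for requests, keyed by URL scheme."""
    if proxy_override:
        # One proxy for every scheme the fetcher might hit.
        return {"http": proxy_override, "https": proxy_override, "ftp": proxy_override}
    proxies = {}
    if system_http:
        proxies["http"] = system_http
    if system_https:
        proxies["https"] = system_https
    return proxies
```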

# Decide which is the 'real' HTML webdriver, this is more a system-wide config
# rather than site-specific.
use_playwright_as_chrome_fetcher = os.getenv('PLAYWRIGHT_DRIVER_URL', False)
if use_playwright_as_chrome_fetcher:
html_webdriver = base_html_playwright
else:
html_webdriver = base_html_webdriver

@@ -1,72 +1,27 @@
import hashlib
import logging
import os
import re
import time
import urllib3

from inscriptis import get_text
from changedetectionio import content_fetcher, html_tools

urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)


# Some common stuff here that can be moved to a base class
# (set_proxy_from_list)
class perform_site_check():
screenshot = None
xpath_data = None

def __init__(self, *args, datastore, **kwargs):
super().__init__(*args, **kwargs)
self.datastore = datastore

# If there was a proxy list enabled, figure out what proxy_args/which proxy to use
# if watch.proxy use that
# fetcher.proxy_override = watch.proxy or main config proxy
# Allows overriding the proxy on a per-request basis
# ALWAYS use the first one if nothing is selected

def set_proxy_from_list(self, watch):
proxy_args = None
if self.datastore.proxy_list is None:
return None

# If it's a valid one
if any([watch['proxy'] in p for p in self.datastore.proxy_list]):
proxy_args = watch['proxy']

# not valid (including None), try the system one
else:
system_proxy = self.datastore.data['settings']['requests']['proxy']
# Is not None and exists
if any([system_proxy in p for p in self.datastore.proxy_list]):
proxy_args = system_proxy

# Fallback - Did not resolve anything, use the first available
if proxy_args is None:
proxy_args = self.datastore.proxy_list[0][0]

return proxy_args
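`set_proxy_from_list()` resolves a proxy in three steps. It takes the watch's own choice if it appears in the configured list, else the global `settings.requests.proxy` if that appears, else the first entry. The standalone sketch below replaces the datastore with plain arguments. It assumes, as the `proxy_list[0][0]` lookup suggests, that each list entry is a tuple whose first item is the proxy key. It compares against that key directly, whereas the original's `in p` would also match the entry's other fields. It also returns `None` for an empty list instead of raising `IndexError`.

```python
from typing import Optional, Sequence, Tuple


def resolve_proxy(proxy_list: Optional[Sequence[Tuple[str, ...]]],
                  watch_proxy: Optional[str],
                  system_proxy: Optional[str]) -> Optional[str]:
    """Pick the proxy key for a watch: watch choice, then system default, then first entry."""
    if proxy_list is None:
        return None
    keys = [entry[0] for entry in proxy_list]
    if watch_proxy in keys:
        return watch_proxy
    if system_proxy in keys:
        return system_proxy
    # Nothing valid selected: fall back to the first configured proxy, if any.
    return keys[0] if keys else None
```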

# Doesn't look like python supports forward slash auto enclosure in re.findall
# So convert it to inline flag "(?i)foobar" type configuration
def forward_slash_enclosed_regex_to_options(self, regex):
res = re.search(r'^/(.*?)/(\w+)$', regex, re.IGNORECASE)

if res:
# Global inline flags must lead the pattern; Python 3.11+ raises re.error otherwise
regex = '(?{}){}'.format(res.group(2), res.group(1))
else:
regex = '(?i)' + regex

return regex
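The helper above turns a `/pattern/flags` string into a pattern that carries inline flags. Python accepts global inline flags such as `(?i)` only at the very start of a pattern; Python 3.11 and later raise `re.error` when they appear elsewhere. The sketch below therefore places the flags first. Like the original, it defaults to case-insensitive matching when no slashes are given. JS-only flags such as `g` have no Python equivalent and would still be rejected by `re`.

```python
import re


def slash_regex_to_inline(regex: str) -> str:
    """Convert '/pattern/flags' to '(?flags)pattern'; bare patterns become case-insensitive."""
    res = re.search(r"^/(.*?)/(\w+)$", regex, re.IGNORECASE)
    if res:
        return "(?{}){}".format(res.group(2), res.group(1))
    return "(?i)" + regex


pattern = slash_regex_to_inline(r"/price:\s+\d+/i")
print(pattern)                                           # (?i)price:\s+\d+
print(re.findall(pattern, "PRICE:  42 and price: 7"))    # ['PRICE:  42', 'price: 7']
```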

def run(self, uuid):
timestamp = int(time.time()) # used for storage etc too

changed_detected = False
screenshot = False # as bytes
screenshot = False # as bytes
stripped_text_from_html = ""

watch = self.datastore.data['watching'][uuid]
@@ -92,229 +47,134 @@ class perform_site_check():
if 'Accept-Encoding' in request_headers and "br" in request_headers['Accept-Encoding']:
request_headers['Accept-Encoding'] = request_headers['Accept-Encoding'].replace(', br', '')

timeout = self.datastore.data['settings']['requests']['timeout']
url = self.datastore.get_val(uuid, 'url')
request_body = self.datastore.get_val(uuid, 'body')
request_method = self.datastore.get_val(uuid, 'method')
ignore_status_codes = self.datastore.data['watching'][uuid].get('ignore_status_codes', False)
# @todo check the failures are really handled how we expect

# source: support
is_source = False
if url.startswith('source:'):
url = url.replace('source:', '')
is_source = True

# Pluggable content fetcher
prefer_backend = watch['fetch_backend']
if hasattr(content_fetcher, prefer_backend):
klass = getattr(content_fetcher, prefer_backend)
else:
# If the klass doesn't exist, just use a default
klass = getattr(content_fetcher, "html_requests")
timeout = self.datastore.data['settings']['requests']['timeout']
url = self.datastore.get_val(uuid, 'url')
request_body = self.datastore.get_val(uuid, 'body')
request_method = self.datastore.get_val(uuid, 'method')
ignore_status_code = self.datastore.get_val(uuid, 'ignore_status_codes')


proxy_args = self.set_proxy_from_list(watch)
fetcher = klass(proxy_override=proxy_args)

# Configurable per-watch or global extra delay before extracting text (for webDriver types)
system_webdriver_delay = self.datastore.data['settings']['application'].get('webdriver_delay', None)
if watch['webdriver_delay'] is not None:
fetcher.render_extract_delay = watch['webdriver_delay']
elif system_webdriver_delay is not None:
fetcher.render_extract_delay = system_webdriver_delay
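The extra render delay is taken from the watch first and then from the global `webdriver_delay` setting. If neither is set, it stays at the fetcher's class default of `0`. The browser fetchers then sleep for `WEBDRIVER_DELAY_BEFORE_CONTENT_READY` (default 5) plus that value. The sketch below computes the combined wait, with hypothetical function names and the environment passed in as a dict:

```python
from typing import Mapping, Optional


def render_extract_delay(watch_delay: Optional[int], system_delay: Optional[int]) -> int:
    """Per-watch delay wins, then the global setting, else the class default of 0."""
    if watch_delay is not None:
        return watch_delay
    if system_delay is not None:
        return system_delay
    return 0


def total_wait_seconds(env: Mapping[str, str], watch_delay: Optional[int], system_delay: Optional[int]) -> int:
    """Base wait from the environment plus the resolved extra delay."""
    base = int(env.get("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5))
    return base + render_extract_delay(watch_delay, system_delay)
```

Because the checks use `is not None`, a per-watch delay of `0` still overrides a non-zero global setting.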

if watch['webdriver_js_execute_code'] is not None and watch['webdriver_js_execute_code'].strip():
fetcher.webdriver_js_execute_code = watch['webdriver_js_execute_code']

fetcher.run(url, timeout, request_headers, request_body, request_method, ignore_status_codes, watch['css_filter'])
fetcher.quit()

self.screenshot = fetcher.screenshot
self.xpath_data = fetcher.xpath_data

# Fetching complete, now filters
# @todo move to class / maybe inside of fetcher abstract base?

# @note: I feel like the following should be in a more obvious chain system
# - Check filter text
# - Is the checksum different?
# - Do we convert to JSON?
# https://stackoverflow.com/questions/41817578/basic-method-chaining ?
# return content().textfilter().jsonextract().checksumcompare() ?

is_json = 'application/json' in fetcher.headers.get('Content-Type', '')
is_html = not is_json

# source: support, basically treat it as plaintext
if is_source:
is_html = False
is_json = False

css_filter_rule = watch['css_filter']
subtractive_selectors = watch.get(
"subtractive_selectors", []
) + self.datastore.data["settings"]["application"].get(
"global_subtractive_selectors", []
)

has_filter_rule = css_filter_rule and len(css_filter_rule.strip())
has_subtractive_selectors = subtractive_selectors and len(subtractive_selectors[0].strip())
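After fetching, the code makes three decisions:

- The content type comes from the `Content-Type` header: `application/json` means JSON, and anything else is treated as HTML.
- A `source:` URL forces plain-text handling.
- Per-watch subtractive selectors are concatenated with the global ones.

The sketch below covers those decisions using plain dicts for the headers and settings (function names are hypothetical). The case-insensitive header lookup is an added assumption; the original calls `.get('Content-Type', '')` directly on whatever mapping the fetcher returned.

```python
from typing import List, Mapping, Tuple


def classify_content(headers: Mapping[str, str], is_source: bool) -> Tuple[bool, bool]:
    """Return (is_json, is_html) for a fetched response."""
    # Assumption: normalise header names so 'Content-Type' and 'content-type' both match.
    lowered = {k.lower(): v for k, v in headers.items()}
    is_json = "application/json" in lowered.get("content-type", "")
    if is_source:
        # source: URLs are treated as plain text, neither JSON nor HTML.
        return False, False
    return is_json, not is_json


def merged_subtractive_selectors(watch: Mapping, app_settings: Mapping) -> List[str]:
    """Per-watch selectors first, then the global ones."""
    return list(watch.get("subtractive_selectors", [])) + list(app_settings.get("global_subtractive_selectors", []))
```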